Website: royf.org

sigai.acm.org/main/2025/03...

#SIGAIAward

🔗 Register now: forms.gle/QZS1GkZhYGRF...

Register now to save €100 on your ticket. Early bird prices are only available until 1st April.

This probably explains the NSF panel suspensions as well.

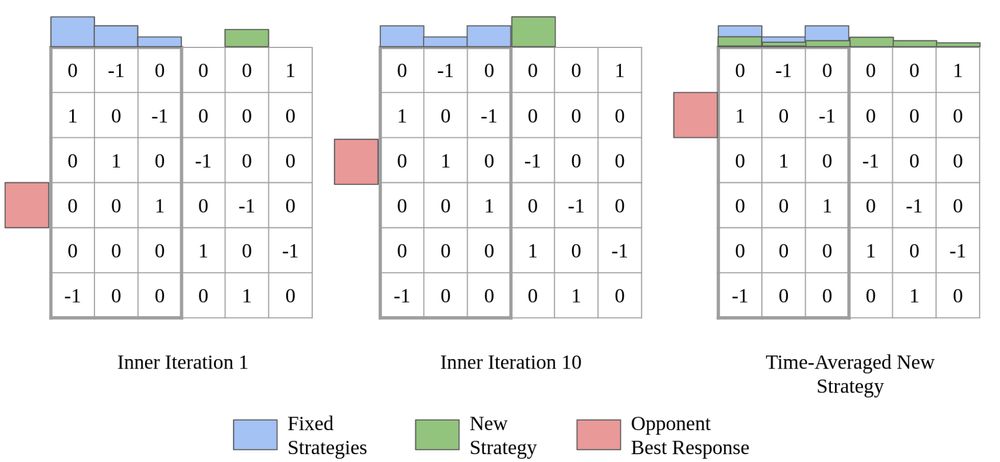

You want an agent's behavior that can't be exploited by an adversary (zero-sum Nash equilibrium = NE). The world is big, so you restrict the agent to stochastic mixing of a small population. How should you grow the population?
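
To make the setup concrete, here is a minimal sketch of one familiar baseline for growing the population: solve the restricted game, then add the agent's best response to the adversary's equilibrium mixture (in the spirit of the double oracle algorithm). This is not necessarily the growth rule the post has in mind. The rock-paper-scissors payoffs, the fictitious-play solver, and every function name are assumptions chosen for illustration.

```python
# Rock-paper-scissors payoffs to the agent (row player); the adversary
# (column player) receives the negative. Illustrative game, not from the post.
RPS = [[0, -1, 1],
       [1, 0, -1],
       [-1, 1, 0]]


def fictitious_play(payoff, rows, iters=20000):
    """Approximate the NE when the agent may only mix over `rows`.

    Returns (p, q): the agent's mixture over `rows` and the adversary's
    mixture over all columns, as empirical best-response frequencies.
    """
    n_cols = len(payoff[0])
    row_counts = [0] * len(rows)
    col_counts = [0] * n_cols
    row_counts[0] = col_counts[0] = 1  # arbitrary initial plays
    for _ in range(iters):
        # Each side best-responds to the other's empirical play so far.
        row_vals = [sum(payoff[r][c] * col_counts[c] for c in range(n_cols))
                    for r in rows]
        col_vals = [sum(payoff[r][c] * row_counts[i] for i, r in enumerate(rows))
                    for c in range(n_cols)]
        row_counts[row_vals.index(max(row_vals))] += 1
        col_counts[col_vals.index(min(col_vals))] += 1
    p = [k / sum(row_counts) for k in row_counts]
    q = [k / sum(col_counts) for k in col_counts]
    return p, q


def worst_case(payoff, rows, p):
    """Payoff the mixture `p` over `rows` guarantees against any adversary."""
    n_cols = len(payoff[0])
    return min(sum(p[i] * payoff[r][c] for i, r in enumerate(rows))
               for c in range(n_cols))


def best_response_row(payoff, q):
    """Pure agent strategy (over ALL rows) that does best against mixture q."""
    vals = [sum(x * w for x, w in zip(row, q)) for row in payoff]
    return vals.index(max(vals))


def grow_population(payoff, start_row=0, max_rounds=10):
    """Grow the population by best responses until nothing new is found."""
    population = [start_row]
    history = []
    for _ in range(max_rounds):
        p, q = fictitious_play(payoff, population)
        history.append((list(population), worst_case(payoff, population, p)))
        new_row = best_response_row(payoff, q)
        if new_row in population:
            break  # the best response is already available: stop growing
        population.append(new_row)
    return history


for population, guaranteed in grow_population(RPS):
    print(population, round(guaranteed, 3))
```

In this toy game the guaranteed payoff should climb from -1 with one strategy, to roughly -1/3 with two, to roughly 0 (the game's value) with all three. Fictitious play is only an approximate solver, so the last two numbers are not exact.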