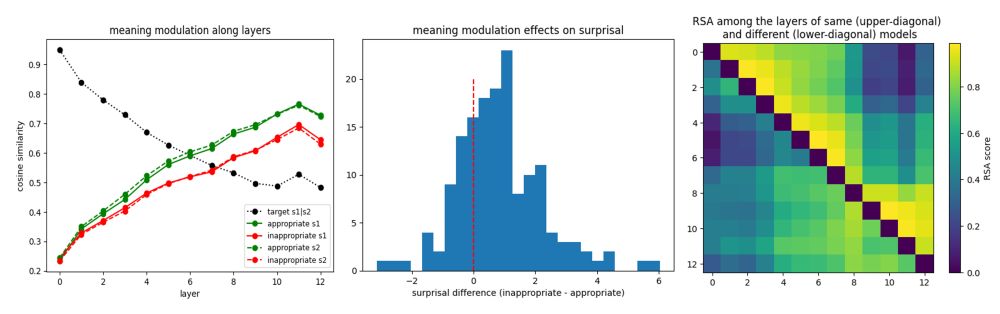

Our new Cerebral Cortex paper shows the left inferior frontal gyrus (BA45) does it automatically, even when task-irrelevant. We used fMRI + computational models.

Congrats Marco Ciapparelli, Marco Marelli & team!

doi.org/10.1093/cerc...

Watch the full presentation here:

www.youtube.com/watch?v=zN7G...

A computational approach to morphological productivity using the Discriminative Lexicon Model

Professor Harald Baayen (University of Tübingen, Germany)

🗓️ September 8, 2025

2:00 PM - 3:30 PM

📍 UniMiB, Room U6-01C, Milan

🔗 Join remotely: meet.google.com/dkj-kzmw-vzt

It's geared toward psychologists & linguists and covers extracting embeddings, predictability measures, and comparing models across languages & modalities (vision). See examples 🧵
