https://bravenewword.unimib.it/

@mariakna.bsky.social, @kathyrastle.bsky.social

How does morphological knowledge serve as a powerful heuristic for vocabulary growth and reading efficiency? How do readers navigate noisy text to learn the meanings of affixes?

www.sciencedirect.com/science/arti...

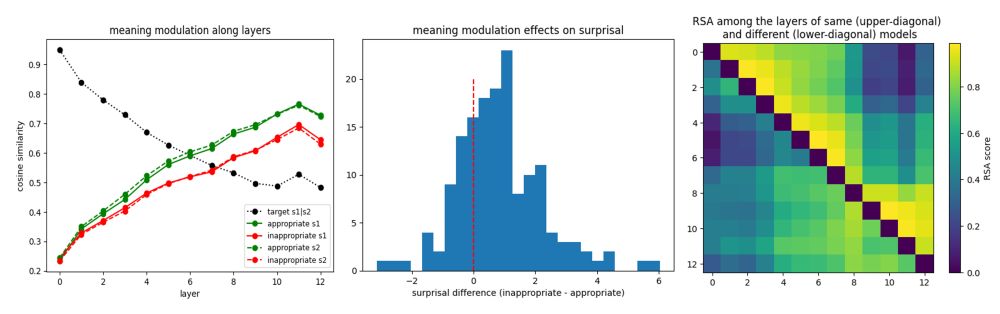

The latest upload features Prof. Giovanni Cassani (Tilburg University), discussing how humans and LLMs interpret novel words in context.

www.youtube.com/channel/UClH...

🗓️ Today, Jan 19, 2:00 PM CET 📍 Bicocca (U6 - Sala Lauree) 🌐 Join online: meet.google.com/suf-ybti-oop

Our new Cerebral Cortex paper shows the left inferior frontal gyrus (BA45) does it automatically, even when task-irrelevant. We used fMRI + computational models.

Congrats Marco Ciapparelli, Marco Marelli & team!

doi.org/10.1093/cerc...

Thrilled to see this article with @ruimata.bsky.social out. We discuss how LLMs can be leveraged to map, clarify, and generate psychological measures and constructs.

Open access article: doi.org/10.1177/0963...

Did you know Germans averaged 53 taboo words, while Brits and Spaniards listed only 16?

Great to see the work of our colleague Simone Sulpizio & Jon Andoni Duñabeitia highlighted! 👏

www.theguardian.com/science/2025...

He'll discuss "Making sense from the parts: What Chinese compounds tell us about reading," exploring how we process ambiguity & meaning consistency

🗓️ 27th Oct ⏰ 2PM (CET) 📍 UniMiB 💻 meet.google.com/zvk-owhv-tfw

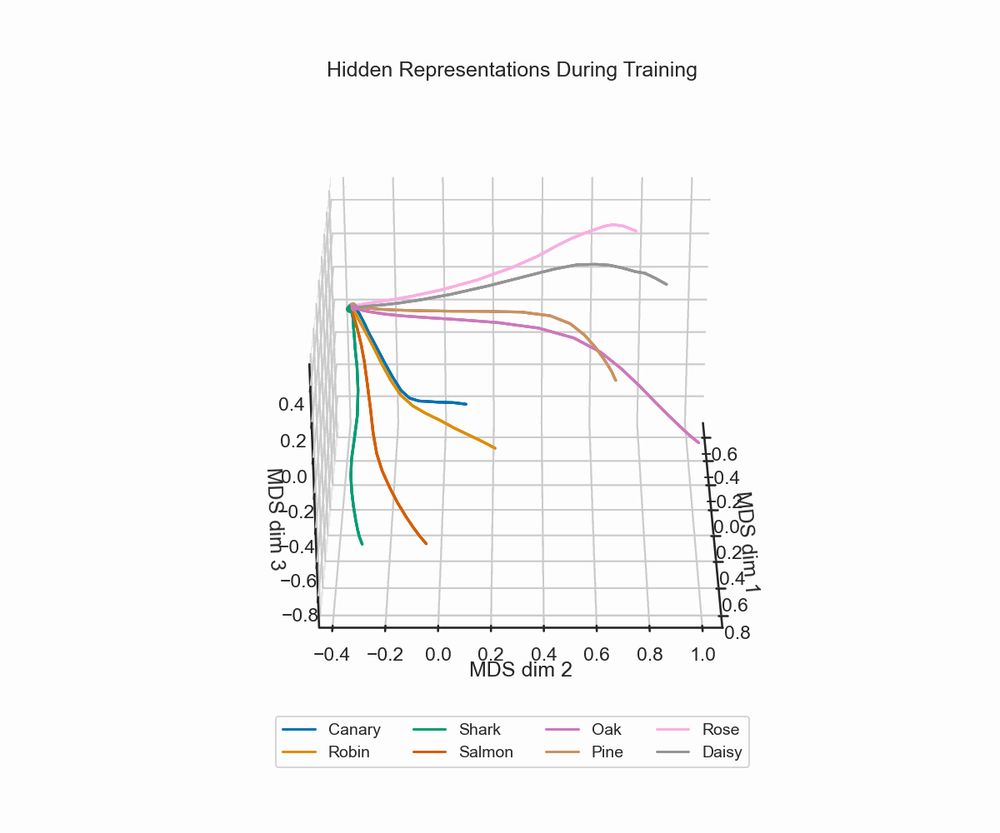

It's geared toward psychologists & linguists and covers extracting embeddings, computing predictability measures, and comparing models across languages & modalities (vision). See examples 🧵

arxiv.org/abs/2502.11856

academic.oup.com/cercor/artic...

w/@mariannabolog.bsky.social is now online & forthcoming in the #ElsevierEncyclopedia of Language & Linguistics

🔍 Theoretical overview, quantification tools, and behavioral evidence on specificity.

👉 Read: urly.it/31c4nm

@abstractionerc.bsky.social

@ruimata.bsky.social and I propose a solution based on a fine-tuned 🤖 LLM (bit.ly/mpnet-pers) and test it for 🎭 personality psychology.

The paper is finally out in @natrevpsych.bsky.social: go.nature.com/4bEaaja

Watch the full presentation here:

www.youtube.com/watch?v=VJTs...

With Marco Marelli (@ercbravenewword.bsky.social), @wwgraves.bsky.social & @carloreve.bsky.social.

doi.org/10.1093/cerc...

Congratulations on a fascinating talk!

Watch the full presentation here:

www.youtube.com/watch?v=zN7G...

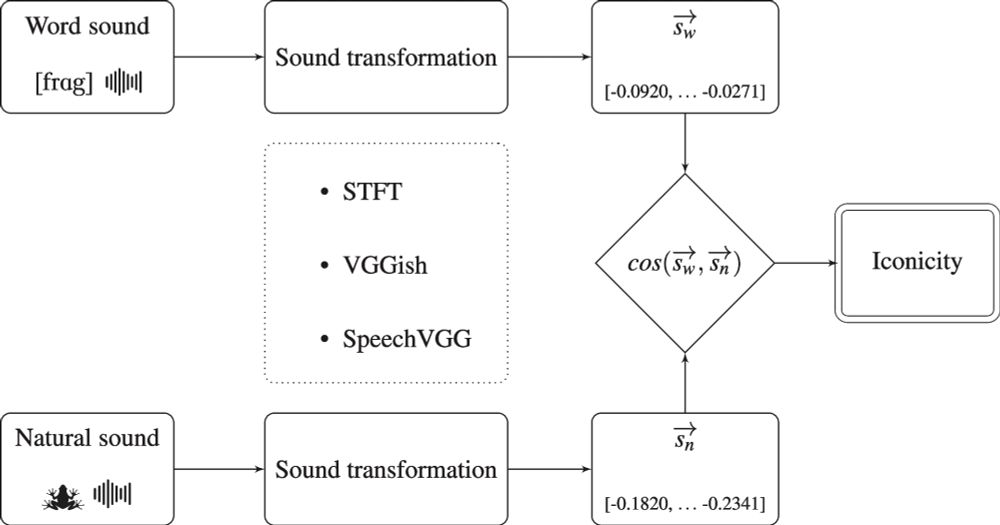

Exploring form-meaning interactions in novel word learning and memory search

Abhilasha Kumar (Assistant Professor, Bowdoin College)

A fantastic opportunity to delve into how we learn new words and retrieve them from memory.

💻 Join remotely: meet.google.com/pay-qcpv-sbf

A computational approach to morphological productivity using the Discriminative Lexicon Model

Professor Harald Baayen (University of Tübingen, Germany)

🗓️ September 8, 2025

2:00 PM - 3:30 PM

📍 UniMiB, Room U6-01C, Milan

🔗 Join remotely: meet.google.com/dkj-kzmw-vzt

slides: shorturl.at/q2iKq

code (with colab support!): github.com/qihongl/demo...

She investigates how meanings are encoded and evolve, combining linguistic and computational approaches.

Her work spans diachronic modeling of lexical change in Mandarin and semantic transparency in LLMs.

🔗 research.polyu.edu.hk/en/publicati...

Read more: link.springer.com/article/10.3...

@andreadevarda.bsky.social

doi.org/10.1111/cogs...
