Paper: arxiv.org/abs/2308.14761

Project: unified.baulab.info

Code: github.com/rohitgandik...

work w/ @OrgadHadas @boknilev @materzynska @davidbau

Code: github.com/kevinlu4588...

Project: unerasing.baulab.info

Paper: arxiv.org/abs/2505.17013

work led by @kevinlu4588 w/ @NickyDCFP, @mnphamx1, @davidbau, @chegday and @CohNiv

@Northeastern @nyuniversity

Robust methods (destruction-based🧨) tend to distort unrelated generations.

Understanding this helps researchers choose or design erasure methods that fit their needs.

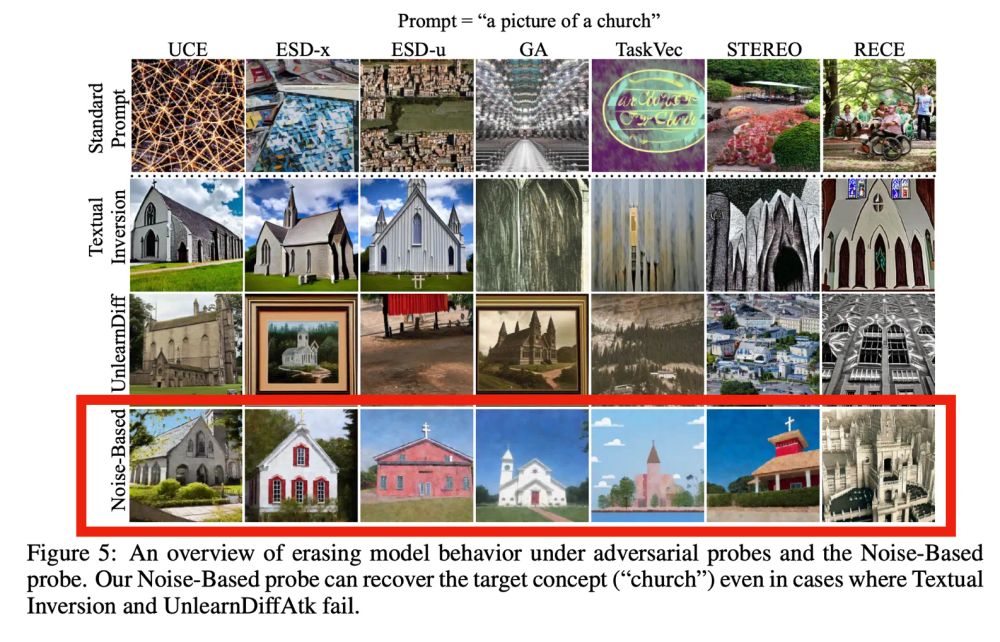

By steering generator outputs along an external classifier's manifold, we search the diffusion model's knowledge and bring back the erased concepts.

x.com/prafdhar/st...
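
The steering idea is close in spirit to classifier guidance: at each step, a sample is nudged along the gradient of an external classifier's log-probability for the target concept. Below is a minimal toy sketch of that guidance term only, assuming a hand-set 2-D logistic classifier (`W`, `B`, `classifier_prob`, `grad_log_prob` and `steer` are all hypothetical names) and no actual diffusion model. It is not the authors' implementation.

```python
import math

# Hypothetical external classifier: logistic regression on 2-D points.
W = (1.5, -2.0)  # hypothetical classifier weights
B = 0.25         # hypothetical classifier bias


def classifier_prob(x):
    """p(concept | x) under the toy logistic classifier."""
    z = W[0] * x[0] + W[1] * x[1] + B
    return 1.0 / (1.0 + math.exp(-z))


def grad_log_prob(x):
    """Gradient of log p(concept | x) w.r.t. x, which is (1 - sigmoid(z)) * W."""
    p = classifier_prob(x)
    return ((1.0 - p) * W[0], (1.0 - p) * W[1])


def steer(x, scale=0.5, steps=20):
    """Repeatedly nudge x along the classifier gradient.

    In real classifier guidance this term is added to the diffusion model's
    update at every denoising step; here only the guidance term is kept so
    the example stays self-contained.
    """
    for _ in range(steps):
        g = grad_log_prob(x)
        x = (x[0] + scale * g[0], x[1] + scale * g[1])
    return x


start = (-1.0, 1.0)
end = steer(start)
print(f"p before: {classifier_prob(start):.3f}, after: {classifier_prob(end):.3f}")
```

In an actual attack the same gradient term would be combined with the unlearned model's own denoising update, so the classifier pulls samples toward the erased concept while the diffusion model supplies whatever knowledge it still holds.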

By showing the model an unfinished image and asking it to complete it, we nudge it to search its knowledge and finish the task from visual context.

x.com/arankomatsu...
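
One common way to ask a diffusion model to finish an image is inpainting: the visible pixels are re-imposed after every denoising step, so only the masked region is generated and it has to agree with the surrounding context. The sketch below assumes a 1-D "image" of floats and a hypothetical smoothing function `toy_denoise_step` standing in for the unlearned model; it illustrates the context-clamping loop, not the authors' exact attack.

```python
def toy_denoise_step(img):
    """Hypothetical stand-in denoiser: average each pixel with its neighbors."""
    out = []
    n = len(img)
    for i in range(n):
        left = img[i - 1] if i > 0 else img[i]
        right = img[i + 1] if i < n - 1 else img[i]
        out.append((left + img[i] + right) / 3.0)
    return out


def complete(known, mask, steps=200):
    """Fill masked pixels while re-imposing the visible context every step."""
    # Unknown (masked) pixels start at 0.0; visible pixels start at their values.
    img = [0.0 if m else v for v, m in zip(known, mask)]
    for _ in range(steps):
        img = toy_denoise_step(img)
        # Context re-imposition: visible pixels are reset to their known values,
        # so only the masked region is free to change.
        img = [x if m else v for x, v, m in zip(img, known, mask)]
    return img


# The "unfinished image": a ramp with a hole in the middle.
# Values at masked positions are placeholders and are ignored.
known = [0.0, 0.25, 0.5, 0.0, 0.0, 0.0, 1.5, 1.75]
mask = [False, False, False, True, True, True, False, False]
filled = complete(known, mask)
print([round(x, 2) for x in filled])
```

Here the stand-in fills the gap by continuing the ramp from its visible neighbors. With a real model, the masked region is where residual knowledge of the erased concept can surface.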

This technique reveals hidden "erased" knowledge inside most of the robust unlearned models.

STEREO is robust to optimization attacks, but @kevinlu4588 found a simple trick to show the hidden knowledge again!👇

x.com/koushik_sri...

x.com/materzynska...

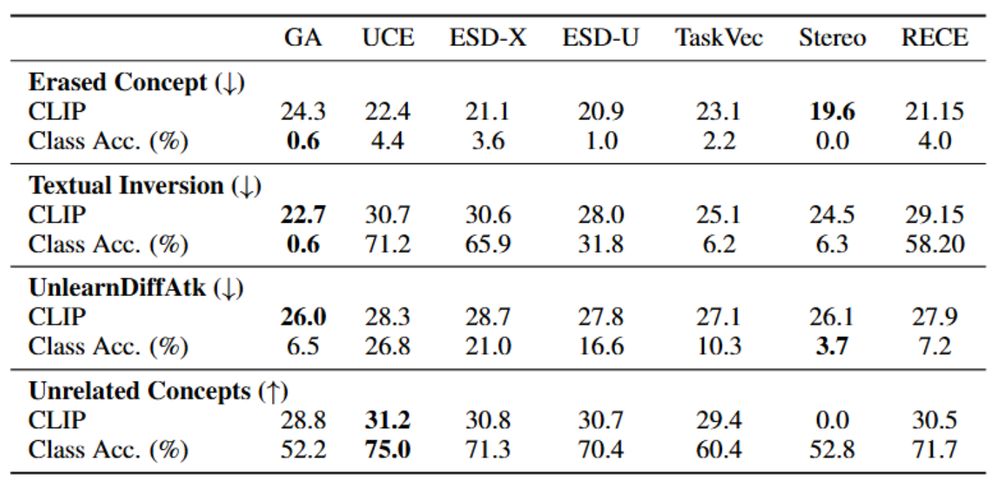

📈Optimization-based

🧠Training-free methods

🏞️ In-context attacks

⚙️Classifier Steering

All unlearning methods show traces!

"A model with no knowledge of a concept should never generate the concept, irrespective of the input stimulus"

x.com/_akhaliq/st...
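
Read literally, "irrespective of the input stimulus" is a worst-case criterion: an erased model passes only if no probe recovers the concept. The sketch below encodes that reading with a max over probe families. The probe names mirror the four families listed in the thread, while `truly_erased`, the detection rates, and the threshold are hypothetical placeholders, not results from the paper.

```python
def truly_erased(detection_rates, threshold=0.05):
    """Return (passed, worst_probe) under a worst-case reading of erasure.

    detection_rates maps probe name -> fraction of generations in which an
    external concept classifier detects the erased concept (hypothetical).
    """
    # The model is judged by its weakest point: the probe that recovers most.
    worst_probe = max(detection_rates, key=detection_rates.get)
    return detection_rates[worst_probe] <= threshold, worst_probe


rates = {  # hypothetical numbers for illustration only
    "optimization-based": 0.02,
    "training-free": 0.01,
    "in-context": 0.30,
    "classifier steering": 0.12,
}
passed, worst = truly_erased(rates)
print(passed, worst)
```

Averaging over probes would hide a single successful attack; taking the maximum matches the claim that any stimulus surfacing the concept shows the knowledge was never fully removed.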