Unified Concept Editing now supports video models, thanks to Mamiglia!

It's amazing how UCE extends to large video models: instant erase/edit (1 sec) compared to standard fine-tuning (>30 mins) ⏱️

Try the code👇

It's all in the first time-step!⏱️

Turns out the concepts needed for diverse outputs are already present in the model - it simply doesn't use them!

Check out our @wacv_official work - we added theoretical evidence👇

x.com/rohitgandik...

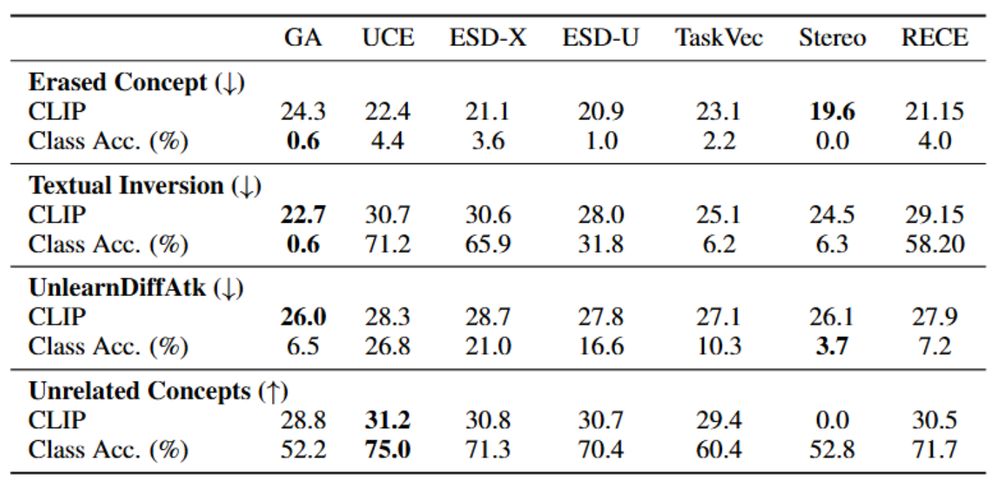

Robust methods (destruction-based🧨) tend to distort unrelated generations.

Understanding this helps researchers choose or design erasure methods that fit their needs.

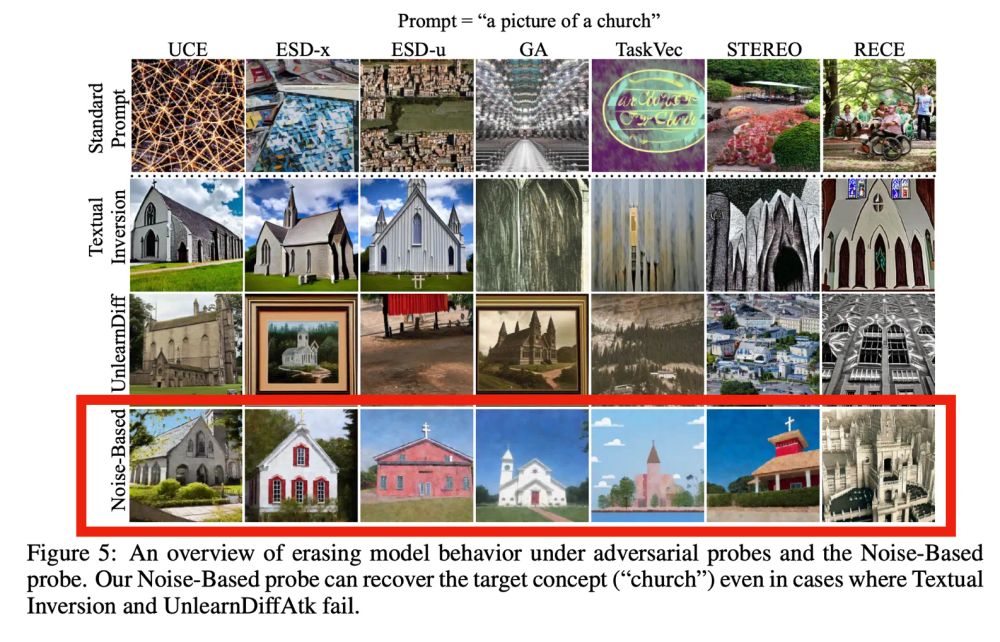

By steering generator outputs along an external classifier's manifold, we search the knowledge of a diffusion model and bring back the erased concepts

x.com/prafdhar/st...

By showing an unfinished image and asking the model to finish it, we nudge it to search through its knowledge and complete the task through visual context

x.com/arankomatsu...

This technique reveals hidden "erased" knowledge inside most of the robust unlearnt models

📈Optimization-based

🧠Training-free methods

🏞️ In-context attacks

⚙️Classifier Steering

All unlearning methods show traces!

What does this mean? We must tread carefully with unlearning research within diffusion models🚨

Here is what we learned 🧵👇 (led by @kevinlu4588)

x.com/kevinlu4588...

Stay tuned for technical details! 🧵

Happy Holidays!🎄✨

@davidbau.bsky.social
