@riverdong.bsky.social

⚖️ Personalization Can Protect Minority Viewpoints!

In diverse-user settings, personalization helps amplify underrepresented perspectives (User 8 in the Figure). Without personalization, models tend to default to majority opinions, sidelining minority viewpoints.

March 5, 2025 at 4:05 PM

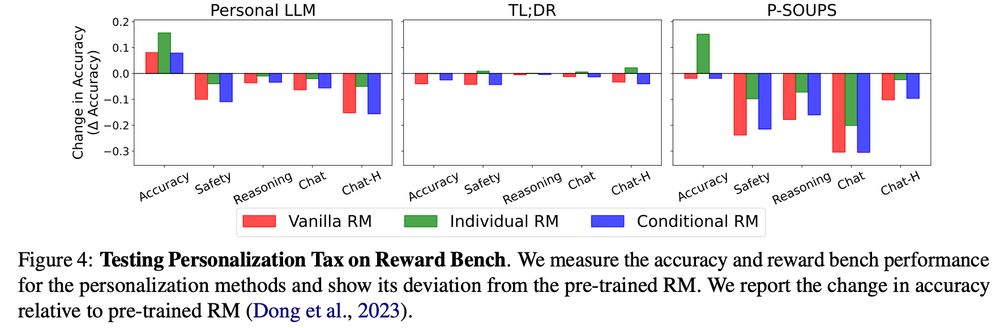

⚠️ Personalization can hurt model safety & reasoning by up to 30%.

March 5, 2025 at 4:04 PM

📊 Key Findings:

(1) Performance can vary by up to 36%

(2) Fine-tuning per user is a strong baseline

(3) Among the recently proposed algorithms, Personalized Reward Modeling (PRM) achieves the best performance, and Group Preference Optimization (GPO) shows fast adaptation to new users.

March 5, 2025 at 4:04 PM
