https://ritchot.me/

https://www.linkedin.com/in/mritchot

"explain the probability of getting an 8 with 2 dice"

gemini.google.com/share/f00d99...
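
For reference, here is the arithmetic behind the prompt. Two fair six-sided dice have 36 equally likely ordered outcomes, and five of them sum to 8: (2,6), (3,5), (4,4), (5,3), (6,2). The probability is therefore 5/36, about 13.9%. Below is a minimal Python sketch that enumerates the outcomes, assuming fair, independent dice. The helper name `prob_sum` is hypothetical.

```python
from fractions import Fraction
from itertools import product

def prob_sum(target, sides=6, dice=2):
    """Exact probability that `dice` fair dice with `sides` faces sum to `target`.

    Assumes fair, independent dice; returns the probability and the matching outcomes.
    """
    # Every ordered outcome, e.g. (1, 1), (1, 2), ..., (6, 6) for two d6
    outcomes = list(product(range(1, sides + 1), repeat=dice))
    hits = [o for o in outcomes if sum(o) == target]
    return Fraction(len(hits), len(outcomes)), hits

p, hits = prob_sum(8)
print(p)     # 5/36
print(hits)  # [(2, 6), (3, 5), (4, 4), (5, 3), (6, 2)]
```

Counting ordered pairs matters here. (3,5) and (5,3) are different outcomes, and treating them as one is the usual mistake.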

ritchot.me/gpt-5-has-co...

www.youtube.com/watch?v=LCEm...

There are many issues with AI & many things that need critique, but pretending it is going away is not helpful.

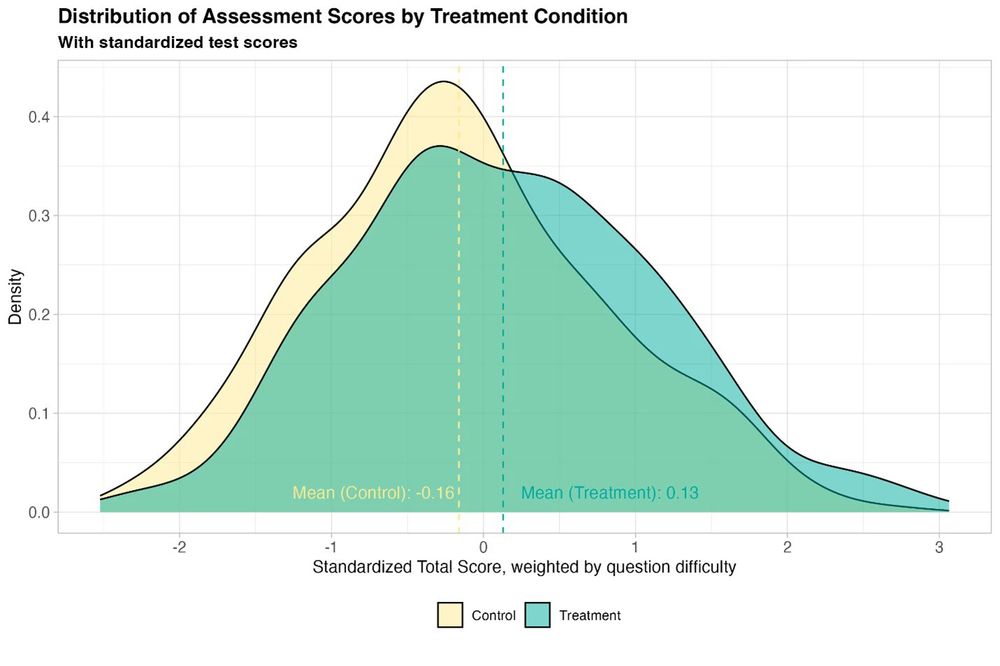

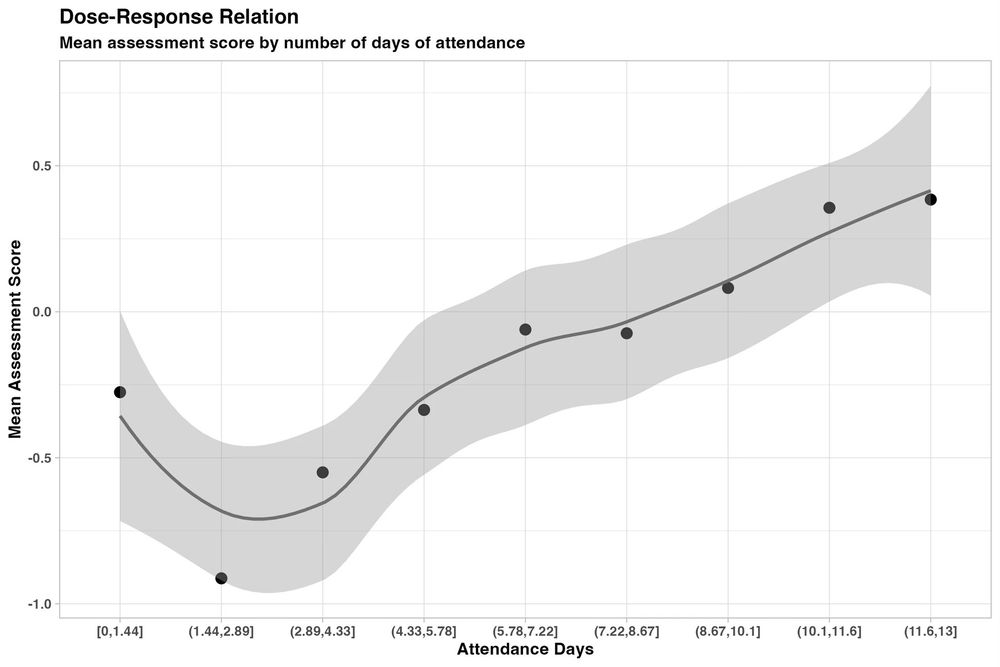

Law students using o1-preview saw the quality of their work improve on most tasks (by up to 28%) & saved 12-28% of their time

There were a few hallucinations, but a RAG-based AI with access to legal material reduced them to human levels

💻 pudding.cool/2025/02/midd...

📽️ www.youtube.com/watch?v=b4zL...

We just released IssueBench – the largest, most realistic benchmark of its kind – to answer this question more robustly than ever before.

Long 🧵with spicy results 👇

www.theintrinsicperspective.com/p/brain-drain

Anthropic's Economic Index initial report released today. Usage is concentrated in software development and technical writing. I was surprised at how many job types are at single-digit percentages.

anfalmushtaq.com/articles/dee...

1. The human working in conjunction with the machine will be increasingly important. AI Agents may not have the biggest new ideas on their own, but they will with a human mind working in tandem...the truly creative will thrive.

The answer appears to be yes - using 3 agents with a structured review process reduced hallucination scores by 96% across 310 test cases. arxiv.org/pdf/2501.13946

For Google, it feels like a big emergency and a huge opportunity. They have a unique position (search, Books, YouTube, etc.) and the models to do something big. We will see

Look at how it hunts down a concept in the literature (& works around problems)

Wrote a bit more about this on my blog here: simonwillison.net/2025/Feb/2/w...

And it helped all students, especially girls who were initially behind.

Researchers secretly added AI-created papers to the exam pool: “We found that 94% of our AI submissions were undetected. The grades awarded to our AI submissions were on average half a grade boundary higher than that achieved by real students”

There is a trade-off between false accusations and detection rates for AI. At a 10% false positive rate, detectors find 80% or less of AI content. At a 1% false positive rate, most find 60% or less.

Don’t trust AI detectors!
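
The trade-off comes from the threshold. A detector assigns each text a score, and anything above a threshold gets flagged as AI. Raising the threshold means fewer human writers are falsely accused, but more AI text slips through. Below is a minimal Python sketch of reading the detection rate off at a fixed false positive rate. The function name and both score lists are hypothetical toy values invented for illustration. They are not from any real detector or from the study behind the figures above.

```python
def detection_rate_at_fpr(human_scores, ai_scores, max_fpr):
    """Return (threshold, detection_rate) for the lowest threshold whose
    false positive rate on human-written text is <= max_fpr.

    A text is flagged as AI when its score >= threshold. The lowest allowed
    threshold gives the highest detection rate at that false positive budget.
    """
    for t in sorted(set(human_scores) | set(ai_scores)):
        fpr = sum(s >= t for s in human_scores) / len(human_scores)
        if fpr <= max_fpr:
            tpr = sum(s >= t for s in ai_scores) / len(ai_scores)
            return t, tpr
    # No candidate threshold is strict enough: flag nothing
    return float("inf"), 0.0

# Hypothetical toy scores (higher = "more AI-like"), NOT real detector output
human_scores_demo = [0.05, 0.10, 0.15, 0.20, 0.25, 0.30, 0.35, 0.40, 0.55, 0.90]
ai_scores_demo = [0.30, 0.45, 0.50, 0.60, 0.70, 0.75, 0.80, 0.85, 0.95, 0.99]

print(detection_rate_at_fpr(human_scores_demo, ai_scores_demo, 0.10))  # (0.6, 0.7)
print(detection_rate_at_fpr(human_scores_demo, ai_scores_demo, 0.0))   # (0.95, 0.2)
```

In this toy data, cutting false accusations from 1 in 10 to zero drops the detection rate from 70% to 20%. That is the same direction of trade-off the post describes.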