Richard D. Morey

@richarddmorey.bsky.social

Statistics, cognitive modelling, and other sundry things. Mastodon: @richarddmorey@tech.lgbt

[I deleted my twitter account]

my knowledge of Hume has unexpectedly come in handy for my fire safety training test

October 31, 2025 at 10:25 AM

Also - contrast b/w the response when I advocate teaching R instead of SPSS -- "No hurry, let's not rush into it" (still waiting) -- & others re: use of LLMs -- "It's inevitable, we're behind; need to implement it ASAP!" -- is telling. Learning to code is freeing. Overhyped LLMs create dependency.

![Excerpt from Guest & van Rooij, 2025:

As Danielle Navarro (2015) says about shortcuts through using inappropriate technology, which chatbots are, we end up digging ourselves into “a very deep hole.” She goes on to explain:

"The business model here is to suck you in during your student days, and then leave you dependent on their tools when you go out into the real world. [...] And you can avoid it: if you make use of packages like R that are open source and free, you never get trapped having to pay exorbitant licensing fees." (pp. 37–38)](https://cdn.bsky.app/img/feed_thumbnail/plain/did:plc:c56dfqq2kropq4ys65h5pvgr/bafkreibuo2eq7v5fc7x3aokbeo224rpcwf52rtrjkgtitnqmkl64g6jory@jpeg)

October 4, 2025 at 9:01 AM

Also - contrast b/w the response when I advocate teaching R instead of SPSS -- "No hurry, let's not rush into it" (still waiting) -- & others re: use of LLMs -- "It's inevitable, we're behind; need it implement it ASAP!" -- is telling. Learning to code is freeing. Overhyped LLMs create dependency.

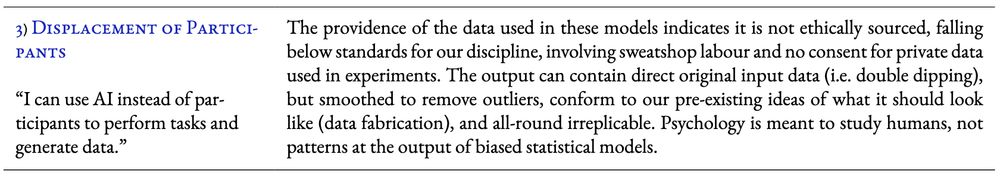

For me this is a hard red line in psychological science. If you advocate the use of "silicon samples" you do not understand what it is we're supposed to be doing (and likely don't understand LLMs, or are a grifter). Luckily I haven't seen much of this among people I'd consider my peer group.

October 4, 2025 at 8:27 AM

It is this bit in particular: that they infer from a small number of simulations. This is basically like saying "the problem isn't variance, it is the presence of big and small observations". But heterogeneity is continuous. If you create a "population" of noncentrality pars 50% just bigger than >

September 25, 2025 at 1:10 PM

as though he knows how they should affect the test; he chooses a test that gives him what he wants. This, to us (S style), is backward. Back to tests in general: how do you tell what tests are good and what tests are *not good*, where you'd want to look for alternatives (or fix them)? (15/x)

![Text from Simonsohn (2025)'s blog post, "[129] P-curve works in practice, but would it work if you dropped a piano on it?":

All else equal we would of course choose the method with higher power, Fisher over Stouffer. But all else is not equal. Stouffer has three properties that are appealing in the context of p-curve analysis:

Property 1 that makes Stouffer appealing

Stouffer is less sensitive to individual extreme p-values, but more sensitive to several moderately low values.](https://cdn.bsky.app/img/feed_thumbnail/plain/did:plc:c56dfqq2kropq4ys65h5pvgr/bafkreig5rdxpad6uyeczfgup7vd7a7wzoayiyemg5r64xqewra25jhcmzq@jpeg)

September 25, 2025 at 10:07 AM
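A minimal Python sketch of Property 1 in the quoted excerpt, using the textbook forms of Fisher's method (-2 Σ ln p_i compared to a chi-square with 2k df) and Stouffer's method (Σ Φ⁻¹(1 - p_i) / √k compared to a standard normal). The two sets of p values are made up for illustration; they are not from Simonsohn's post or from our paper, and the function names are hypothetical.

```python
import math
from statistics import NormalDist

STD = NormalDist()  # standard normal


def chi2_sf_even(x, df):
    """Upper-tail probability of a chi-square with an even number of df.

    Closed form: exp(-x/2) * sum_{i < df/2} (x/2)^i / i!
    """
    half = x / 2
    term, total = 1.0, 1.0
    for i in range(1, df // 2):
        term *= half / i
        total += term
    return math.exp(-half) * total


def fisher_combined(ps):
    """Fisher's method: -2 * sum(log p) ~ chi-square(2k) under the null."""
    stat = -2 * sum(math.log(p) for p in ps)
    return chi2_sf_even(stat, 2 * len(ps))


def stouffer_combined(ps):
    """Stouffer's method: sum(z_i) / sqrt(k) ~ N(0, 1) under the null."""
    # -inv_cdf(p) equals inv_cdf(1 - p) but avoids rounding for tiny p
    z = sum(-STD.inv_cdf(p) for p in ps) / math.sqrt(len(ps))
    return 1 - STD.cdf(z)


one_extreme = [1e-8, 0.5, 0.5, 0.5]  # a single extreme p value
several_moderate = [0.04] * 4        # several moderately low p values

for name, ps in [("one extreme", one_extreme), ("several moderate", several_moderate)]:
    print(f"{name:>16}: Fisher p = {fisher_combined(ps):.2e}, "
          f"Stouffer p = {stouffer_combined(ps):.2e}")
```

Fisher gives the set with one extreme p value the smaller combined p; Stouffer gives the set of several moderate p values the smaller combined p, which is the contrast Property 1 describes.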

of the test, like we did with the P-curve. The W-test is shown in the attached figure. It is a Z test, but modified so that 80% of the alpha in the rejection region has been taken from the tails and moved around Z=0. "Hey, this is bad," you argue to the inventor of the W-test. They respond: (11/x)

September 25, 2025 at 10:07 AM
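A minimal Python sketch of the power comparison, assuming Z ~ N(δ, 1), α = .05, and that the moved alpha is split symmetrically: the remaining 20% stays in two equal tails, and the 80% goes to a band |Z| < c centred on 0. The function names are hypothetical, and this is not the code behind the attached figure.

```python
from statistics import NormalDist

STD = NormalDist()  # standard normal
ALPHA = 0.05
SHARE = 0.8  # assumed: fraction of alpha moved to a band around Z = 0


def z_test_power(delta, alpha=ALPHA):
    """Power of the two-sided Z test when Z ~ N(delta, 1)."""
    t = STD.inv_cdf(1 - alpha / 2)
    return STD.cdf(-t - delta) + (1 - STD.cdf(t - delta))


def w_test_power(delta, alpha=ALPHA, share=SHARE):
    """Power of the hypothetical W-test: two shrunken tails holding
    (1 - share) * alpha, plus a band |Z| < c holding share * alpha."""
    t = STD.inv_cdf(1 - (1 - share) * alpha / 2)
    c = STD.inv_cdf(0.5 + share * alpha / 2)
    tails = STD.cdf(-t - delta) + (1 - STD.cdf(t - delta))
    band = STD.cdf(c - delta) - STD.cdf(-c - delta)
    return tails + band


for delta in (0.0, 0.5, 1.0, 2.0, 3.0):
    print(f"delta={delta}: Z power={z_test_power(delta):.3f}, "
          f"W power={w_test_power(delta):.3f}")
```

At δ = 0 both functions return .05, so the W-test has exactly the nominal size. For δ > 0 its power stays below the Z test's, because 80% of its alpha is spent on a region that the alternative makes less likely, not more.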

"NO" they say. "The power is fine, it's just sub-optimal!" (note that this is a *smaller* power penalty than some of the ones we showed in our P-curve paper). (8/x)

September 25, 2025 at 10:07 AM

But you happen to know that for this scenario, the Z test is the most powerful test. You decide to compare the W-test to the Z test. The blue (upper) curve shows the Z test power relative to W-test power (lower; true power is solid green line). "Huh," you think; "let's get to the bottom of this." (7/x)

September 25, 2025 at 10:07 AM

via simulation, that it works. First you test the Type I error rate using 100 simulations. Seems close to 0.05. So far, so good. Then you try changing the effect size and look at the power. It seems to increase smoothly. The plot below shows the resulting simulated power curve. Seems ok too! (6/x)

September 25, 2025 at 10:07 AM

FWIW, both Clint and I discussed some points in the paper w/Uri (can't tag him b/c he blocked me) in private some time ago. To be clear, neither we nor he is entitled to "first comment" on anything. But if you say you have a policy you're willing to ignore when you feel like it, you don't have that policy.>

September 23, 2025 at 4:45 PM

Where @candicemorey.bsky.social and I are today

August 31, 2025 at 2:40 PM

…and, uh, also Lammy is spending family time with fascists? We think the lack of a fishing license is the issue here?

August 13, 2025 at 6:55 PM

I can’t recall ever seeing “unsure” and “don’t know” as options in the same response scale…

August 13, 2025 at 12:06 PM

No, I didn't sign out, I signed in!

August 13, 2025 at 9:11 AM

Yes, it is indeed a VERY weird suggestion. It is also literally the FIRST paragraph of the interpretation of their test in their app. You don't have to defend it. We critique it because they use it, and because others use it. Did you think we just made it up?

August 10, 2025 at 9:15 PM

This is just untrue. The compound rule was the *definition* of their 2015 evidential value test. They used it in their 2017 paper vs Cuddy. Others use it; I've looked at hundreds of papers. I've added screenshots of literally the first two random papers I pulled from my database.

August 10, 2025 at 4:35 PM

That makes sense to me, since the S of Sq also matters for the t test (e.g. I could blow up the SD by increasing one value). In our case we're combining the test statistics themselves, and we follow Birnbaum (1954) (in the image below, u_i is the p value corresponding to the ith test statistic).

August 10, 2025 at 8:03 AM

but, counterpoint...I discovered papers like this, which are wonderful and nearly impossible to read; unless you're deep in that literature, it takes months to digest the proofs ("how did he get from step 2 to step 3...?!"). That bit makes me happy.

August 9, 2025 at 9:11 AM

There's much, much more in the paper. Here are our conclusions: we suggest not using the p curve at all. Most of the tests are fatally flawed. The original 2014 "evidential value" test is ok, but still not much is known theoretically about combining test statistics across families. 14/?

![admissibility with $F(1,\nu_2)$ statistics and combining across test statistic families.

From a conceptual perspective, it is more challenging to offer concrete recommendations. We recommend that future work in this area focus on directly evaluating the higher-order properties of whole $p$ value distributions, rather than testing a simple transformed average of truncated $p$ values. Re-imaginings should also be based on explicit models of cheating behavior that the developers wish to detect.

Given what is needed to improve the $P$-curve tests, we do not recommend their use in their current form. Their statistical properties are problematic and it is not clear what substantive conclusions they afford. Given the stated purpose of the $P$-curve---evaluating the trustworthiness of scientific literatures---the stakes are too high to use tests with such poor, or poorly-understood, properties.

Users of the $P$-curve procedure may object on practical grounds: the tests seem to agree with what they suspect from a histogram of $p$ values. Although the tests are poorly constructed, the results are still driven by patterns in the data, and these patterns overlap with those one might notice in such a histogram. But if the justification of the method cannot rest on formal principles---and we argue the formal justification is shaky at best---and proponents of the method decide instead to justify conclusions via agreement with visual inspection, this raises the question of why the test was necessary in the first place.

[continued on next image]](https://cdn.bsky.app/img/feed_thumbnail/plain/did:plc:c56dfqq2kropq4ys65h5pvgr/bafkreih37cgobxorxuyq5fkqncki2pbq4cul7csw6xhuleb26wlr7mkkfy@jpeg)

August 8, 2025 at 6:56 PM

It should go without saying, but a significance test should never go from significant to nonsignificant with increasing evidence. But theirs does; in fact, several times! This makes no sense for a test, but it is a result of the fact that...12/?

August 8, 2025 at 6:56 PM

Imagine a set of studies with (significant) chi-square(1) test statistics. We'll fix all the values but 2, then systematically increase those 2 five times (for 6 sets of results). Then we'll look at when their test is significant ("the results show evidential value") 11/?

August 8, 2025 at 6:56 PM
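A minimal Python harness for this kind of monotonicity check. Because the transformation of the test being critiqued is not reproduced in this post, the sketch plugs in a stand-in: a Fisher-style combination of pp = p/.05 values. That stand-in is an assumption for illustration, not their test. The base statistics, the step size, and the names `stand_in_test` and `monotonicity_check` are made up; any candidate test that maps a list of chi-square(1) statistics to a combined p value can be swapped in.

```python
import math

ALPHA = 0.05


def chi2_1_pvalue(x):
    """Upper-tail p value of a chi-square(1) statistic: P(|Z| > sqrt(x))."""
    return math.erfc(math.sqrt(x / 2))


def chi2_sf_even(x, df):
    """Upper-tail probability of chi-square with even df (closed form)."""
    half = x / 2
    term, total = 1.0, 1.0
    for i in range(1, df // 2):
        term *= half / i
        total += term
    return math.exp(-half) * total


def stand_in_test(stats):
    """Hypothetical stand-in: Fisher's method on pp = p / .05 values.

    Returns the combined p value; small values mean 'significant'.
    """
    pps = [chi2_1_pvalue(x) / ALPHA for x in stats]
    stat = -2 * sum(math.log(pp) for pp in pps)
    return chi2_sf_even(stat, 2 * len(pps))


def monotonicity_check(test, base, varied_idx, step, n_steps=5):
    """Increase the statistics at varied_idx n_steps times and record
    (step, combined p, significant?) for each of the n_steps + 1 sets."""
    results = []
    for s in range(n_steps + 1):
        stats = list(base)
        for i in varied_idx:
            stats[i] += s * step
        p = test(stats)
        results.append((s, p, p < ALPHA))
    return results


base = [4.0, 4.5, 5.0, 6.0]  # all significant: chi-square(1) > 3.84
rows = monotonicity_check(stand_in_test, base, varied_idx=[0, 1], step=2.0)
for s, p, sig in rows:
    print(f"step {s}: combined p = {p:.4f}, significant = {sig}")
```

For a sensible test, the significance flags in this table should switch from False to True at most once and never back; a test that flips back to False as the two statistics grow fails the check.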

Take the 2014 "lack of evidential value" test (which we call "LEV"). The transformation is given below. If this is significant (large values of the summed test statistic), then we are supposed to infer a "lack of evidential value" or a "flat(ish) p value distribution". But look carefully. 4/?

August 8, 2025 at 6:56 PM

These tests are distinguished by their transformations. The graphs below show the transformations from significant p values to chi-squared distributed values (given a null) in their 2014 paper. These transformed values are then summed and compared to an appropriate null distribution, which yields an inference. 3/?

August 8, 2025 at 6:56 PM
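A minimal Python sketch of the general recipe, assuming the right-skew version of the 2014 transformation: rescale each significant p to pp = p/.05, take -2 ln(pp), and sum. The other 2014 tests use different transformations, and the function names here are hypothetical. The simulation checks that, when significant p values are uniform, the summed statistic is calibrated against chi-square(2k).

```python
import math
import random

ALPHA = 0.05


def chi2_sf_even(x, df):
    """Upper-tail probability of chi-square with even df (closed form)."""
    half = x / 2
    term, total = 1.0, 1.0
    for i in range(1, df // 2):
        term *= half / i
        total += term
    return math.exp(-half) * total


def right_skew_chi2(pvalues):
    """Keep significant p values, rescale to pp = p / .05, sum -2*log(pp).

    If p is uniform on (0, .05), pp is uniform on (0, 1) and each term is
    chi-square(2), so the sum is chi-square(2k) for k significant results.
    """
    sig = [p for p in pvalues if p < ALPHA]
    if not sig:
        raise ValueError("no significant p values")
    stat = -2 * sum(math.log(p / ALPHA) for p in sig)
    return stat, chi2_sf_even(stat, 2 * len(sig))


def null_rejection_rate(k, n_sets, seed=2):
    """Simulate sets of k p values uniform on (0, .05]; count rejections."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_sets):
        ps = [ALPHA * (1 - rng.random()) for _ in range(k)]
        hits += right_skew_chi2(ps)[1] < ALPHA
    return hits / n_sets


print(right_skew_chi2([0.001, 0.01, 0.02, 0.04, 0.30]))  # 0.30 is dropped
print(null_rejection_rate(5, 20_000))
```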

The P-curve works by transforming signif. P-values in such a way that if the p values were uniform, the transformed values would have a distribution that can easily be summed (in a 2014 paper, chi-square; in a 2015 paper, normals). They develop 5 P-curve tests in total, for different purposes. 2/?

August 8, 2025 at 6:56 PM
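A minimal Python sketch of the normal-based variant, assuming each pp = p/.05 value is mapped to a standard normal quantile and the quantiles are combined Stouffer-style as Σ z_i / √k. Under a uniform null the result is N(0, 1), and strongly negative values point toward right skew; that direction convention is an assumption here. The function name is hypothetical, and this is not the code from their app.

```python
import math
from statistics import NormalDist

STD = NormalDist()
ALPHA = 0.05


def stouffer_pp(pvalues):
    """Convert significant p values to pp = p / .05, map each to a normal
    quantile, and combine as sum(z) / sqrt(k).

    Returns (Z, one-sided p value in the direction of right skew).
    """
    sig = [p for p in pvalues if p < ALPHA]
    if not sig:
        raise ValueError("no significant p values")
    z = sum(STD.inv_cdf(p / ALPHA) for p in sig) / math.sqrt(len(sig))
    return z, STD.cdf(z)


print(stouffer_pp([0.001, 0.004, 0.01, 0.03, 0.2]))  # 0.2 is dropped
```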
