Comparable performance to 405B with 6x fewer parameters ⚡

w/ fully customisable speech and voice personas!

Try it out directly below or use the model weights as you want!

🇮🇳/acc

let your creativity guide you - powered by qwen 2.5 coder 32b ⚡

available on all 254,746 public datasets on the hub!

go check it out today! 🤗

My notes here: simonwillison.net/2024/Nov/29/...

Powered by MLC Web-LLM & XGrammar ⚡

Define a JSON schema, input free text, and get structured data right in your browser - profit!!
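
A rough sketch of the last step in that flow: checking that a model's output really conforms to the schema you defined. This is plain Python with only the standard library, not the Web-LLM or XGrammar API (those constrain decoding inside the browser). The schema, the `conforms` helper, and the sample output string are all hypothetical, and the check covers only a small, flat subset of JSON Schema.

```python
import json

# Hypothetical schema for illustration; not taken from the demo.
SCHEMA = {
    "type": "object",
    "properties": {
        "name": {"type": "string"},
        "age": {"type": "integer"},
    },
    "required": ["name", "age"],
}

# Maps the JSON Schema type names handled here to Python types.
PY_TYPES = {"string": str, "integer": int, "number": (int, float), "boolean": bool}


def conforms(data, schema) -> bool:
    """Check a flat object against a small subset of JSON Schema."""
    if schema.get("type") != "object" or not isinstance(data, dict):
        return False
    if any(key not in data for key in schema.get("required", [])):
        return False
    for key, rule in schema.get("properties", {}).items():
        if key in data:
            value = data[key]
            # bool is a subclass of int in Python, so reject it for non-boolean types
            if isinstance(value, bool) and rule["type"] != "boolean":
                return False
            if not isinstance(value, PY_TYPES[rule["type"]]):
                return False
    return True


# Stand-in for text a model might emit from "Ada is 36 years old."
model_output = '{"name": "Ada", "age": 36}'
print(conforms(json.loads(model_output), SCHEMA))  # True
```

Grammar-constrained decoding aims to make this check pass by construction; a validator like this is still handy as a sanity test on whatever comes back.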

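```
from atproto import FirehoseSubscribeReposClient, parse_subscribe_repos_message

# Print each firehose message's header alongside its parsed body.
def f(m): print(m.header, parse_subscribe_repos_message(m))

FirehoseSubscribeReposClient().start(f)
```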

There was a mistake, a quick follow-up to mitigate it, and an apology. I worked with Daniel for years, and he is one of the people most concerned with the ethical implications of AI. Some replies are at Reddit-level toxicity. We need empathy.

📊 1M public posts from Bluesky's firehose API

🔍 Includes text, metadata, and language predictions

🔬 Perfect to experiment with using ML for Bluesky 🤗

huggingface.co/datasets/blu...

trained ONLY on 1.5T tokens

> massive reductions in KV cache size and improved throughput

> combines Mamba and Attention in a hybrid parallel architecture with a 5:1 ratio and meta-tokens

SmolVLM can be fine-tuned on Google Colab and run on a laptop! Or process millions of documents with a consumer GPU!

> Multilingual - English, Chinese, Korean & Japanese

> Cross platform inference w/ llama.cpp

> Trained on 5 Billion audio tokens

> Qwen 2.5 0.5B LLM backbone

> Trained via HF GPU grants

Run with Transformers, MLX, Transformers.js, MLC Web-LLM, Ollama, Candle and more!

Apache 2.0 licensed codebase - go explore now!

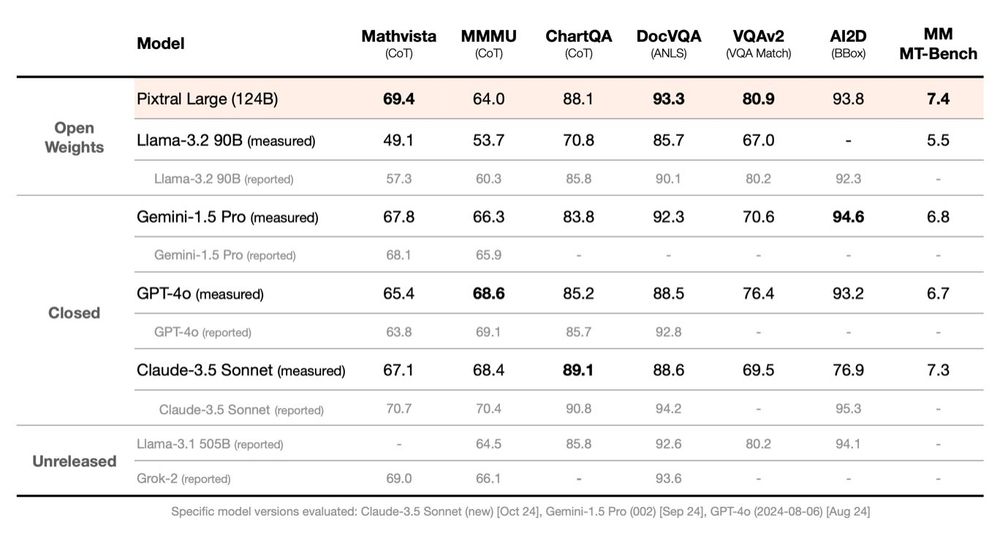

Mistral Pixtral & Instruct Large - ~123B, 128K context, multilingual, json + function calling & open weights

Allen AI Tülu 70B & 8B - competitive with claude 3.5 haiku, beats all major open models like llama 3.1 70B, qwen 2.5 and nemotron

> S0 matches OpenAI's ViT-B/16 in zero-shot performance but is 4.8x faster and 2.8x smaller

> S2 outperforms SigLIP's ViT-B/16 in zero-shot accuracy while being 2.3x faster, 2.1x smaller, and trained on 3x less data

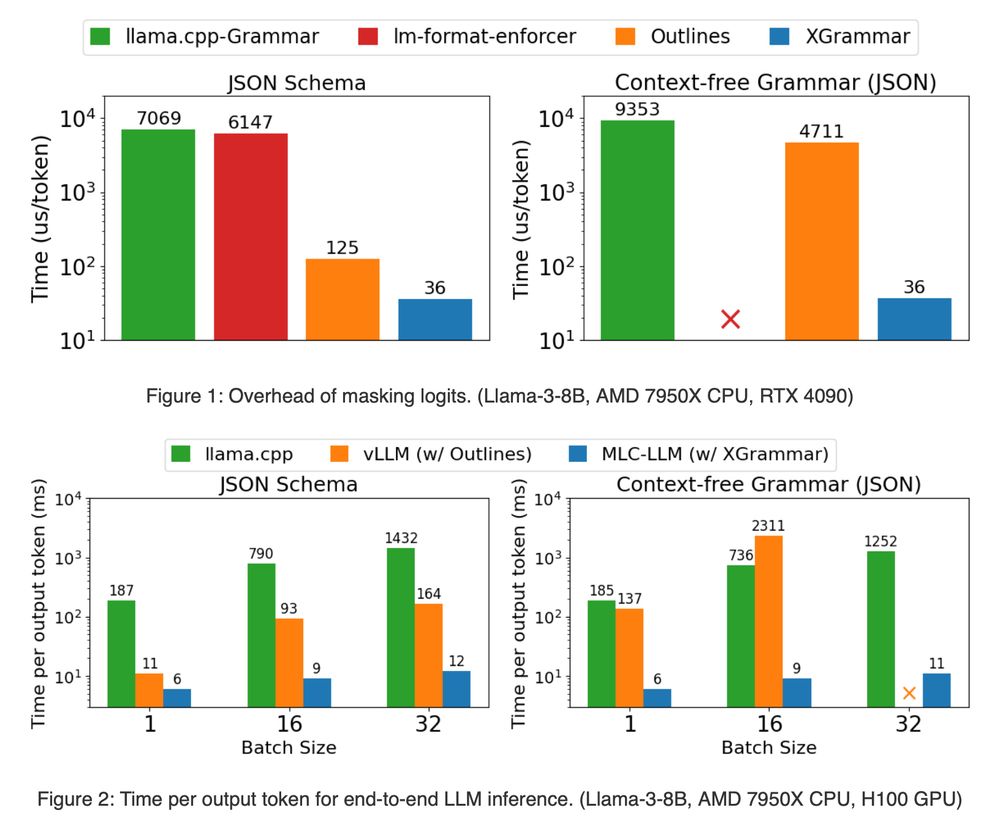

> Accurate JSON/grammar generation

> 3-10x speedup in latency

> 14x faster JSON-schema generation and up to 80x faster CFG-guided generation

GG MLC team is literally the best in the game and slept on! ⚡

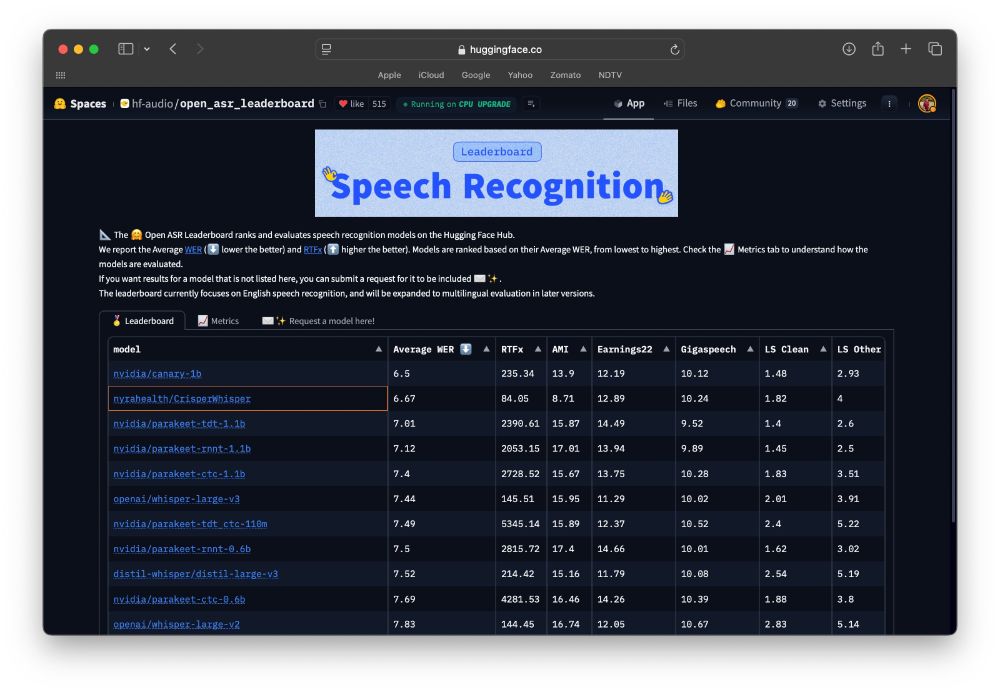

CrisperWhisper aims to transcribe every spoken word exactly as it is, including fillers, pauses, stutters and false starts

Whisper Large V3 fine-tune - beats it by a margin of roughly 1 WER ⚡

hf.co/spaces/hf-au...
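
For context on that WER number, here is a minimal sketch of how word error rate is usually computed: word-level edit distance divided by the number of reference words. The `word_error_rate` helper and both sample transcripts are made up for illustration and are not from the CrisperWhisper evaluation. It also assumes the "1 WER" above means one percentage point, i.e. 0.01 on this 0-to-1 scale.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level Levenshtein distance divided by the reference length."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev[j] is the edit distance between the reference prefix seen so far
    # and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr.append(min(
                prev[j] + 1,         # deletion: reference word missing from hypothesis
                curr[j - 1] + 1,     # insertion: extra word in hypothesis
                prev[j - 1] + cost,  # substitution or exact match
            ))
        prev = curr
    return prev[-1] / len(ref)


# A verbatim reference keeps the filler and the stutter; a "cleaned" transcript drops them.
verbatim = "so um i i think we should go"
cleaned = "so i think we should go"
print(word_error_rate(verbatim, cleaned))  # 0.25 (2 deletions over 8 reference words)
```

Scored against a verbatim reference, a transcript that silently drops "um" and the repeated "i" gets penalised, which is why a verbatim-focused model can come out ahead on this kind of benchmark.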

Here's a quick snippet of it searching the web for the right documentation, creating the JS files plus the necessary HTML all whilst handling Auth too ⚡

Pixtral Large: huggingface.co/mistralai/Pi...

Mistral Large: huggingface.co/mistralai/Mi...

> Qwen 2.5 Coder Artifacts

> Flux Kolors Character

> X Portrait

> Text Behind Image 🤯

> DimensionX

> MagicQuill

> JanusFlow 1.3B

> Netflix Recommendation

Check them out at hf.co/spaces 🏃

> Arena Hard: GPT4o (84.9) vs Athene v2 (77.9) vs L3.1 405B (69.3)

> Bigcode-Bench Hard: 30.8 vs 31.4 vs 26.4

> MATH: 76.6 vs 83 vs 73.8

Open science ftw! ⚡

> Pure language modeling approach to TTS

> Zero-shot voice cloning

> LLaMa architecture w/ Audio tokens (WavTokenizer)

> BONUS: Works on-device w/ llama.cpp ⚡

images/text input, text output. Think multimodal ChatGPT!

We release 2 sizes: 80B🐳 & 9B🐿️

Read: https://huggingface.co/blog/idefics

Try: https://huggingface.co/spaces/HuggingFaceM4/idefics_playground
