Website: rajghugare19.github.io/builderbench...

Paper: arxiv.org/abs/2510.06288

Code: github.com/rajghugare19...

With amazing collaborators Catherine Ji, Kathryn Wantlin, Jin Schofield, @ben-eysenbach.bsky.social.

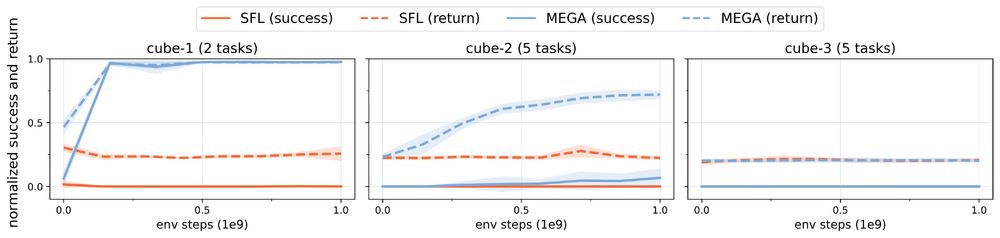

Happy to share BuilderBench, a benchmark to accelerate research in pre-training that centers learning from experience.

Paper -> arxiv.org/abs/2505.23527

Code -> github.com/Princeton-RL...

Project Page -> rajghugare19.github.io/nf4rl/