Google DeepMind

Paris

arxiv.org/abs/2402.05468

Our end-to-end method combines a regression objective, a classification objective, and the autoencoder loss.

We see it as "building a bridge" between these different problems.

8/8
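A toy Python sketch of how the three terms could be summed for a single scalar target. The softmax encoder, the weighted-average decoder μ, the cross-entropy form and the weights `w_reg`, `w_cls`, `w_ae` are our own assumptions for illustration, not the exact objective from the paper:

```python
import math

def encode(y, centers, eps=1.0):
    # Soft assignment of target y to the k centers: softmax of negative squared distances.
    logits = [-(y - c) ** 2 / eps for c in centers]
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(l - m) for l in logits]
    total = sum(exps)
    return [e / total for e in exps]

def decode(probs, centers):
    # Assumed decoder mu: probability-weighted average of the centers.
    return sum(p * c for p, c in zip(probs, centers))

def cross_entropy(target, pred, tiny=1e-12):
    # Cross-entropy between the encoded target and the predicted distribution.
    return -sum(t * math.log(max(p, tiny)) for t, p in zip(target, pred))

def end_to_end_loss(y, pred_probs, centers, eps=1.0, w_reg=1.0, w_cls=1.0, w_ae=1.0):
    """Hypothetical weighted sum of the three terms for one example.

    pred_probs: the model's predicted distribution over the k centers.
    w_reg, w_cls, w_ae: illustrative weights, not values from the paper.
    """
    target = encode(y, centers, eps)
    reg = (y - decode(pred_probs, centers)) ** 2  # regression term
    cls = cross_entropy(target, pred_probs)       # classification term
    ae = (y - decode(target, centers)) ** 2       # autoencoder term
    return w_reg * reg + w_cls * cls + w_ae * ae
```

Setting one of the weights to 0 drops that term from the sum.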

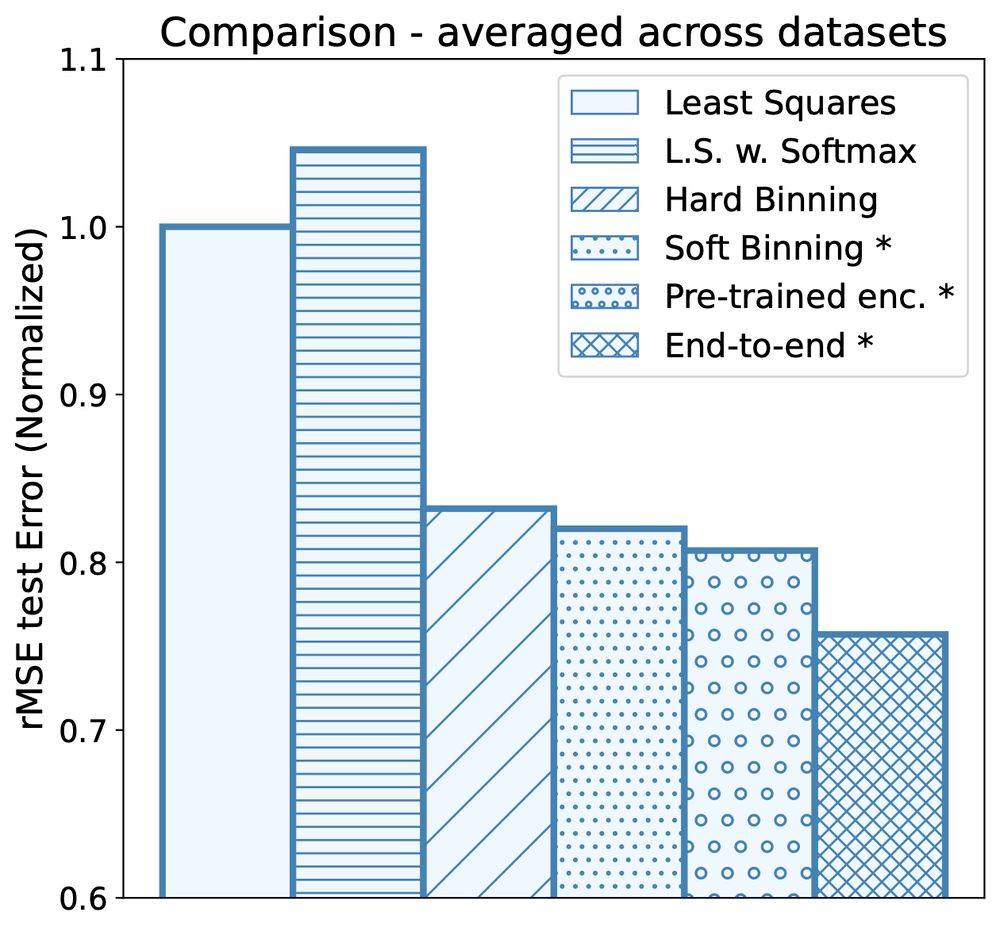

We compare different training methods over 8 datasets, with our full end-to-end method improving on the least-squares baseline error by up to 25%.

7/8

e.g. use a softmax over the (negative) distances to k different centers

The encoder and its associated decoder μ (in blue) can be trained on an autoencoder loss.

5/8
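A minimal Python sketch of this encoder/decoder pair for a scalar target, assuming squared distances with a temperature `eps`, a decoder μ that returns the probability-weighted average of the centers, and a mean squared reconstruction error as the autoencoder loss. These are illustrative choices, not necessarily the paper's exact ones:

```python
import math

def encode(y, centers, eps=1.0):
    # Soft assignment: softmax(-(y - c_j)^2 / eps), so nearer centers get more mass.
    logits = [-(y - c) ** 2 / eps for c in centers]
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(l - m) for l in logits]
    total = sum(exps)
    return [e / total for e in exps]

def decode(probs, centers):
    # Assumed decoder mu: probability-weighted average of the centers.
    return sum(p * c for p, c in zip(probs, centers))

def autoencoder_loss(ys, centers, eps=1.0):
    # Mean squared reconstruction error of y -> encode -> decode.
    errs = [(y - decode(encode(y, centers, eps), centers)) ** 2 for y in ys]
    return sum(errs) / len(errs)
```

In this sketch the centers are the free parameters: the loss is near zero when they sit on the data and `eps` is small, and with a single center it reduces to the squared spread of the targets around that center.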

It seems strange, but it's been shown to work well in many settings, even for RL applications.

3/8
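Assuming "it" refers to the common trick of recasting regression as classification (discretize the target range into bins, train with softmax cross-entropy, then read a real value back out), a minimal Python sketch. The equal-width bins and the expected-bin-center readout are our illustrative choices, not necessarily those used in the settings referred to above:

```python
import math

def make_bins(lo, hi, k):
    # k equal-width bins on [lo, hi]; return their centers.
    width = (hi - lo) / k
    return [lo + (i + 0.5) * width for i in range(k)]

def to_class(y, lo, hi, k):
    # Index of the bin containing y (values outside [lo, hi] are clipped).
    i = int((y - lo) / (hi - lo) * k)
    return min(max(i, 0), k - 1)

def cross_entropy_loss(logits, label):
    # Standard softmax cross-entropy for a single example: log-sum-exp minus the true logit.
    m = max(logits)
    log_z = m + math.log(sum(math.exp(l - m) for l in logits))
    return log_z - logits[label]

def predict_value(logits, centers):
    # Map class scores back to a real-valued prediction: the expected bin center.
    m = max(logits)
    exps = [math.exp(l - m) for l in logits]
    z = sum(exps)
    return sum(e / z * c for e, c in zip(exps, centers))
```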

Adding to the discussion on least-squares vs. cross-entropy, i.e. regression vs. classification formulations of supervised problems!

A thread on how to bridge these problems: