❓Are we actually capturing the bubble of risk for cybersecurity evals? Not really! Adversaries can modify agents by a small amount and get massive gains.

www.polarislab.org/ai-law-track...

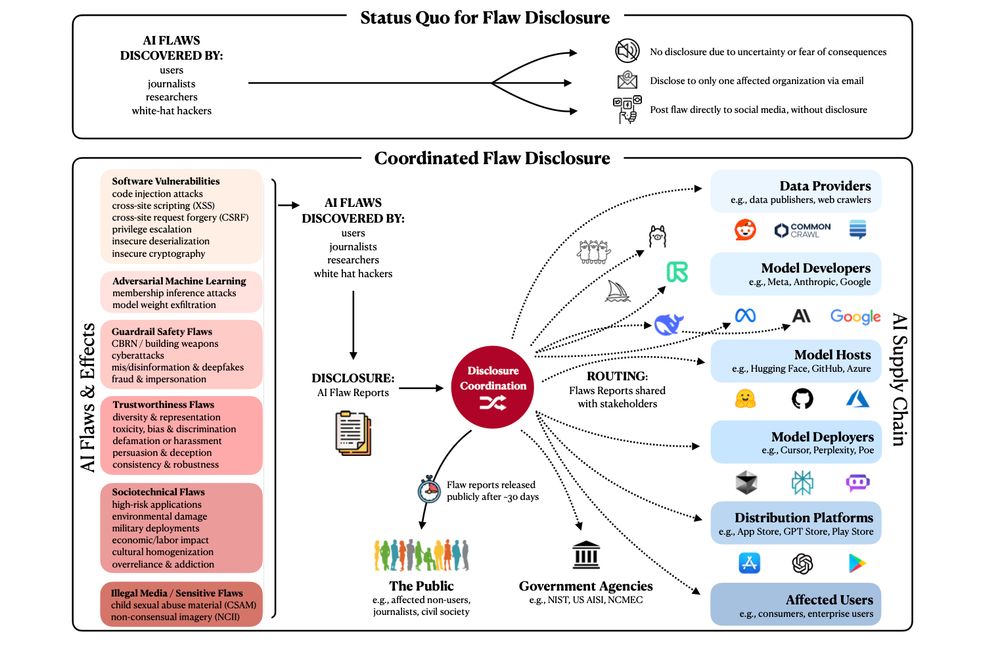

Our new paper, “In House Evaluation is Not Enough,” has 3 calls to action to empower evaluators:

1️⃣ Standardized AI flaw reports

2️⃣ AI flaw disclosure programs + safe harbors

3️⃣ A coordination center for transferable AI flaws.

1/🧵

#oumi #opensource #collaboration

Can VLMs do difficult reasoning tasks? Using a new dataset for evaluating Simple-to-Hard generalization (a form of OOD generalization), we study how to mitigate the dreaded "modality gap" between a VLM and its base LLM.

(note: the poster, Simon Park, applied to PhD programs this spring)

@xiamengzhou.bsky.social (she's also on the job market!). tinyurl.com/pepcynaxFully
