The TxT360 dedup pipeline can be recreated and used for other datasets. We include our tips and tricks in a tell-all write-up in the release blog:

llm360-txt360.hf.space

huggingface.co/spaces/LLM36...

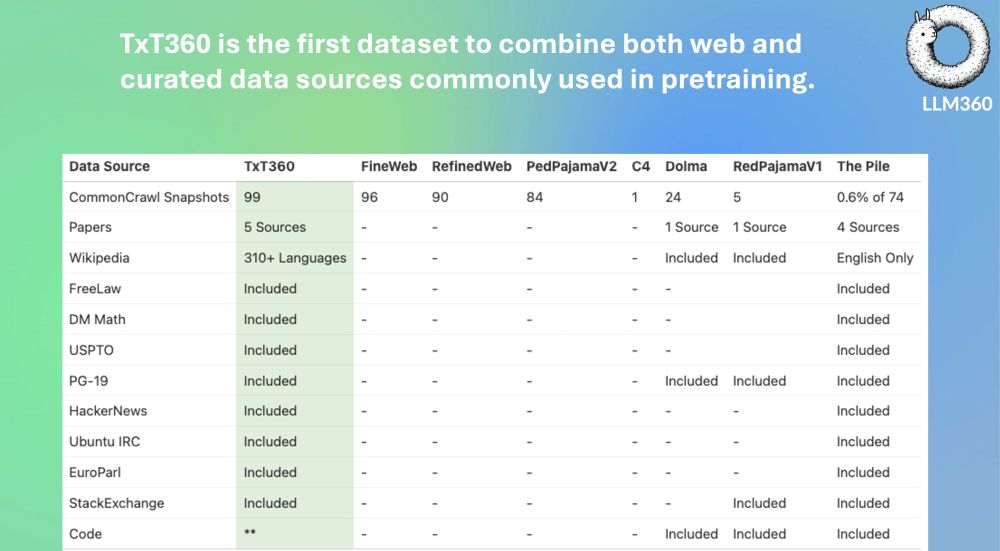

High-quality data is the first step toward better open-source models... and we are excited to join the party by contributing the first globally deduplicated dataset containing 5.7T tokens!

TxT360: a globally deduplicated dataset for LLM pretraining

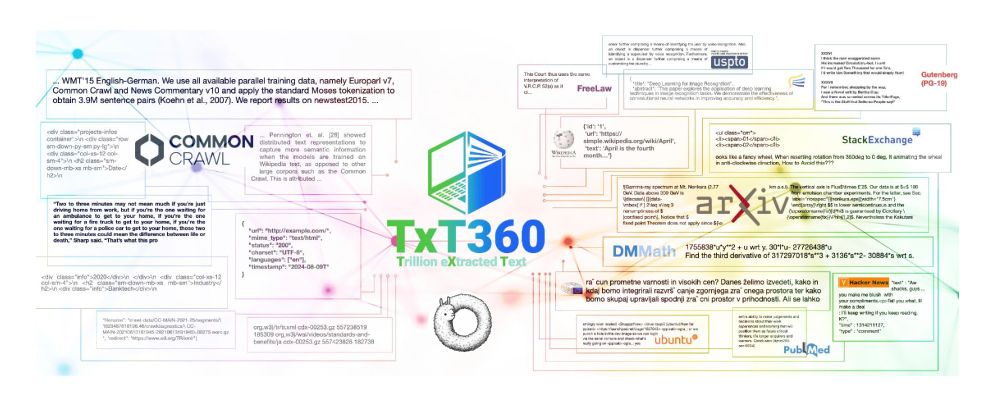

🌐 99 Common Crawls

📘 14 Curated Sources

👨‍🍳 recipe to easily adjust data weighting and train the most performant models

Dataset:

huggingface.co/datasets/LLM...

Blog:

llm360-txt360.hf.space

Please let us know if we missed you or if you'd like to be added!

go.bsky.app/FELkyDr
