Interested in robustness at scale and reasoning.

How does LLM training loss translate to downstream performance?

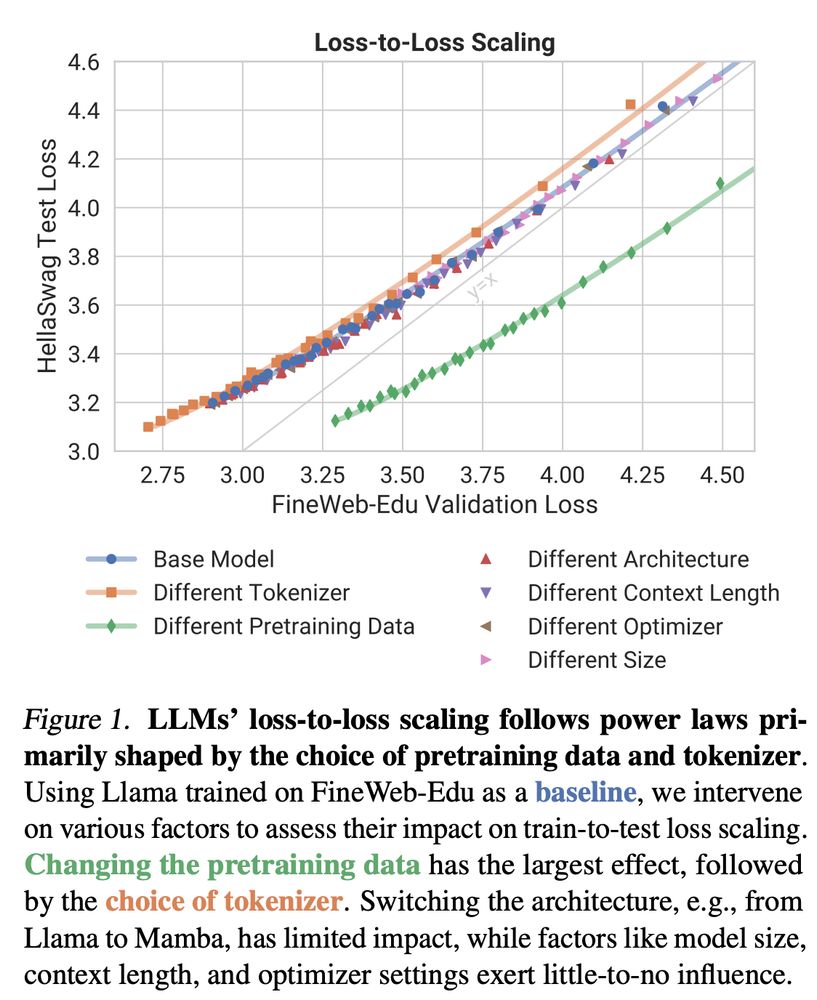

We show that pretraining data and tokenizer shape loss-to-loss scaling, while architecture and other factors play a surprisingly minor role!

brendel-group.github.io/llm-line/ 🧵1/8
