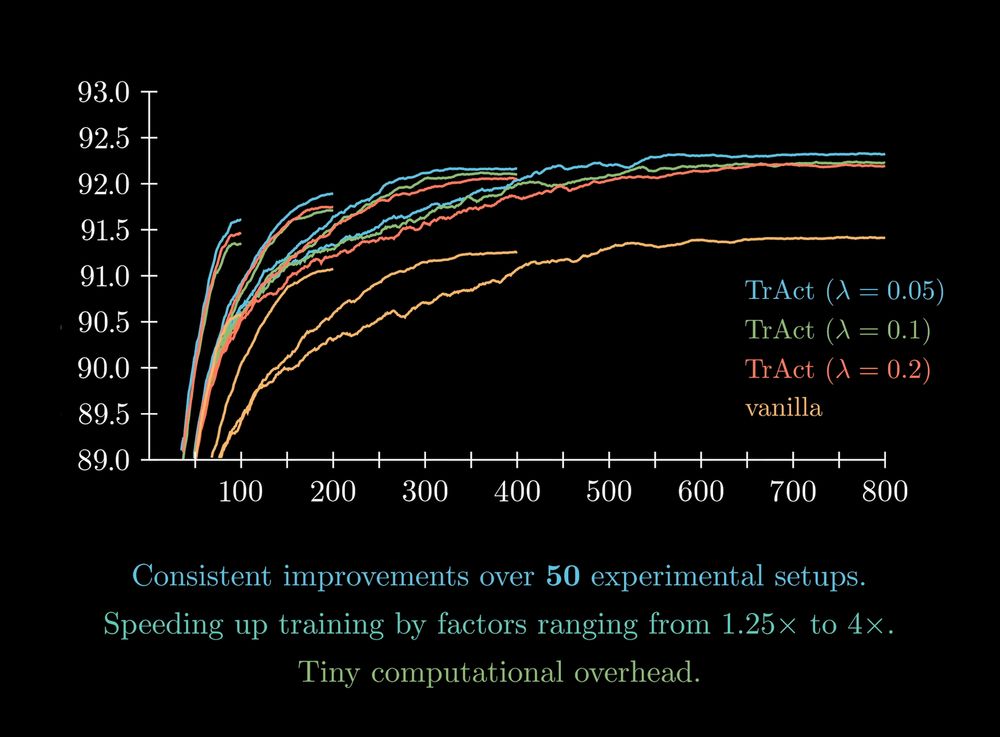

We benchmark TrAct on a suite of 50 experimental settings.

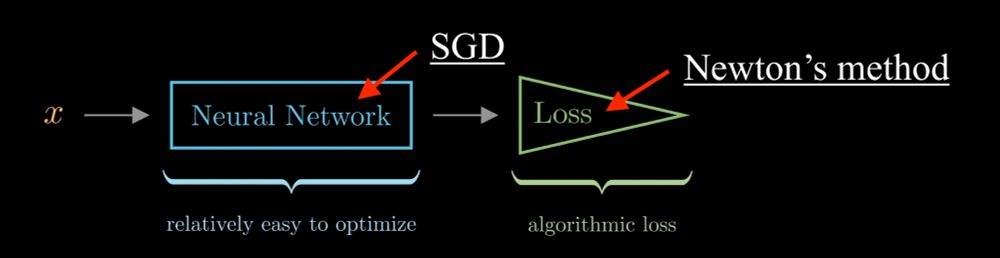

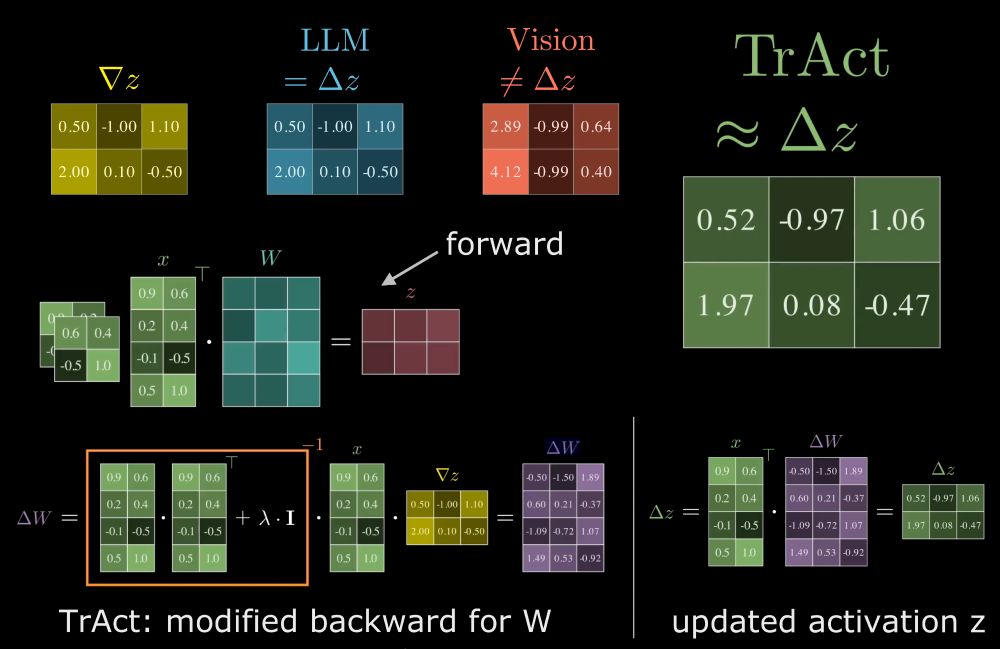

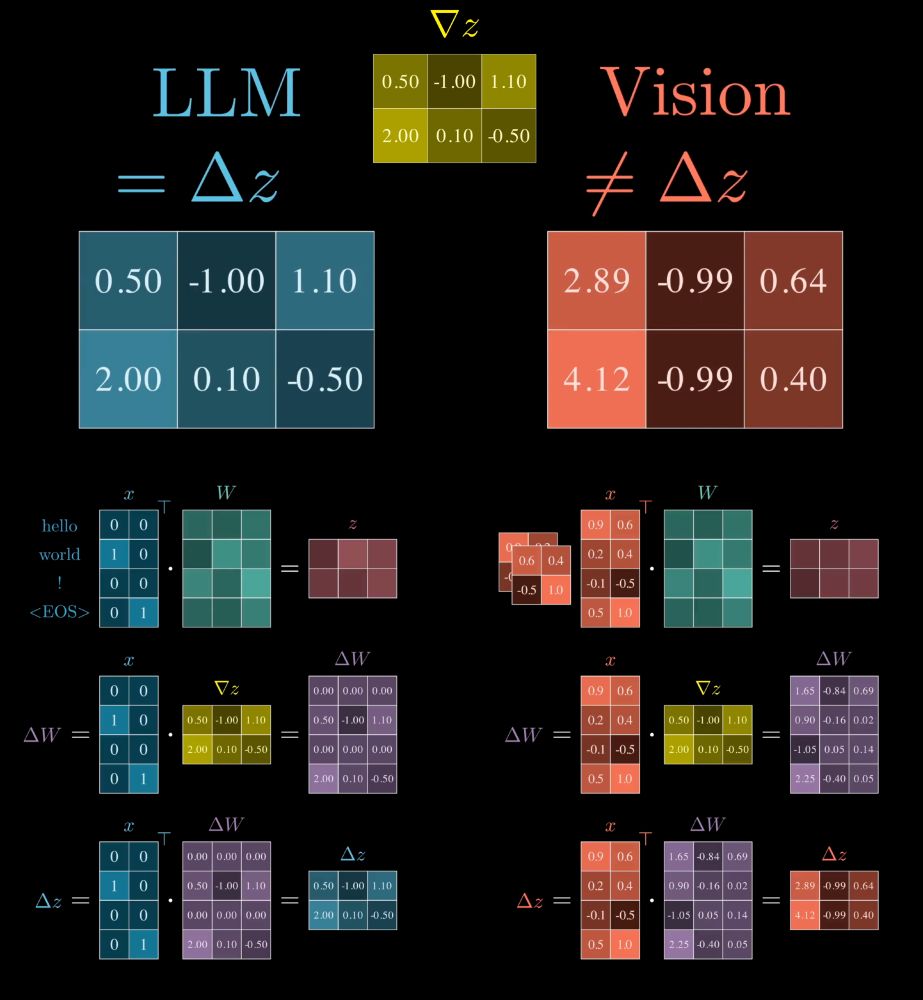

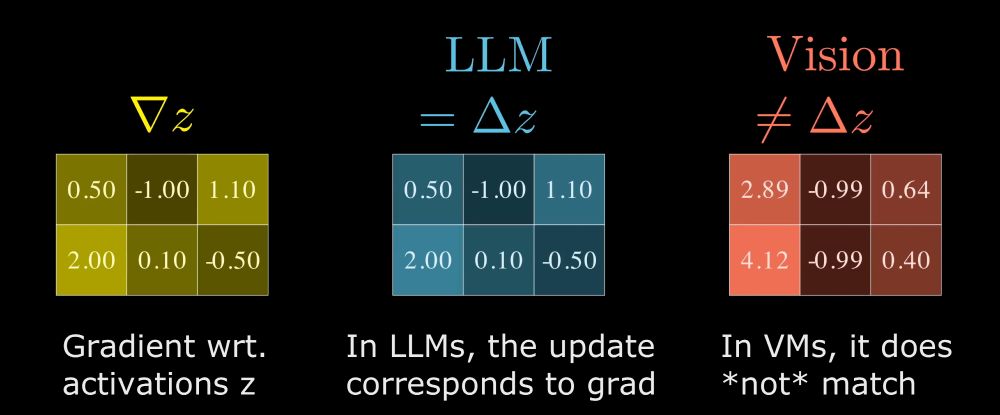

* In LLMs, we update the embeddings (i.e., the activations) directly.

* In vision models, however, we update the *weights* of the first layer, which changes the activations (i.e., the embeddings) only indirectly.
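
To make the contrast in the two bullets concrete, here is a minimal sketch in plain Python. It is an illustration under simple assumptions (one sample, a linear first layer `z = W x`, vanilla SGD) and is not code from the paper. The function names, learning rate, and toy values are all hypothetical.

```python
# Illustrative sketch only (not the paper's code): one SGD step on an
# embedding vector versus one SGD step on first-layer weights.

def sgd_embedding_step(embedding, grad, lr):
    """LLM-style: the embedding is itself the parameter, so it moves by -lr * grad."""
    return [e - lr * g for e, g in zip(embedding, grad)]

def matvec(W, x):
    """Compute the first-layer activation z = W x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def sgd_first_layer_step(W, x, grad_z, lr):
    """Vision-style: the parameter is W, and dL/dW = outer(grad_z, x)."""
    return [[w - lr * g * xi for w, xi in zip(row, x)]
            for row, g in zip(W, grad_z)]

lr = 0.1
grad = [1.0, -2.0]  # same gradient w.r.t. the embedding / activation in both cases

# Case 1: update the embedding directly.
embedding = [0.5, 0.5]
new_embedding = sgd_embedding_step(embedding, grad, lr)
embedding_change = [n - o for n, o in zip(new_embedding, embedding)]

# Case 2: update the weights, then recompute the activation for the same input.
W = [[0.1, 0.2, 0.3], [0.4, 0.5, 0.6]]
x = [1.0, 2.0, 2.0]  # squared norm of x is 9
z_old = matvec(W, x)
W_new = sgd_first_layer_step(W, x, grad, lr)
z_new = matvec(W_new, x)
activation_change = [n - o for n, o in zip(z_new, z_old)]

print("embedding change: ", embedding_change)
print("activation change:", activation_change)
```

In this toy setup the embedding moves by exactly `-lr * grad`, while the first-layer activation moves by `-lr * grad` scaled by the squared norm of the input `x` (9 here), so the effective step on the activation depends on the input rather than only on the gradient.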

Paper link 📜: arxiv.org/abs/2410.23970

Video link 🎥: youtu.be/ZjTAjjxbkRY

🧵
