www.technologyreview.com/2024/12/20/1...

@hildekuehne.bsky.social

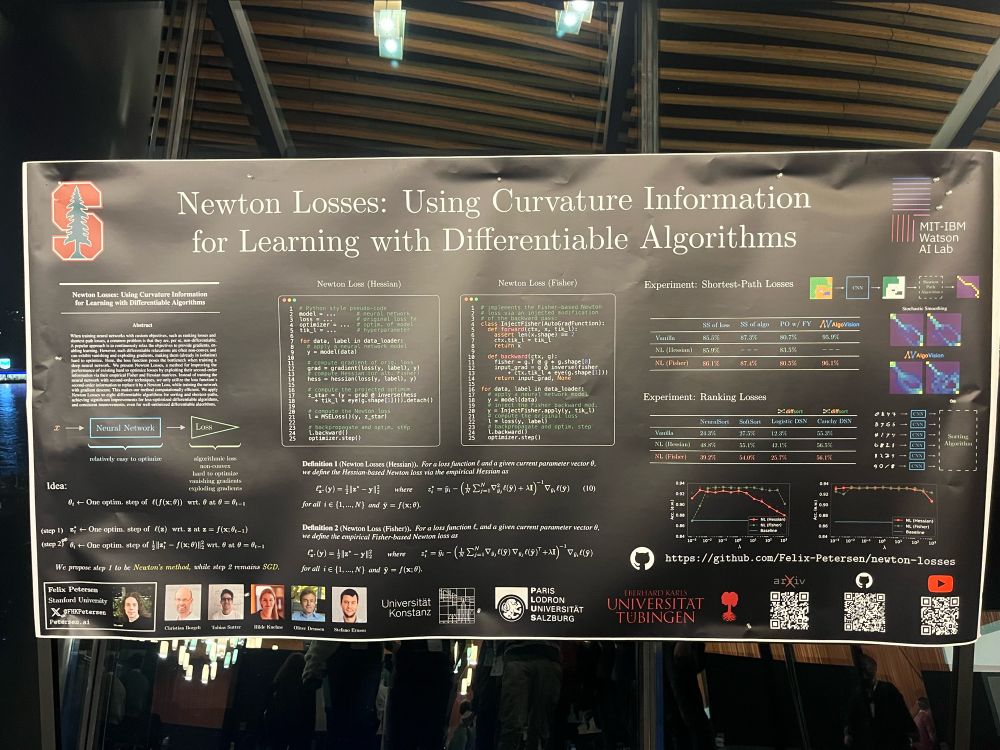

Oral on Friday at 10am PT

neurips.cc/virtual/2024...

Paper link 📜: arxiv.org/abs/2410.23970

Video link 🎥: youtu.be/ZjTAjjxbkRY

🧵

Paper link 📜: arxiv.org/abs/2410.19055