Data management and NLP/LLMs for information quality.

https://www.eurecom.fr/~papotti/

Wider or longer output tables = tougher for all LLMs! 🧨

From Llama 3 and Qwen to GPT-4, no LLM goes above 25% accuracy on our stricter measure.

We argue this isn’t just a technical shift — it’s an epistemological transformation. Who gets to define what's true when everyone is the fact-checker?

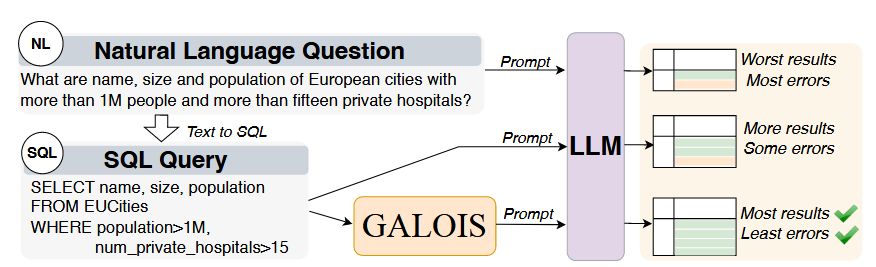

Result: Significant quality gains (+29%) without prohibitive costs. Works across LLMs & for internal knowledge + in-context data (RAG-like setup, reported results in the figure). ✅

🔹 Designing physical operators tailored to LLM interaction nuances (e.g., Table-Scan vs Key-Scan in the figure).

🔹 Rethinking logical optimization (like pushdowns) for a cost/quality trade-off.

Our results show standard techniques like predicate pushdown can even reduce result quality by making LLM prompts more complex to process accurately. 🤔
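To make the pushdown trade-off concrete, here is a minimal Python sketch, assuming a SELECT ... WHERE query over the LLM's internal knowledge is compiled into a single prompt. `Query`, `build_prompt` and `filter_locally` are hypothetical names for illustration, not the paper's API. The rows are made-up stand-ins for a parsed LLM answer.

```python
# Hypothetical sketch: compiling one SELECT ... WHERE query over an LLM's
# internal knowledge into a prompt, with or without predicate pushdown.
# Query, build_prompt and filter_locally are illustrative names, not the paper's API.
from dataclasses import dataclass
from typing import Optional


@dataclass
class Query:
    table: str                       # entity type to enumerate, e.g. "countries"
    columns: list                    # projected attributes
    predicate: Optional[str] = None  # WHERE clause rendered in natural language


def build_prompt(q: Query, pushdown: bool) -> str:
    """Render the query as a single LLM prompt.

    With pushdown, the filter is folded into the prompt, so the LLM must
    enumerate and filter in one step (a more complex prompt). Without pushdown,
    the LLM only enumerates, and the engine filters the returned tuples.
    """
    prompt = f"List all {q.table} with their {', '.join(q.columns)}."
    if pushdown and q.predicate:
        prompt += f" Only include {q.table} where {q.predicate}."
    return prompt + " Answer with one row per line, values separated by ' | '."


def filter_locally(rows: list, column: str, threshold: float) -> list:
    """Engine-side filter, used when the predicate is NOT pushed into the prompt."""
    return [r for r in rows if float(r[column]) > threshold]


q = Query("countries", ["name", "population"], "population is greater than 100 million")
print(build_prompt(q, pushdown=True))
print(build_prompt(q, pushdown=False))

# Stub rows standing in for a parsed LLM answer (made-up values for illustration).
rows = [{"name": "A", "population": "150"}, {"name": "B", "population": "40"}]
print(filter_locally(rows, "population", 100))  # population in millions
```

Without pushdown, the engine does the filtering at no extra LLM cost but may retrieve more rows. With pushdown, the prompt asks for less output but, as the result above suggests, it can be harder for the LLM to answer accurately.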

As systems increasingly use declarative interfaces on LLMs, traditional query optimization falls short.

Details 👇

⏰ 11:00 Session B

Our work, "An LLM-Based Approach for Insight Generation in Data Analysis," uses LLMs to automatically find insights in databases, outperforming baselines in both insightfulness and correctness.

Paper: arxiv.org/abs/2503.11664

Details 👇

1️⃣ Zero-Shot Learning (ZSL) +/- general-purpose reasoning

2️⃣ Supervised Fine Tuning (SFT) +/- task-specific reasoning traces

3️⃣ Reinforcement Learning (RL) with EXecution accuracy (EX) vs. our fine-grained rewards

4️⃣ Combined SFT+RL approach
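Item 3️⃣ contrasts RL with execution accuracy (EX) against finer-grained rewards. The Python sketch below shows two reward functions. The first is a binary EX reward. It assumes EX compares the rows returned by the predicted and gold queries as sets, ignoring row order. The second is a hypothetical partial-credit reward, included only to show what a denser signal looks like. It is not the paper's fine-grained reward. `run`, `ex_reward`, `row_overlap_reward` and the toy table are illustrative names.

```python
# Sketch of a binary execution-accuracy (EX) reward for text-to-SQL RL,
# plus a HYPOTHETICAL partial-credit reward used only to illustrate a denser
# signal; it is not the fine-grained reward from the paper.
import sqlite3


def run(conn: sqlite3.Connection, sql: str):
    """Execute a query and return its rows, or None if it fails."""
    try:
        return conn.execute(sql).fetchall()
    except sqlite3.Error:
        return None


def ex_reward(conn: sqlite3.Connection, pred_sql: str, gold_sql: str) -> float:
    """1.0 iff predicted and gold queries return the same set of rows, else 0.0."""
    pred, gold = run(conn, pred_sql), run(conn, gold_sql)
    if pred is None or gold is None:
        return 0.0
    return 1.0 if set(pred) == set(gold) else 0.0


def row_overlap_reward(conn: sqlite3.Connection, pred_sql: str, gold_sql: str) -> float:
    """Hypothetical partial credit: Jaccard similarity of the returned row sets."""
    pred, gold = run(conn, pred_sql), run(conn, gold_sql)
    if pred is None or gold is None:
        return 0.0
    p, g = set(pred), set(gold)
    if not p and not g:
        return 1.0
    return len(p & g) / len(p | g)


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE t (id INTEGER, city TEXT)")
conn.executemany("INSERT INTO t VALUES (?, ?)", [(1, "Nice"), (2, "Paris"), (3, "Lyon")])

gold = "SELECT city FROM t WHERE id <= 2"
pred = "SELECT city FROM t WHERE id <= 3"  # returns one extra row
print(ex_reward(conn, pred, gold))           # 0.0: EX gives no credit
print(row_overlap_reward(conn, pred, gold))  # 2 of 3 distinct rows overlap
```

A nearly correct query earns nothing under EX, while the overlap variant still rewards partial progress. This is why sparse binary rewards can make RL training harder.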

Leveraging RL with our reward mechanism, we push Qwen-Coder-2.5 7B to performance on par with much larger LLMs (>400B) on the BIRD dataset! 🤯

Model: huggingface.co/simone-papic...

Paper: huggingface.co/papers/2504....

Details 👇

RAG struggles with broad, multi-hop questions.

We surpass RAG by up to 20 absolute points in QA performance, even with extreme cache compression (64x smaller)!

Details 👇
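Here is back-of-the-envelope KV-cache arithmetic showing what "64x smaller" means in memory. The model configuration and context length are assumptions for illustration: 32 layers, 8 key/value heads, head dimension 128, 16-bit values (roughly a Llama-3-8B-style setup), and a 128K-token context. The sketch says nothing about how the compression itself works.

```python
# Back-of-the-envelope KV-cache size; all model parameters below are assumptions.
def kv_cache_bytes(layers: int, kv_heads: int, head_dim: int, tokens: int,
                   bytes_per_value: int = 2) -> int:
    # Factor 2: one key and one value vector per KV head, per layer, per token.
    return 2 * layers * kv_heads * head_dim * tokens * bytes_per_value


GIB = 1024 ** 3
MIB = 1024 ** 2

full = kv_cache_bytes(layers=32, kv_heads=8, head_dim=128, tokens=128 * 1024)
compressed = full // 64  # the 64x compression factor from the post

print(f"full cache: {full / GIB:.1f} GiB")         # full cache: 16.0 GiB
print(f"compressed: {compressed / MIB:.0f} MiB")   # compressed: 256 MiB
```

This counts only the cache. The model weights still need their own memory.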

Our #COLING paper finds that tropes are used in 37% of the social posts debating immigration and vaccination.

📄 coling-2025-proceedings.s3.us-east-1.amazonaws.com/main/pdf/202...

👇

No more large GPUs needed in long-context scenarios!

I'm seeking PhD and Post-doc candidates to join my research group in 2025 at EURECOM in the south of France.

- 3 new projects on LLMs

- Full-time positions with competitive salaries and benefits

- English-speaking environment

Interested? Ping me!

A huge thank you to the team at NYU-AD (Miro Mannino, Junior Francisco Garcia Ayala, Reem Hazim, and Azza Abouzied) for their dedication to this joint effort!

Thanks also to the CIKM 2024 organizers and the award committee!

ACM #CIKM 2024! 🏆

Data voids are gaps in online information, which are often exploited to spread disinformation.

More details 👇

#CIKM2024 #DataVoids #Disinformation #KGs
