https://ofir.io/about

To find out, we built AlgoTune, a benchmark challenging agents to optimize 100+ algorithms like gzip compression, AES encryption and PCA. Frontier models struggle, finding only surface-level wins. Lots of headroom here!

algotune.io

github.com/swe-agent/sw...

SWE-bench MM is a new set of JavaScript issues that have a visual component (‘map isn’t rendering correctly’, ‘button text isn’t appearing’).

www.swebench.com/sb-cli/

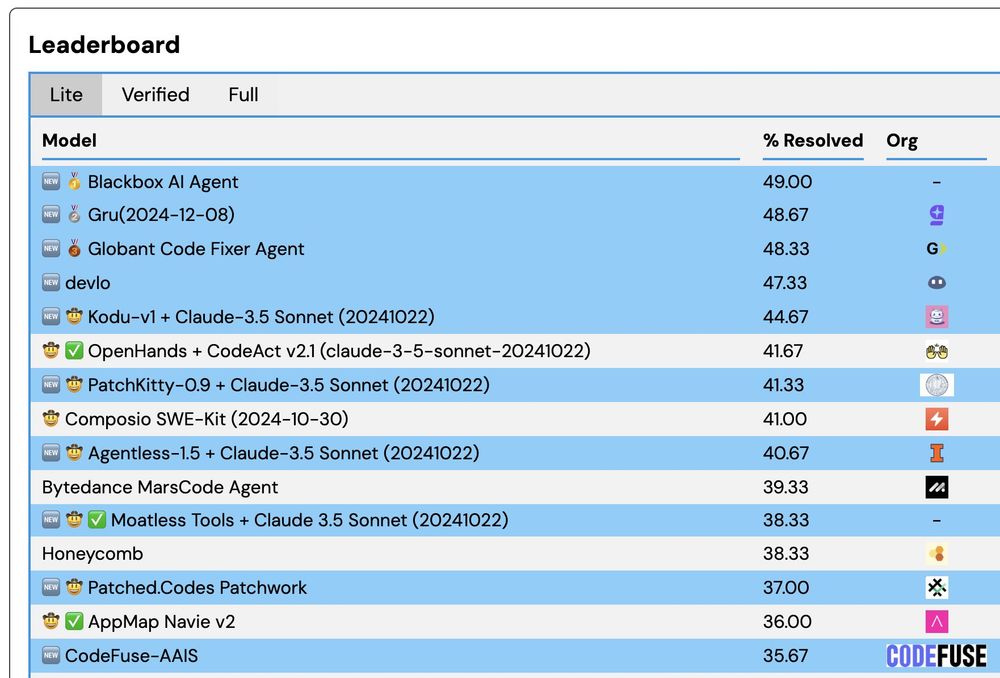

Congrats to Google on your first submission!

Credit to John Yang, Carlos E. Jimenez & Kilian Lieret, who maintain SWE-bench/SWE-agent.

I thought 6% was very ambitious but doable.

We ended up getting 12% 🤯 so I cooked dinner for everyone.

We're going to talk about a lot of upcoming SWE-agent features. Join @jyangballin @_carlosejimenez @KLieret and me. I also have a bunch of SWE-agent stickers to hand out :)

I develop autonomous systems for: programming, research-level question answering, finding security vulnerabilities & other useful+challenging tasks.

I do this by building frontier-pushing benchmarks and agents that do well on them.

See you at NeurIPS!

Turns out you can perform "motion prompting," just like you might prompt an LLM! Doing so enables many different capabilities.

motion-prompting.github.io
