Re: precision, I meant that the "compressed" network might not be a bottleneck in practice if its high-precision weights can store the same (or approximately the same) solution as the larger net.

It would be interesting to see how it does when quantized (at either test or train time).
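
A minimal sketch of test-time quantization, assuming uniform symmetric k-bit rounding with one scale per list of weights. The scheme is our assumption, and `quantize` is a hypothetical helper, not code from any of the linked papers. If a compressed net still solves the task after, say, 4-bit rounding of its weights, that would suggest it isn't hiding the solution in high precision.

```python
from typing import List, Sequence

def quantize(weights: Sequence[float], bits: int) -> List[float]:
    """Round each weight to the nearest point of a uniform symmetric grid
    with 2**(bits-1) - 1 positive levels (hypothetical helper)."""
    if bits < 2:
        raise ValueError("need at least 2 bits for a symmetric grid")
    max_abs = max((abs(w) for w in weights), default=0.0)
    if max_abs == 0.0:
        return [0.0 for _ in weights]  # all-zero (or empty) input stays zero
    levels = 2 ** (bits - 1) - 1       # e.g. 127 positive levels for 8 bits
    scale = max_abs / levels           # spacing between neighbouring grid points
    return [round(w / scale) * scale for w in weights]

# Usage: worst-case rounding error at several bit widths.
weights = [0.731, -1.204, 0.0005, 2.5]
for bits in (8, 4, 2):
    q = quantize(weights, bits)
    worst = max(abs(w - v) for w, v in zip(weights, q))
    print(f"{bits}-bit: max abs error {worst:.4f}")
```

For a real net you would quantize each layer and then re-run the task evaluation, since a small weight error can still flip a counting mechanism.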

See e.g. aclanthology.org/2024.acl-lon... and doi.org/10.1162/tacl...

and very similar work by Gaier & Ha 2019 arxiv.org/abs/1906.04358

proceedings.mlr.press/v217/el-nagg...

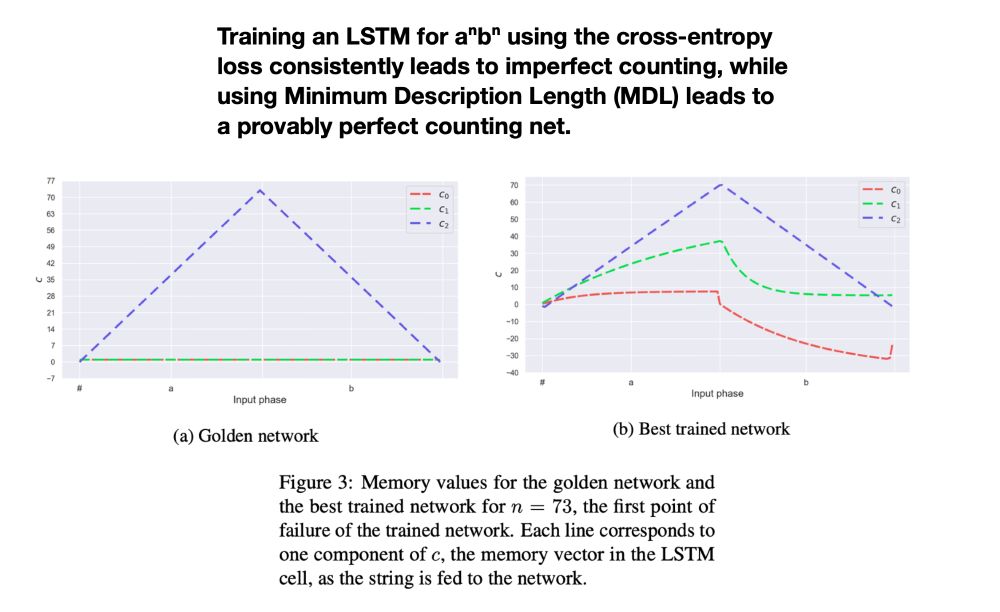

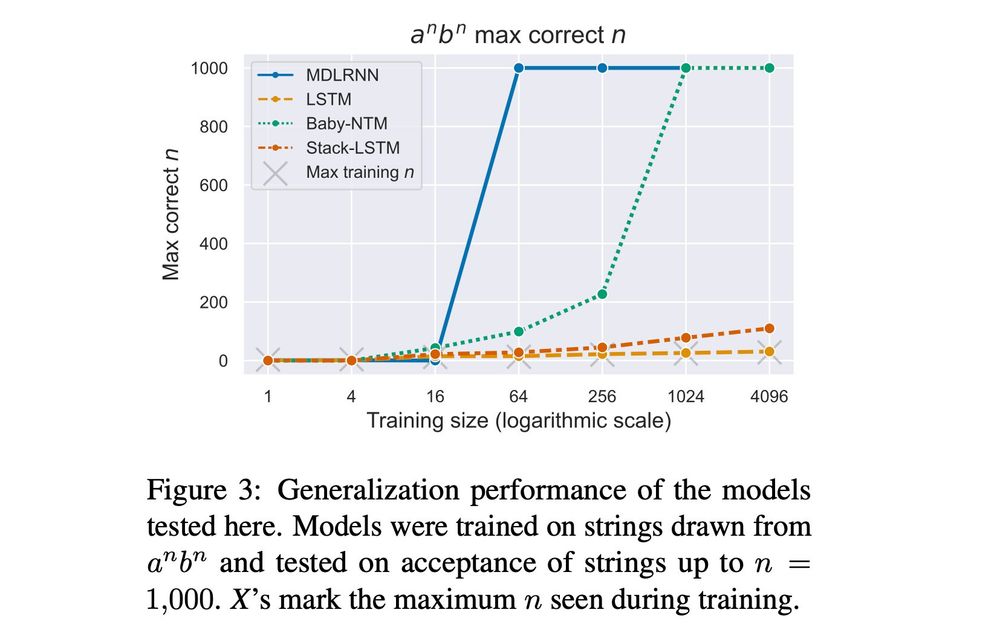

As well as with our MDL RNNs, which achieve perfect generalization on aⁿbⁿ, Dyck-1, etc.:

direct.mit.edu/tacl/article...
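
For context on "MDL RNNs": the textbook two-part Minimum Description Length criterion picks the hypothesis H (here, a network) that minimizes the bits needed to encode H plus the bits needed to encode the training data D given H. This is the generic form only; the paper's exact encoding of architectures and weights is not reproduced here. Under any encoding where finer-grained weights take more bits, the |H| term also penalizes high-precision solutions, which connects to the precision point above.

```latex
H^{*} = \arg\min_{H} \Big( |H| + |D : H| \Big), \qquad |D : H| = -\log_2 P(D \mid H)
```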

3/3

Meta-heuristics (early stopping, dropout) don't help either.

2/3

Using cases where humans have clear acceptability judgements, we find that all models systematically fail to assign higher probabilities to grammatical continuations.
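
A minimal sketch of this kind of minimal-pair evaluation, assuming each test item is a (prefix, grammatical continuation, ungrammatical continuation) triple and that a model exposes some `logprob(prefix, continuation)` score. The item format and `toy_logprob` are hypothetical stand-ins, not the models or data from the paper; the toy scorer simply prefers shorter continuations, so it lands at chance.

```python
from typing import Callable, Sequence, Tuple

# (prefix, grammatical continuation, ungrammatical continuation)
Item = Tuple[str, str, str]

def minimal_pair_accuracy(items: Sequence[Item],
                          logprob: Callable[[str, str], float]) -> float:
    """Fraction of items where the grammatical continuation gets a
    strictly higher log-probability than the ungrammatical one."""
    if not items:
        raise ValueError("need at least one item")
    wins = sum(1 for prefix, good, bad in items
               if logprob(prefix, good) > logprob(prefix, bad))
    return wins / len(items)

def toy_logprob(prefix: str, continuation: str) -> float:
    # Hypothetical "model": shorter continuations are more probable.
    return -float(len(continuation))

items = [
    ("The keys to the cabinet", " are", " is"),
    ("The key to the cabinets", " is", " are"),
]
acc = minimal_pair_accuracy(items, toy_logprob)
print(acc)
```

A model that tracked agreement would score near 1.0 here; a surface heuristic like length gets one item right only by accident.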

The second-best net, a Memory-Augmented RNN by Suzgun et al., shows that expressive power is important for GI but isn't enough when data is scarce.

A long line of work tested GI in different ways.

Many showed nets generalizing to some extent beyond their training data, but usually did not explain why generalization stopped at seemingly arbitrary points: why would a net get a¹⁰¹⁷b¹⁰¹⁷ right but a¹⁰¹⁸b¹⁰¹⁸ wrong?
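
One way to make that stopping point concrete: probe a model on aⁿbⁿ for increasing n and report the largest n up to which every string is handled correctly. `accepts` and `toy_net` are hypothetical stand-ins for a trained net's accept/reject decision; the toy breaks at n = 1018 to mirror the example above.

```python
from typing import Callable

def generalization_frontier(accepts: Callable[[str], bool], max_n: int) -> int:
    """Largest n such that a^k b^k is accepted for every 1 <= k <= n
    (0 if already k = 1 fails). Only positive strings are probed; a fuller
    check would also test rejection of near misses like a^n b^(n+1)."""
    frontier = 0
    for n in range(1, max_n + 1):
        if not accepts("a" * n + "b" * n):
            break
        frontier = n
    return frontier

def toy_net(s: str) -> bool:
    # Hypothetical net whose counter only works up to n = 1017.
    n = len(s) // 2
    return n <= 1017 and s == "a" * n + "b" * n

frontier = generalization_frontier(toy_net, 2000)
print(frontier)
```

A frontier like this says *where* a net fails but not *why*, which is the gap the thread is pointing at.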

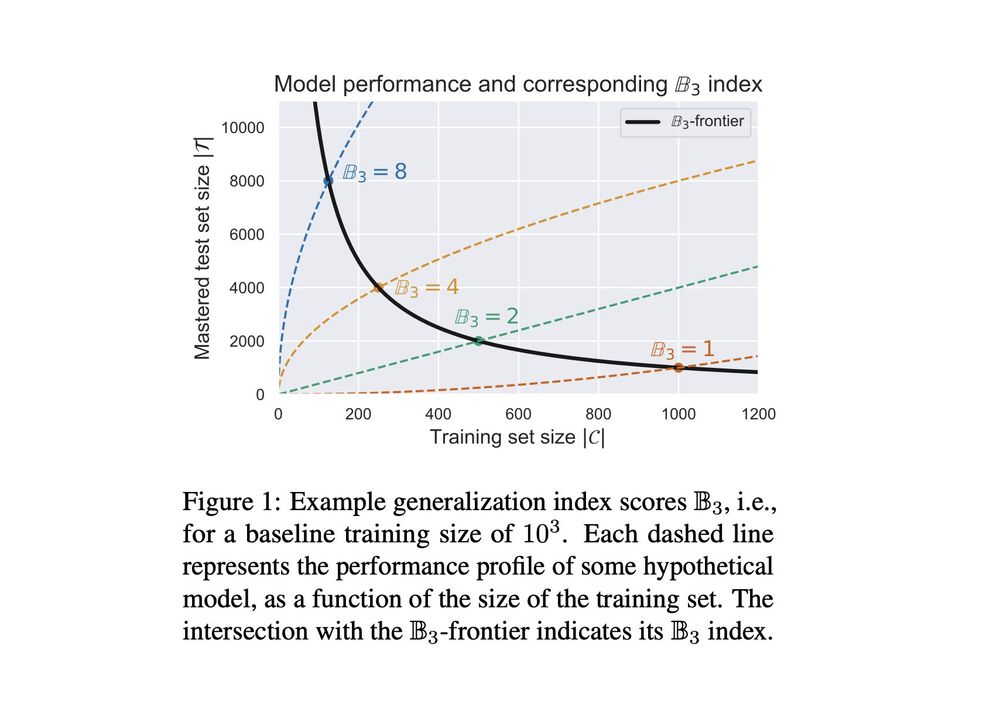

The benchmark assigns each model a generalization index that reflects how much it generalizes from how little training data.

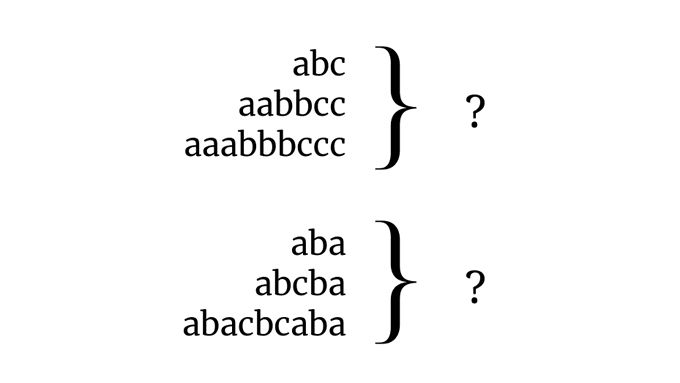

The initial release includes languages such as aⁿbⁿ, aⁿbᵐcⁿ⁺ᵐ, Dyck-1, and Dyck-2.
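
Membership in each of these languages can be checked exactly, which is what lets a benchmark score a net on arbitrarily long strings. A minimal sketch of such recognizers; allowing n, m = 0 and using `()`/`[]` as the bracket alphabets are our assumptions, not necessarily the benchmark's conventions.

```python
import re
from typing import Dict

def is_anbn(s: str) -> bool:
    """a^n b^n with n >= 0."""
    n = len(s) // 2
    return len(s) % 2 == 0 and s == "a" * n + "b" * n

def is_anbmcnm(s: str) -> bool:
    """a^n b^m c^(n+m) with n, m >= 0."""
    match = re.fullmatch(r"(a*)(b*)(c*)", s)
    return bool(match) and len(match.group(3)) == len(match.group(1)) + len(match.group(2))

def is_dyck(s: str, pairs: Dict[str, str]) -> bool:
    """Well-nested brackets; `pairs` maps each opener to its closer."""
    closers = set(pairs.values())
    stack = []
    for ch in s:
        if ch in pairs:
            stack.append(pairs[ch])    # remember which closer we now expect
        elif ch in closers:
            if not stack or stack.pop() != ch:
                return False           # unmatched or mismatched closer
        else:
            return False               # symbol outside the alphabet
    return not stack                   # every opener must be closed

DYCK1 = {"(": ")"}                     # one bracket type
DYCK2 = {"(": ")", "[": "]"}           # two bracket types
```

Dyck-1 needs only a counter, while Dyck-2 needs a stack, which is one reason to include both.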

Humans do this remarkably well based on very little data. What about neural nets?
