Meta-heuristics (early stopping, dropout) don't help either.

2/3

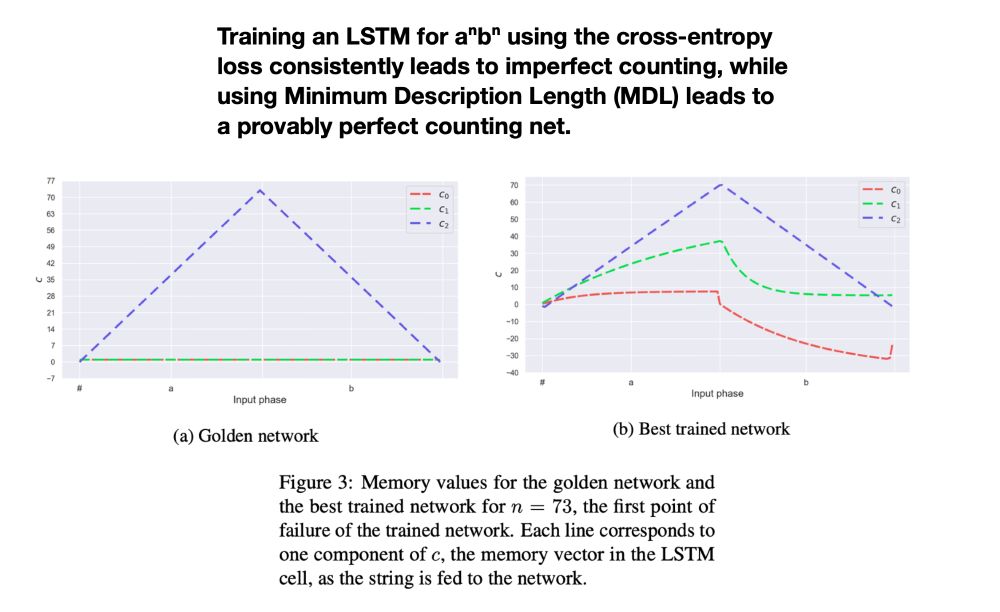

Neural nets offer good approximation but consistently fail to generalize perfectly, even when perfect solutions are proved to exist.

We check whether the culprit might be their training objective.

arxiv.org/abs/2402.10013

Using cases where humans have clear acceptability judgements, we find that all models systematically fail to assign higher probabilities to grammatical continuations.

ling.auf.net/lingbuzz/006...
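
A minimal sketch of the comparison described above, assuming each test item is a pair of model log-probabilities: one for the grammatical continuation and one for the ungrammatical one. The function name `minimal_pair_accuracy` and the input format are hypothetical; the exact metric used in the linked paper is not stated in this thread.

```python
from typing import Iterable, Tuple


def minimal_pair_accuracy(pairs: Iterable[Tuple[float, float]]) -> float:
    """Fraction of pairs where the grammatical continuation scores higher.

    Each pair is (logp_grammatical, logp_ungrammatical). This input format
    is an assumption made for illustration, not the paper's protocol.
    """
    pairs = list(pairs)
    if not pairs:
        raise ValueError("need at least one pair")
    # A pair counts as correct only if the grammatical continuation
    # receives strictly higher log-probability.
    correct = sum(1 for gram, ungram in pairs if gram > ungram)
    return correct / len(pairs)
```

A model that tracked human acceptability judgements on every item would score 1.0 here; a score near 0.5 would mean its preferences are no better than chance.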

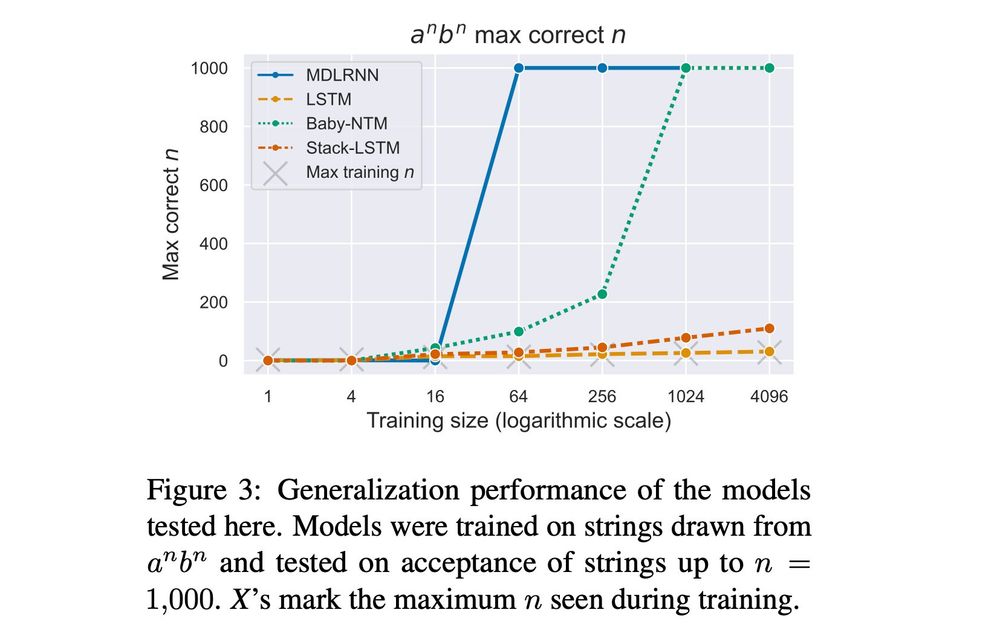

The second-best net, a Memory-Augmented RNN by Suzgun et al., shows that expressive power is important for GI, but isn't enough with little data.

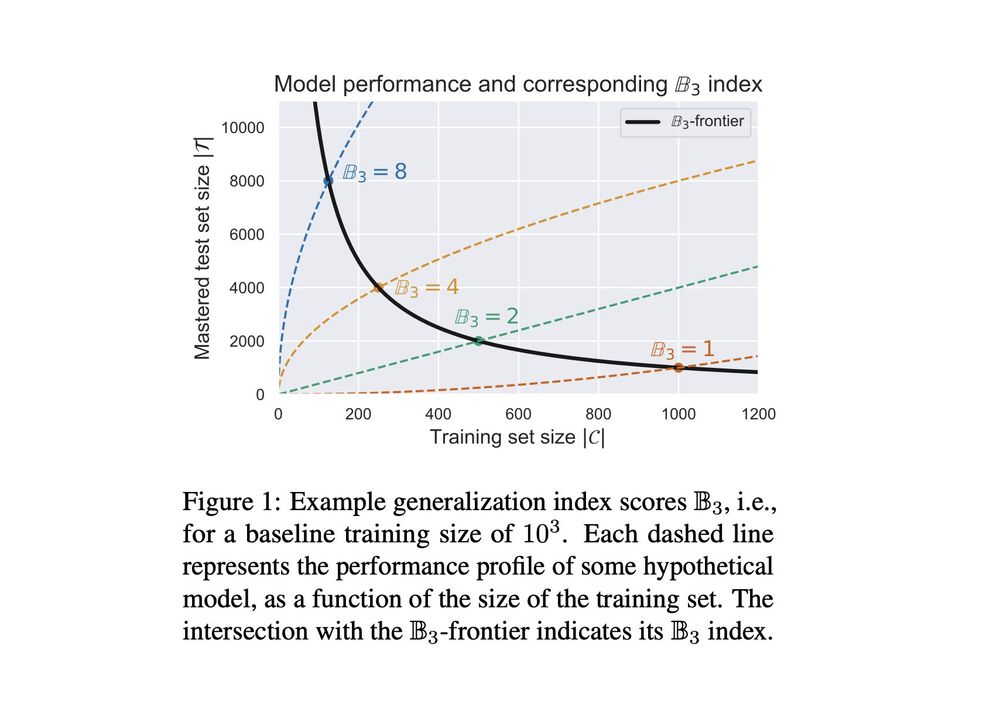

The benchmark assigns a generalization index to a model based on how much it generalizes from how little training data.

The initial release includes languages such as aⁿbⁿ, aⁿbᵐcⁿ⁺ᵐ, and Dyck 1-2.
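
For readers unfamiliar with these languages, here is a rough sketch of membership checks for each one. The function names are hypothetical, and details such as whether the empty string counts (n = 0) or which bracket symbols Dyck uses are assumptions, not taken from the benchmark.

```python
import re


def is_anbn(s: str) -> bool:
    """a^n b^n: n a's followed by exactly n b's (n >= 0 assumed)."""
    n = len(s) // 2
    return len(s) % 2 == 0 and s == "a" * n + "b" * n


def is_anbmcnm(s: str) -> bool:
    """a^n b^m c^(n+m): the number of c's equals the a's plus the b's."""
    m = re.fullmatch(r"(a*)(b*)(c*)", s)
    return m is not None and len(m.group(3)) == len(m.group(1)) + len(m.group(2))


def is_dyck(s: str, pairs=None) -> bool:
    """Well-nested brackets. One bracket type gives Dyck-1, two give Dyck-2.

    The default bracket symbols are an assumption made for illustration.
    """
    if pairs is None:
        pairs = {"(": ")", "[": "]"}
    closers = set(pairs.values())
    stack = []
    for ch in s:
        if ch in pairs:
            # Remember which closer this opener expects.
            stack.append(pairs[ch])
        elif ch in closers:
            if not stack or stack.pop() != ch:
                return False
        else:
            return False
    return not stack
```

Each check needs a counter or a stack, which is why a model has to learn something like counting to generalize beyond the string lengths it saw in training.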

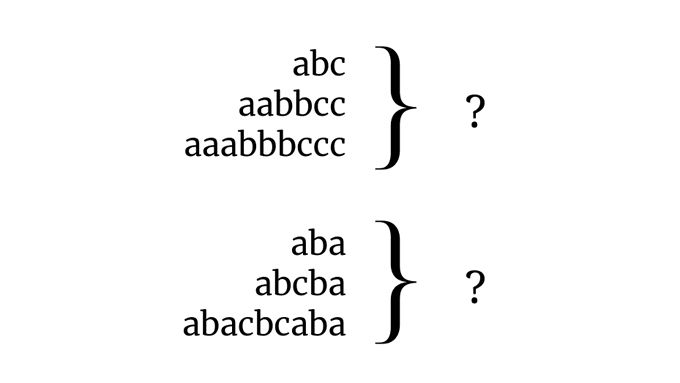

Humans do this remarkably well based on very little data. What about neural nets?
