✔️ Enabling more accurate retrieval for AI-generated responses

Kudos to @nohtow.bsky.social for this new SOTA achievement!

🔗 Read the full blog article: www.lighton.ai/lighton-blog...

Details in 🧵

Learn more about PyLate here: lightonai.github.io/pylate/

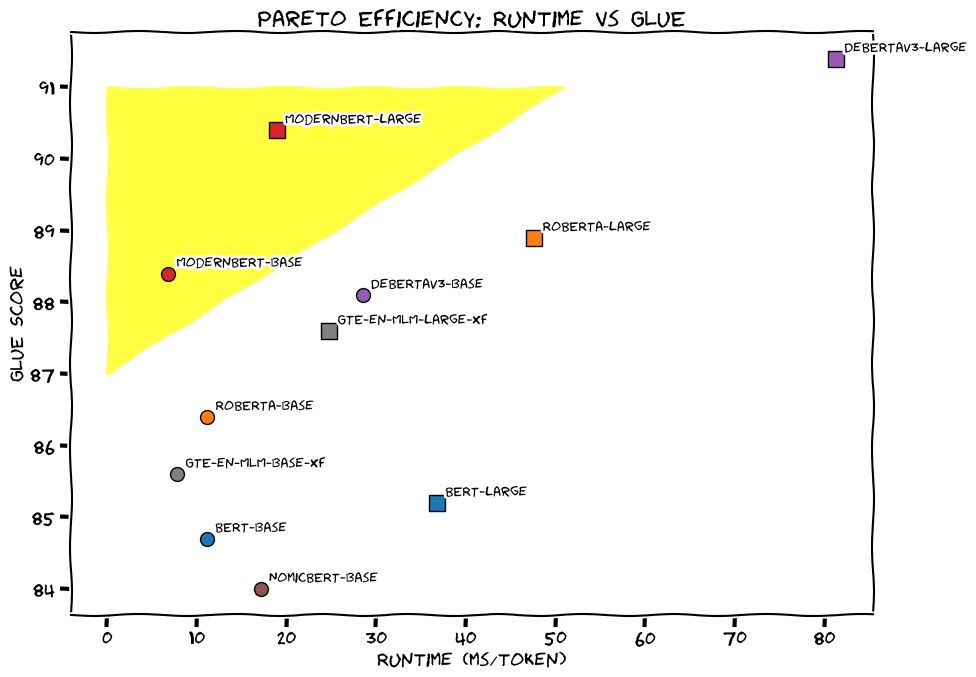

To overcome the limitations of awesome ModernBERT-based dense models, today @lightonai.bsky.social is releasing GTE-ModernColBERT, the very first state-of-the-art late-interaction (multi-vector) model trained using PyLate 🚀

huggingface.co/lightonai/mo...

Congrats to @nohtow.bsky.social for this great work!
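Late interaction (as in ColBERT) keeps one vector per token instead of pooling a text into a single vector, and scores a query against a document with MaxSim: for each query token, take the highest similarity to any document token, then sum over the query tokens. The sketch below illustrates that scoring rule in plain Python on hand-made toy vectors. It is not PyLate's API; `dot`, `maxsim` and the toy data are hypothetical, and real models use learned, normalized embeddings so that the dot product acts as a cosine similarity.

```python
from typing import List, Sequence

Vector = Sequence[float]


def dot(a: Vector, b: Vector) -> float:
    """Plain dot product (cosine similarity if both vectors are unit-normalized)."""
    return sum(x * y for x, y in zip(a, b))


def maxsim(query: Sequence[Vector], doc: Sequence[Vector]) -> float:
    """Late-interaction (MaxSim) score: for every query token vector, keep its
    best-matching document token vector, then sum those maxima."""
    return sum(max(dot(q, d) for d in doc) for q in query)


# Hypothetical toy "multi-vector" embeddings: one 2-d vector per token.
query: List[List[float]] = [[1.0, 0.0], [0.0, 1.0]]
doc_a: List[List[float]] = [[0.9, 0.1], [0.2, 0.8]]  # each query token has its own close match
doc_b: List[List[float]] = [[0.5, 0.5]]              # a single "averaged" token

score_a = maxsim(query, doc_a)
score_b = maxsim(query, doc_b)
print(f"doc_a: {score_a:.2f}, doc_b: {score_b:.2f}")
```

Here `doc_a` scores about 1.7 against 1.0 for `doc_b`: each query token finds its own best match in `doc_a`, a token-level signal that a single pooled vector tends to blur.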

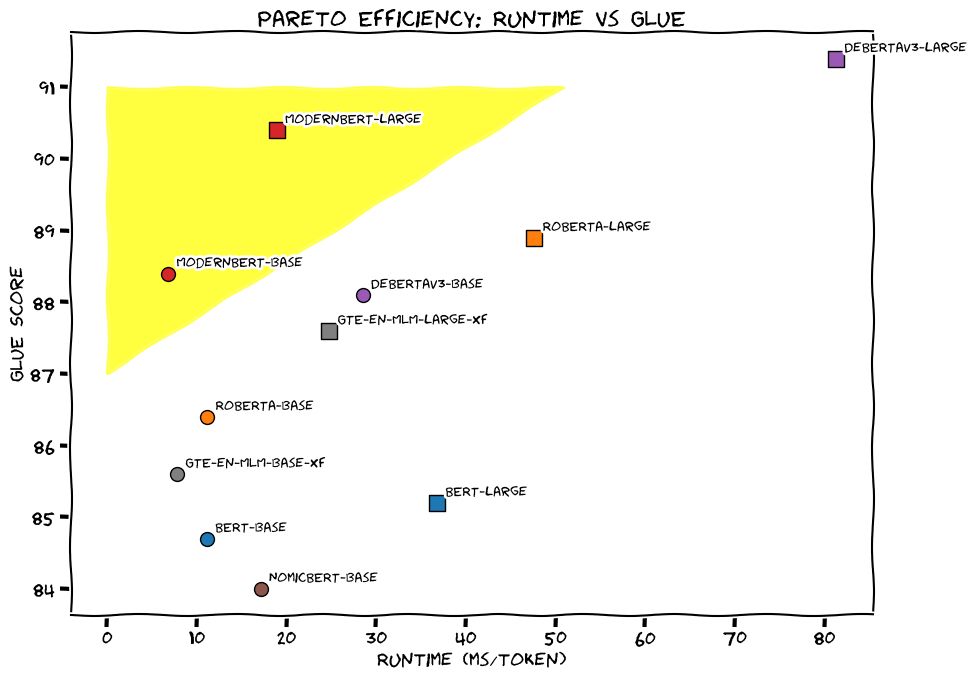

But the large variant of ModernBERT is also awesome...

So today, @lightonai.bsky.social is releasing ModernBERT-embed-large, the larger and more capable iteration of ModernBERT-embed!

This work was performed in collaboration with Answer.ai and the model was trained on Orange Business Cloud Avenue infrastructure.

www.lighton.ai/lighton-blog...

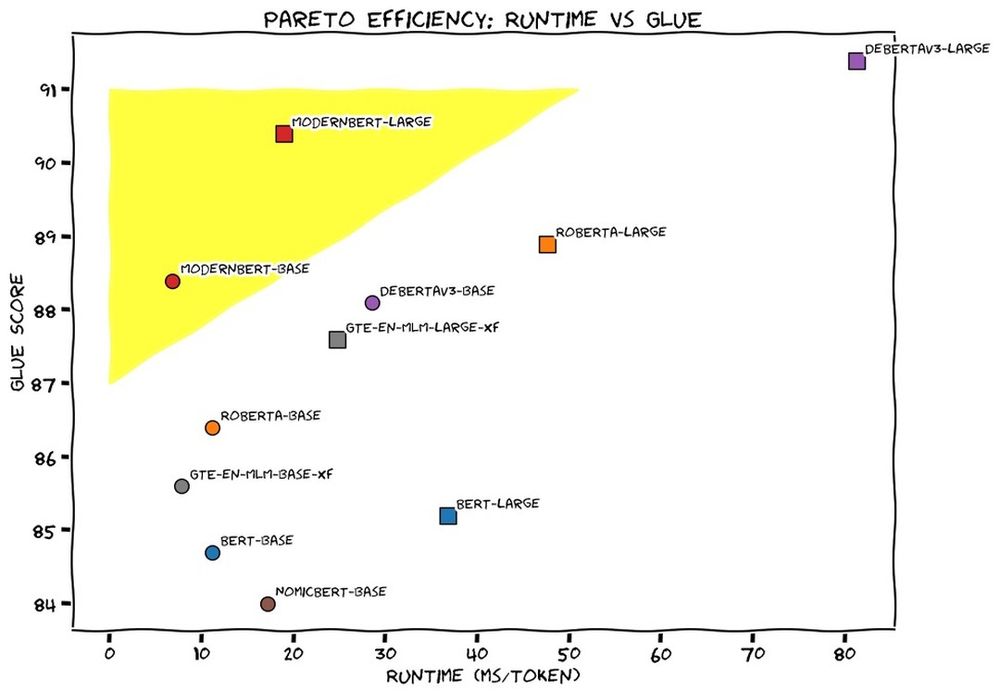

Introducing ModernBERT: 16x longer sequence length, better downstream performance (classification, retrieval), and the fastest, most memory-efficient encoder on the market.

🧵

We trained 2 new models. Like BERT, but modern. ModernBERT.

Not some hypey GenAI thing, but a proper workhorse model, for retrieval, classification, etc. Real practical stuff.

It's much faster and more accurate, handles longer context, and is more useful. 🧵

There might be a few LLM releases per week, but there is only one drop-in replacement that brings Pareto improvements over the 6-year-old BERT while going at lightspeed.

It turns out that training a new, SoTA model from scratch is actually pretty hard. Who knew? 🤷

Congratulations to @nohtow.bsky.social, Oskar Hallström, Said Taghadouini, Iacopo Poli and all the folks at Answer.ai who made this model a reality.

This update pushes the latest features upstream:

- Native loading of stanford-nlp models

- Serving of embeddings through a FastAPI server with dynamic batching

- Trained models now ship with a model card describing the model and its training setup
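Dynamic batching groups embedding requests that arrive close together into a single model call, trading a few milliseconds of latency for better hardware utilization. The following is a minimal stdlib `asyncio` sketch of that idea, not PyLate's implementation: `DynamicBatcher`, `max_batch_size`, `max_wait` and `fake_encode` are hypothetical names, the FastAPI layer is omitted, and `fake_encode` stands in for a real encoder.

```python
import asyncio
from typing import Callable, List


class DynamicBatcher:
    """Hypothetical helper: gathers concurrent requests until the batch is full
    or `max_wait` seconds have passed, then runs one batched encoder call."""

    def __init__(self, batch_fn: Callable[[List[str]], List[List[float]]],
                 max_batch_size: int = 32, max_wait: float = 0.01) -> None:
        self.batch_fn = batch_fn
        self.max_batch_size = max_batch_size
        self.max_wait = max_wait
        self.queue: asyncio.Queue = asyncio.Queue()  # create inside a running loop

    async def embed(self, text: str) -> List[float]:
        # Each caller enqueues its text with a future and waits for its own result.
        future = asyncio.get_running_loop().create_future()
        await self.queue.put((text, future))
        return await future

    async def run(self) -> None:
        loop = asyncio.get_running_loop()
        while True:
            batch = [await self.queue.get()]  # block until the first request arrives
            deadline = loop.time() + self.max_wait
            while len(batch) < self.max_batch_size:
                timeout = deadline - loop.time()
                if timeout <= 0:
                    break
                try:
                    batch.append(await asyncio.wait_for(self.queue.get(), timeout))
                except asyncio.TimeoutError:
                    break  # window closed: flush what we have
            try:
                results = self.batch_fn([text for text, _ in batch])
            except Exception as exc:  # propagate encoder failures to every caller
                for _, fut in batch:
                    if not fut.done():
                        fut.set_exception(exc)
                continue
            for (_, fut), vector in zip(batch, results):
                if not fut.done():  # skip callers that were cancelled meanwhile
                    fut.set_result(vector)


batch_sizes: List[int] = []


def fake_encode(texts: List[str]) -> List[List[float]]:
    """Stand-in encoder: records the batch size, returns a 1-d 'embedding'."""
    batch_sizes.append(len(texts))
    return [[float(len(t))] for t in texts]


async def main() -> List[List[float]]:
    batcher = DynamicBatcher(fake_encode, max_batch_size=8, max_wait=0.05)
    worker = asyncio.create_task(batcher.run())
    vectors = await asyncio.gather(*(batcher.embed(s) for s in ["a", "bb", "ccc"]))
    worker.cancel()
    return list(vectors)


vectors = asyncio.run(main())
print(vectors, batch_sizes)
```

A web handler (for example a FastAPI endpoint) would simply `await batcher.embed(text)`; the worker flushes a batch either when it is full or when `max_wait` elapses, whichever comes first, so the three concurrent requests above are served by one encoder call.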
