, @maartensap.bsky.social

#AI #MachineLearning #AIEthics #LLMs #nlp #NLProc #NAACL2025

And thanks to our amazing collaborators and advisors for this project, @akhilayerukola.bsky.social, @maartensap.bsky.social, and @gneubig.bsky.social from @ltiatcmu.bsky.social! 🙏

This study sets the state of the art in handling ambiguity in real-world software engineering (SWE) tasks.

🔗 Repo: t.co/QD2A8N4R4J

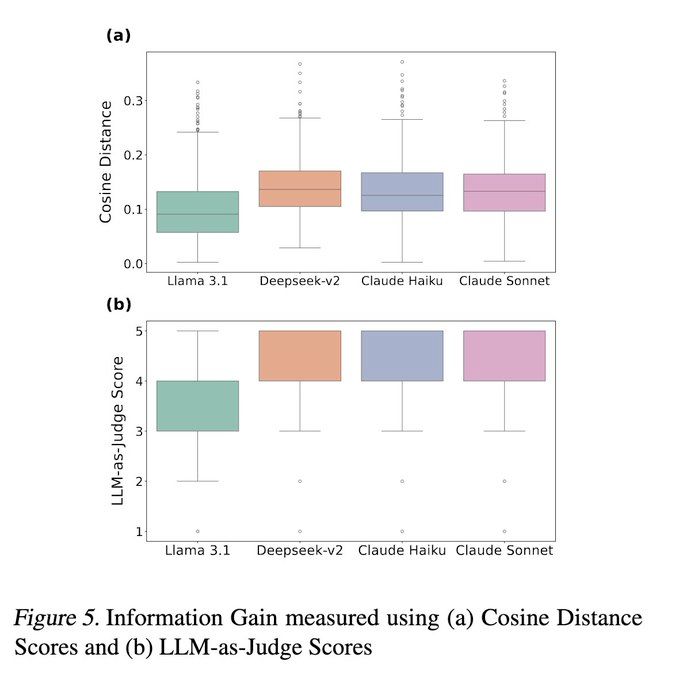

🔹 Llama 3.1 70B asks generic, low-impact questions.

🔹 Claude Haiku 3.5 picks up keywords directly from the input to ask questions.

🔹 Claude Sonnet 3.5 often explores the code first, leading to smarter interactions. 🔍💡

Meanwhile, DeepSeek-V2 can overwhelm users with too many questions. 🤯

Only Claude Sonnet 3.5 can distinguish ambiguous from well-specified inputs, and only to a limited degree with the right prompt. 🔍

🔑 (a) Using interactivity to boost performance in ambiguous scenarios

💡 (b) Detecting ambiguity effectively

❓ (c) Asking the right questions

We put them to the test on SWE-Bench Verified across three distinct settings to measure the impact. 📊

🔗 Link: arxiv.org/abs/2502.13069

All Hands' open-source approach and its bold, curious team make it the perfect playground for this exploration. Can't wait to dive in with @gneubig.bsky.social, Xingyao and the amazing team!