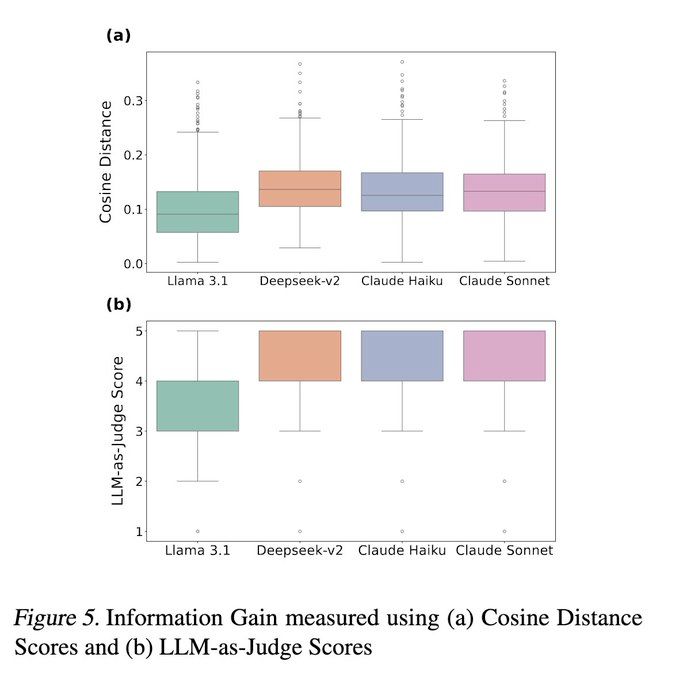

🔹 Llama 3.1 70B asks generic, low-impact questions.

🔹 Claude Haiku 3.5 picks up keywords directly from the input to ask questions.

🔹 Claude Sonnet 3.5 often explores the code first, leading to smarter interactions. 🔍💡

Meanwhile, DeepSeek-V2 can overwhelm users with too many questions. 🤯

Only Claude Sonnet 3.5 can make this distinction to a limited degree with the right prompt. 🔍

We put them to the test on SWE-Bench Verified across three distinct settings to measure the impact. 📊

Tired of coding agents wasting time and API credits, only to output broken code? What if they asked first instead of guessing? 🚀

(New work led by Sanidhya Vijay: www.linkedin.com/in/sanidhya-...)
