Currently: Stats PhD @Harvard

Previously: CS/Soc @Stanford, Stat/ML @Oxford

https://njw.fish

Sequences binned by expression → GDEs provide a powerful embedding space for regulatory sequence design.

🦠 SARS-CoV-2 spike protein dynamics

GDEs embed monthly lineage distributions and recover smooth latent chronologies.

GDEs model phenotype distributions induced by perturbations and generalize to unseen conditions.

🧬 DNA methylation (253M reads)

We learn tissue-specific methylation patterns directly from raw bisulfite reads — no alignment necessary.

Each clone is a population of cells → a distribution over expression. GDEs predict clonal fate better than prior approaches.

🧪 CRISPR perturbation effects

GDEs can improve zero-shot prediction of transcriptional response distributions.

We show that GDE embeddings are asymptotically normal, grounding this with results from empirical process theory.

GDE latent distances track Wasserstein-2 (W₂) distances across modalities (shown for multinomial distributions)

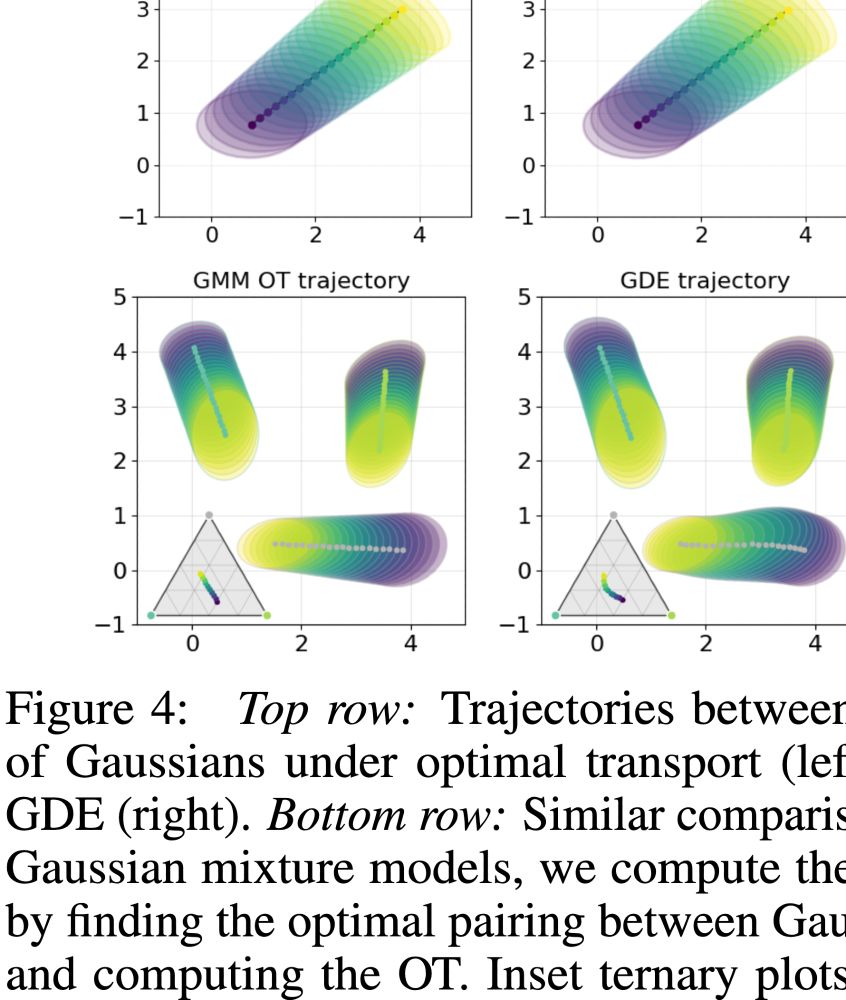

Latent interpolations recover optimal transport paths (shown for Gaussians and Gaussian mixtures)

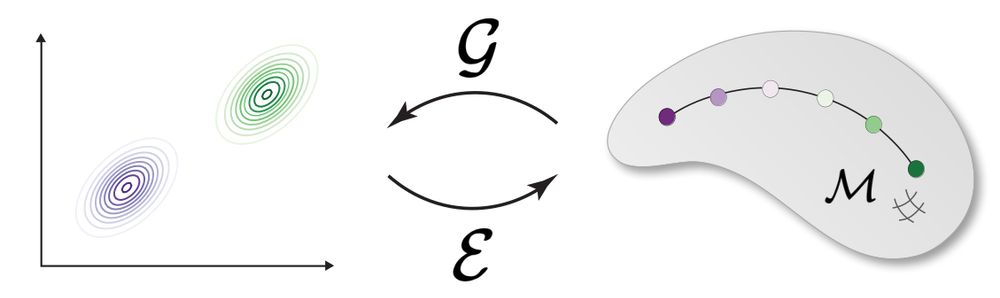
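
For intuition on what "optimal transport path" means in the Gaussian case, here is a minimal sketch for 1D Gaussians only, using the standard closed forms rather than anything from the GDE code. The function names `w2_gaussian_1d` and `ot_interpolate_1d` are hypothetical, chosen for illustration. Along the W₂ geodesic between two 1D Gaussians, the mean and standard deviation both interpolate linearly, so the midpoint sits at exactly half the W₂ distance.

```python
import numpy as np

def w2_gaussian_1d(m1, s1, m2, s2):
    """W2 distance between N(m1, s1^2) and N(m2, s2^2) (closed form, 1D)."""
    return np.sqrt((m1 - m2) ** 2 + (s1 - s2) ** 2)

def ot_interpolate_1d(m1, s1, m2, s2, t):
    """Point at time t on the W2 geodesic: mean and std interpolate linearly."""
    return (1 - t) * m1 + t * m2, (1 - t) * s1 + t * s2

# Illustrative endpoints (assumed values, not from the thread).
m_mid, s_mid = ot_interpolate_1d(0.0, 1.0, 3.0, 2.0, 0.5)
total = w2_gaussian_1d(0.0, 1.0, 3.0, 2.0)
half = w2_gaussian_1d(0.0, 1.0, m_mid, s_mid)
```

A latent space whose straight lines decode to paths like this one would show the behavior described above; whether and how closely a trained GDE does so is what the figure reports.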

We introduce Generative Distribution Embeddings (GDEs) — a framework for learning representations of distributions, not just datapoints.

GDEs enable multiscale modeling and come with elegant statistical theory and some miraculous geometric results!

🧵
