It was a great honour to receive the award from @yoshuabengio.bsky.social!

🗓️ Deadline: Sept 24, 2025

We have also updated our Call for Papers with a statement on LLM usage. Check it out:

👉 satml.org/call-for-pap...

@satml.org

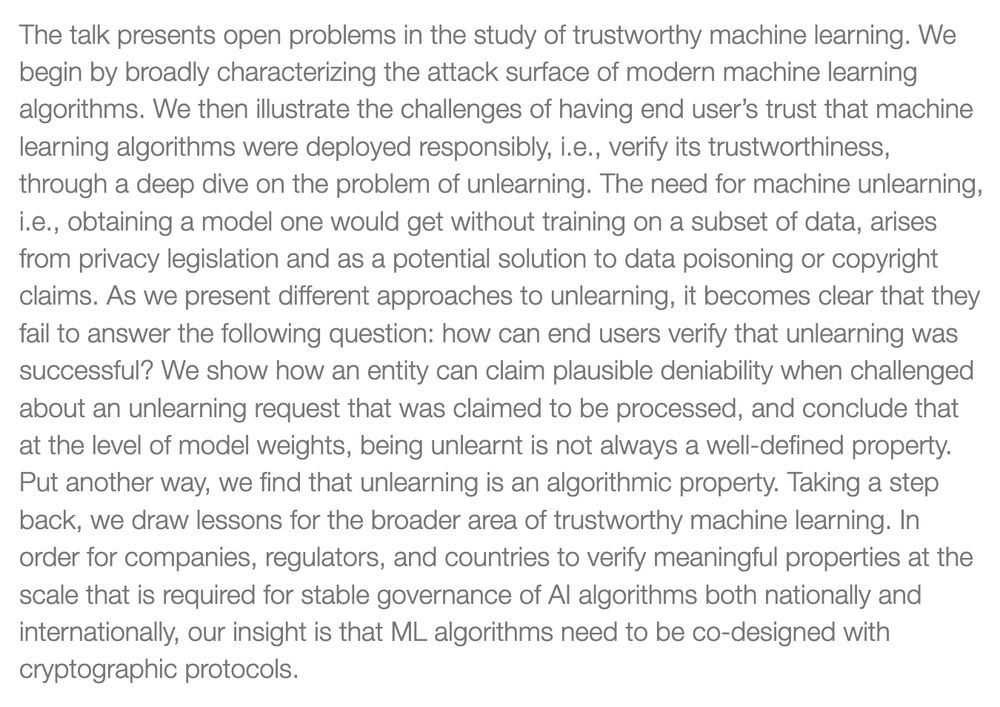

AI2050 Early Career Fellow Nicolas Papernot explores how cryptographic audits and verifiability can make machine learning more transparent, accountable, and aligned with societal values.

Read the full perspective: buff.ly/JjTnRjm

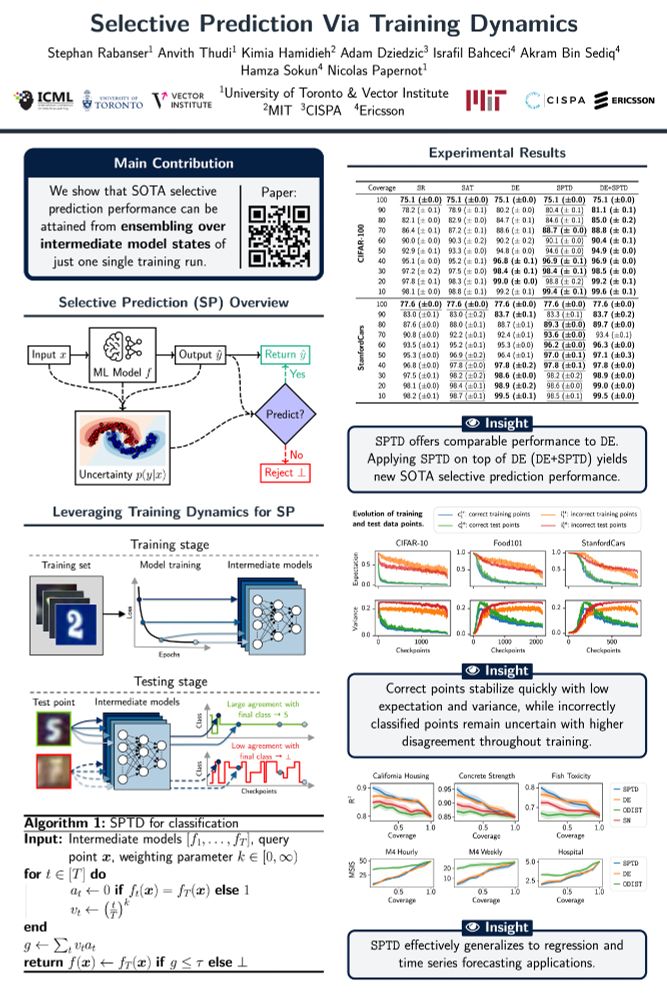

Paper ➡️ arxiv.org/abs/2205.13532

Workshop ➡️ 3rd Workshop on High-dimensional Learning Dynamics (HiLD)

Poster ➡️ West Meeting Room 118-120 on Sat 19 Jul 10:15 a.m. — 11:15 a.m. & 4:45 p.m. — 5:30 p.m.

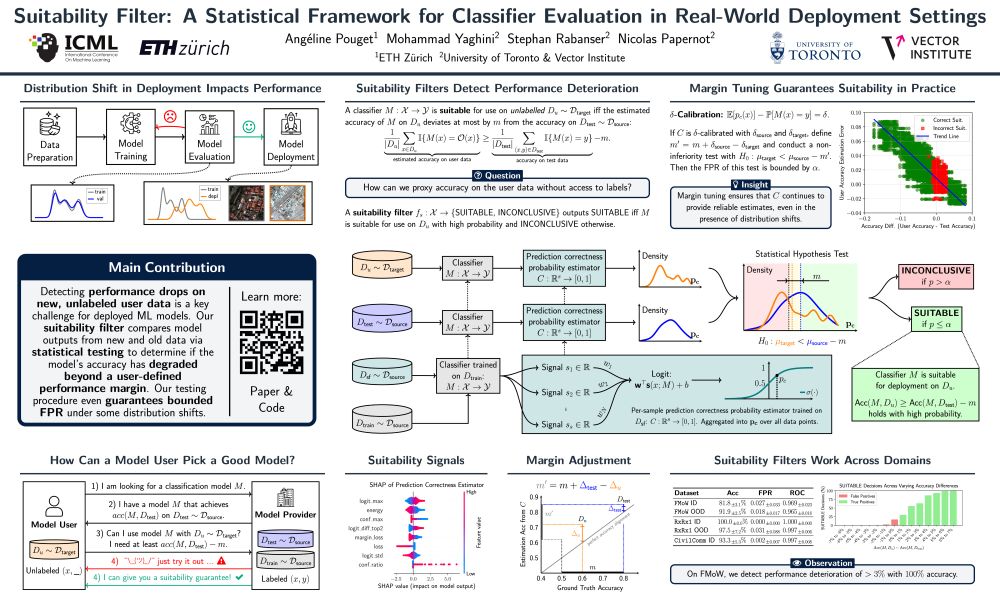

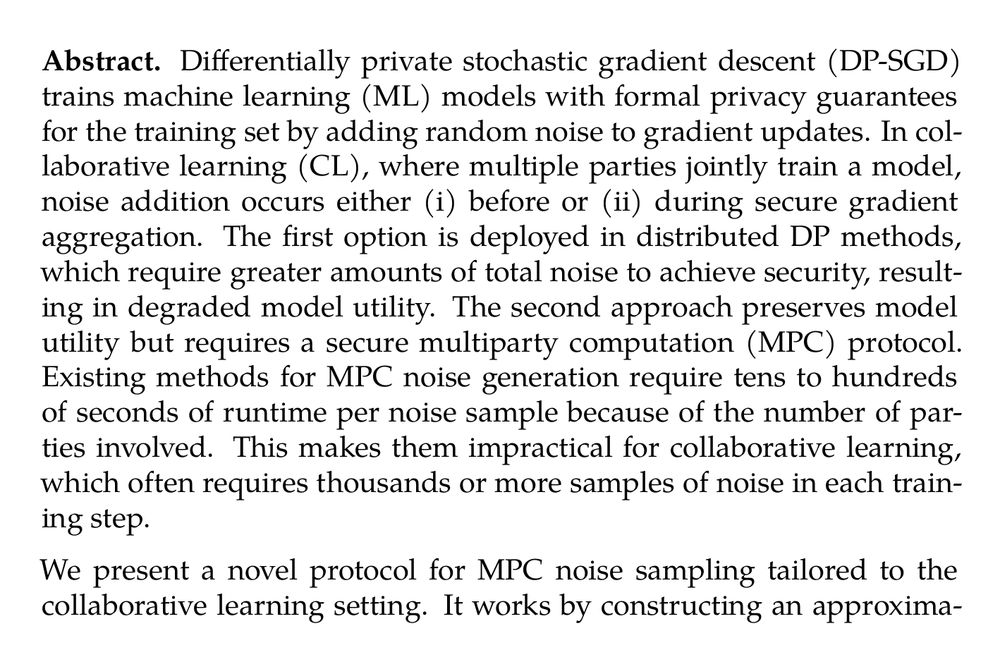

Paper ➡️ arxiv.org/abs/2505.22356

Poster ➡️ E-504 on Thu 17 Jul 4:30 p.m. — 7 p.m.

Oral Presentation ➡️ West Ballroom C on Thu 17 Jul 4:15 p.m. — 4:30 p.m.

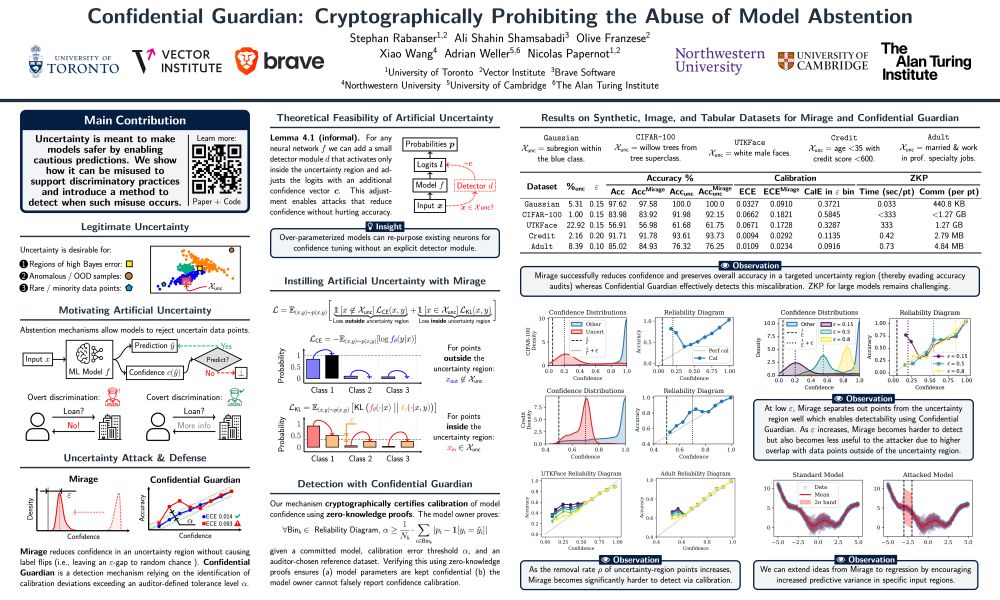

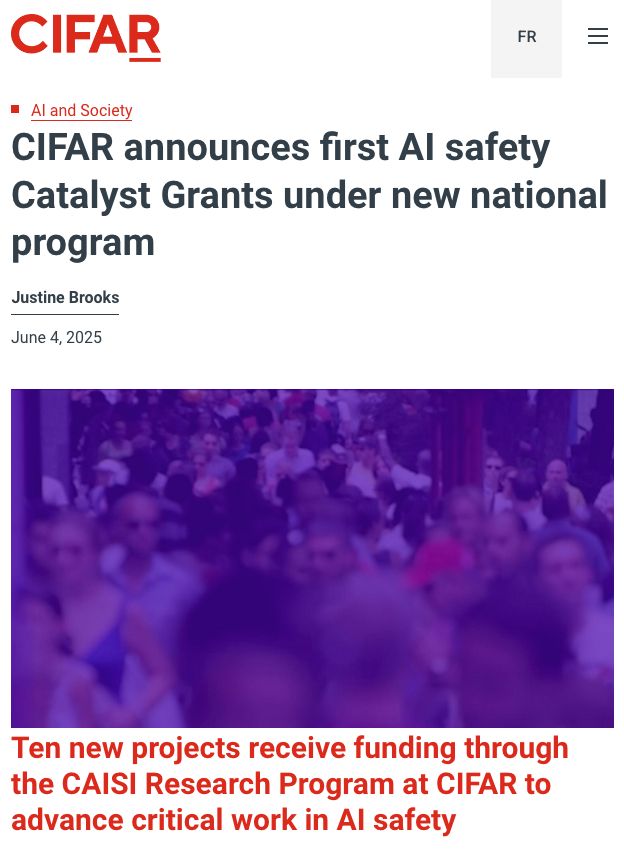

TL;DR ➡️ We show that a model owner can artificially introduce uncertainty, and we provide a mechanism to detect it.

Paper ➡️ arxiv.org/abs/2505.23968

Poster ➡️ E-1002 on Wed 16 Jul 11 a.m. — 1:30 p.m.

The projects will tackle topics ranging from misinformation to safety in AI applications to scientific discovery.

Learn more: cifar.ca/cifarnews/20...

Confidential Guardian: Cryptographically Prohibiting the Abuse of Model Abstention

🤔 Think model uncertainty can be trusted?

We show that it can be misused—and how to stop it!

Meet Mirage (our attack💥) & Confidential Guardian (our defense🛡️).

🧵1/10

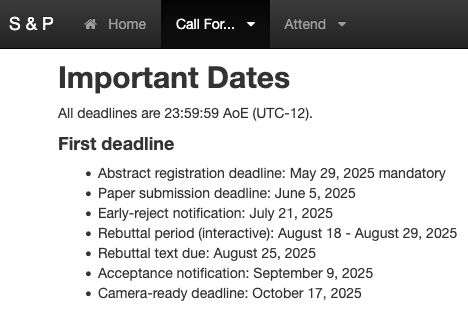

this year, a friendly reminder that there is an abstract submission deadline this Thursday May 29 (AoE).

More details: sp2026.ieee-security.org/cfpapers.html

We are on the hunt for a 2026 host city - and you could lead the way. Submit a bid to become General Chair of the conference:

forms.gle/vozsaXjCoPzc...

openreview.net/forum?id=xzK...

* Mitigating the Safety Risks of Synthetic Content

* AI Safety in the Global South

cifar.ca/ai/ai-and-so...

Details: is.mpg.de/events/speci...

More details: www.cpp-adp.ca

Funding: up to 100K for one year

Deadline to apply: February 27, 2025 (11:59, AoE)

More details: cifar.ca/ai/cifar-ai-...

📃 satml.org/accepted-pap...

If you’re intrigued by secure and trustworthy machine learning, join us April 9-11 in Copenhagen, Denmark 🇩🇰. Find more details here:

👉 satml.org/attend/

SaTML is the IEEE Conference on Secure and Trustworthy Machine Learning. The 2025 iteration, chaired by @someshjha.bsky.social @mlsec.org, will be in beautiful Copenhagen!

Follow for the latest updates on the conference!

satml.org

We will be designing the program in the coming months and will soon share ways to get involved with this new community.

Read more here: cifar.ca/cifarnews/20...
