Nicolas Papernot

@nicolaspapernot.bsky.social

Security and Privacy of Machine Learning at UofT, Vector Institute, and Google 🇨🇦🇫🇷🇪🇺 Co-Director of Canadian AI Safety Institute (CAISI) Research Program at CIFAR. Opinions mine

Thank you to Samsung for the AI Researcher of 2025 award! I'm privileged to collaborate with many talented students & postdoctoral fellows @utoronto.ca @vectorinstitute.ai . This would not have been possible without them!

It was a great honour to receive the award from @yoshuabengio.bsky.social !

September 22, 2025 at 12:35 AM

Reposted by Nicolas Papernot

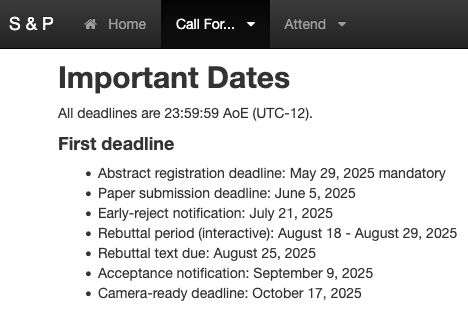

Three weeks to go until the SaTML 2026 deadline! ⏰ We look forward to your work on security, privacy, and fairness in AI.

🗓️ Deadline: Sept 24, 2025

We have also updated our Call for Papers with a statement on LLM usage, check it out:

👉 satml.org/call-for-pap...

@satml.org

September 3, 2025 at 1:42 PM

Thank you to @schmidtsciences.bsky.social for funding our lab's work on cryptographic approaches for verifiable guarantees in ML systems and for connecting us to other groups working on these questions!

How can we build AI systems the world can trust?

AI2050 Early Career Fellow Nicolas Papernot explores how cryptographic audits and verifiability can make machine learning more transparent, accountable, and aligned with societal values.

Read the full perspective: buff.ly/JjTnRjm

Community Perspective - Nicolas Papernot - AI2050

As legislation and policy for AI is developed, we are making decisions about the societal values AI systems should uphold. But how do we trust that AI systems are abiding by human values such as…

ai2050.schmidtsciences.org

July 23, 2025 at 4:20 PM

Reposted by Nicolas Papernot

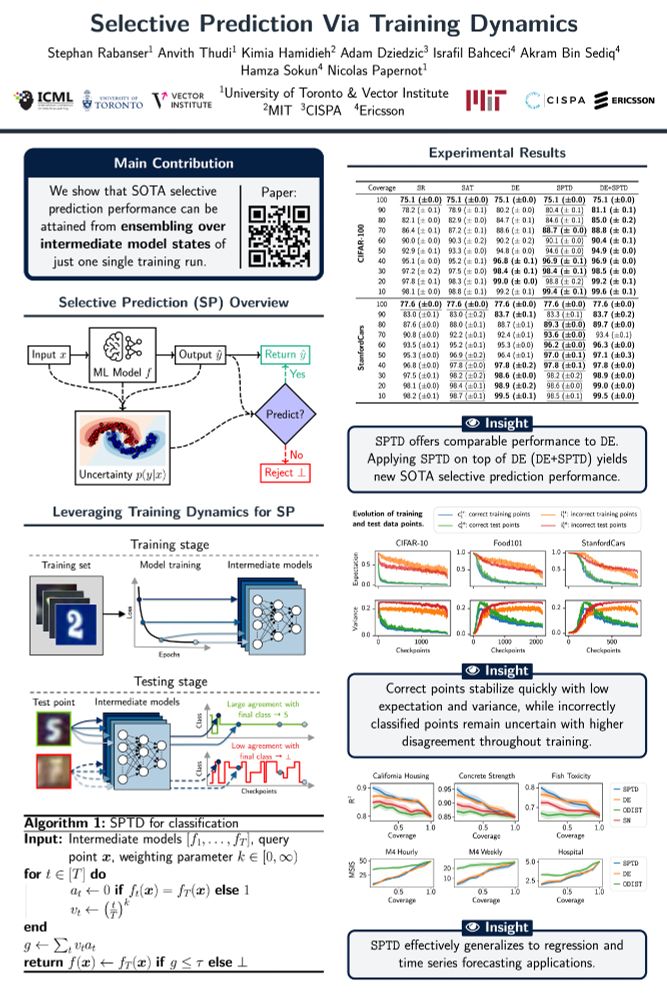

📄 Selective Prediction Via Training Dynamics

Paper ➡️ arxiv.org/abs/2205.13532

Workshop ➡️ 3rd Workshop on High-dimensional Learning Dynamics (HiLD)

Poster ➡️ West Meeting Room 118-120 on Sat 19 Jul 10:15 a.m. — 11:15 a.m. & 4:45 p.m. — 5:30 p.m.

July 11, 2025 at 8:04 PM

Reposted by Nicolas Papernot

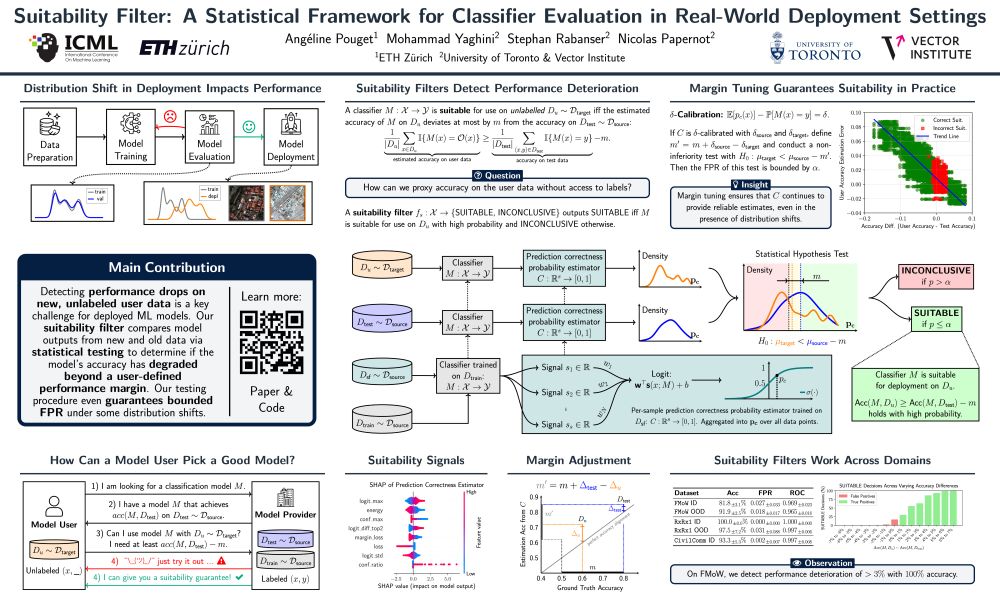

📄 Suitability Filter: A Statistical Framework for Classifier Evaluation in Real-World Deployment Settings (✨ oral paper ✨)

Paper ➡️ arxiv.org/abs/2505.22356

Poster ➡️ E-504 on Thu 17 Jul 4:30 p.m. — 7 p.m.

Oral Presentation ➡️ West Ballroom C on Thu 17 Jul 4:15 p.m. — 4:30 p.m.

July 11, 2025 at 8:04 PM

Reposted by Nicolas Papernot

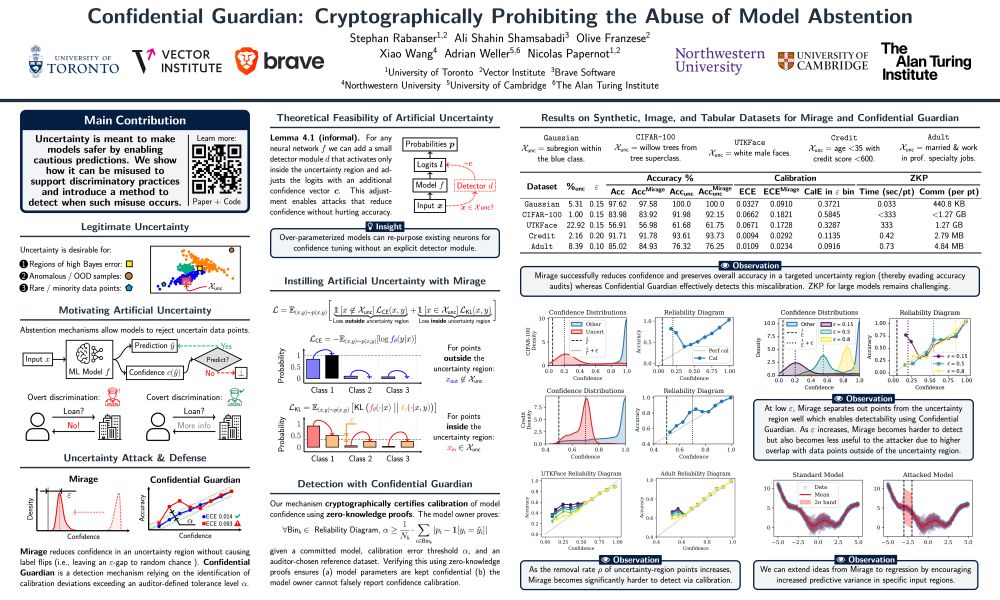

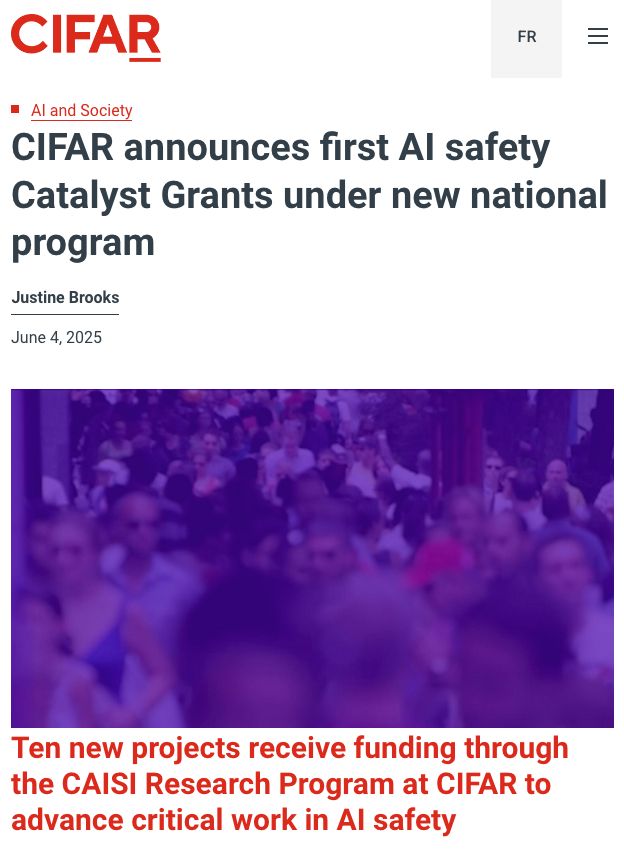

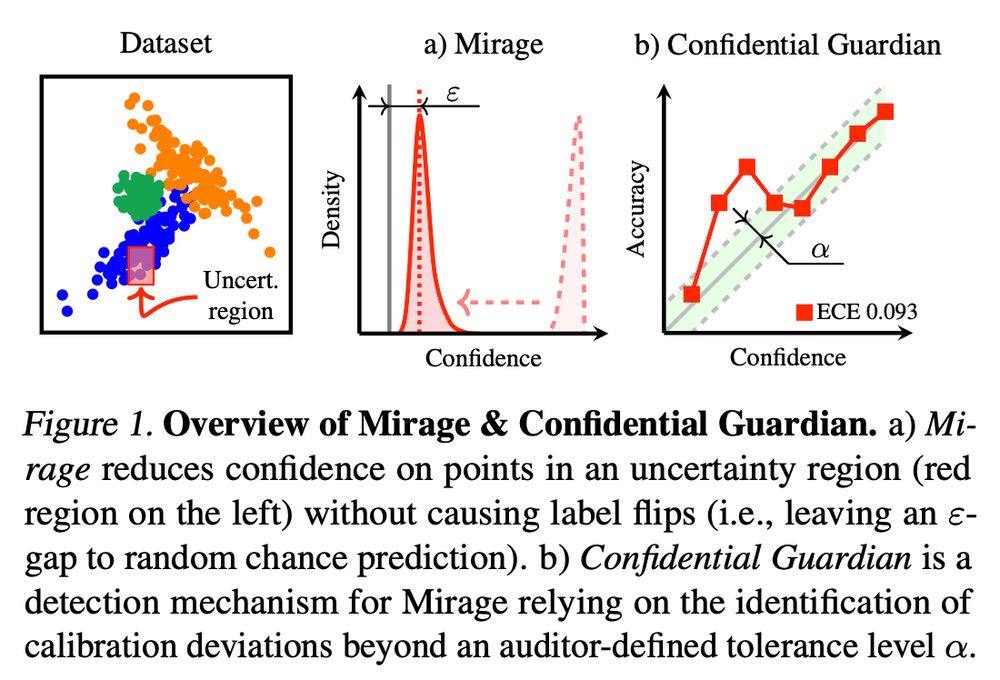

📄 Confidential Guardian: Cryptographically Prohibiting the Abuse of Model Abstention

TL;DR ➡️ We show that a model owner can artificially introduce uncertainty and provide a detection mechanism.

Paper ➡️ arxiv.org/abs/2505.23968

Poster ➡️ E-1002 on Wed 16 Jul 11 a.m. — 1:30 p.m.

July 11, 2025 at 8:04 PM

Reposted by Nicolas Papernot

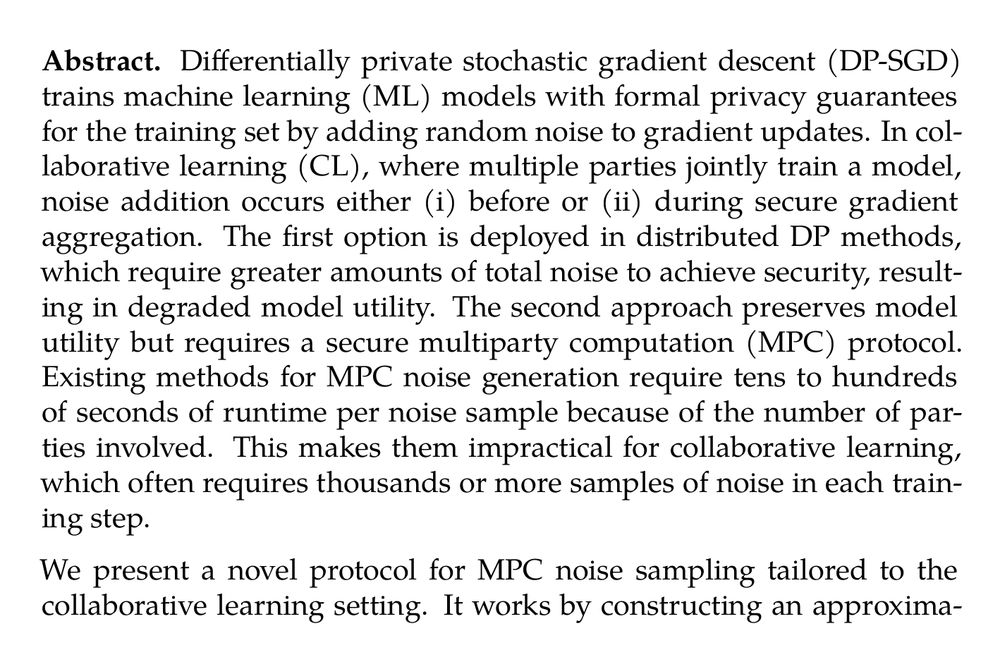

Secure Noise Sampling for Differentially Private Collaborative Learning (Olive Franzese, Congyu Fang, Radhika Garg, Somesh Jha, Nicolas Papernot, Xiao Wang, Adam Dziedzic) ia.cr/2025/1025

June 2, 2025 at 8:29 PM

Excited to share the first batch of research projects funded through the Canadian AI Safety Institute's research program at CIFAR!

The projects will tackle topics ranging from misinformation to safety in AI applications to scientific discovery.

Learn more: cifar.ca/cifarnews/20...

June 5, 2025 at 2:21 PM

Reposted by Nicolas Papernot

📢 New ICML 2025 paper!

Confidential Guardian: Cryptographically Prohibiting the Abuse of Model Abstention

🤔 Think model uncertainty can be trusted?

We show that it can be misused—and how to stop it!

Meet Mirage (our attack💥) & Confidential Guardian (our defense🛡️).

🧵1/10

June 2, 2025 at 2:38 PM

If you are submitting to @ieeessp.bsky.social this year, a friendly reminder that there is an abstract submission deadline this Thursday May 29 (AoE).

More details: sp2026.ieee-security.org/cfpapers.html

May 27, 2025 at 12:49 PM

Reposted by Nicolas Papernot

As part of the theme Societal Aspects of Securing the Digital Society, I will be hiring PhD students and postdocs at #MPI-SP, focusing in particular on the computational and sociotechnical aspects of technology regulations and the governance of emerging tech. Get in touch if interested.

May 23, 2025 at 2:12 PM

Reposted by Nicolas Papernot

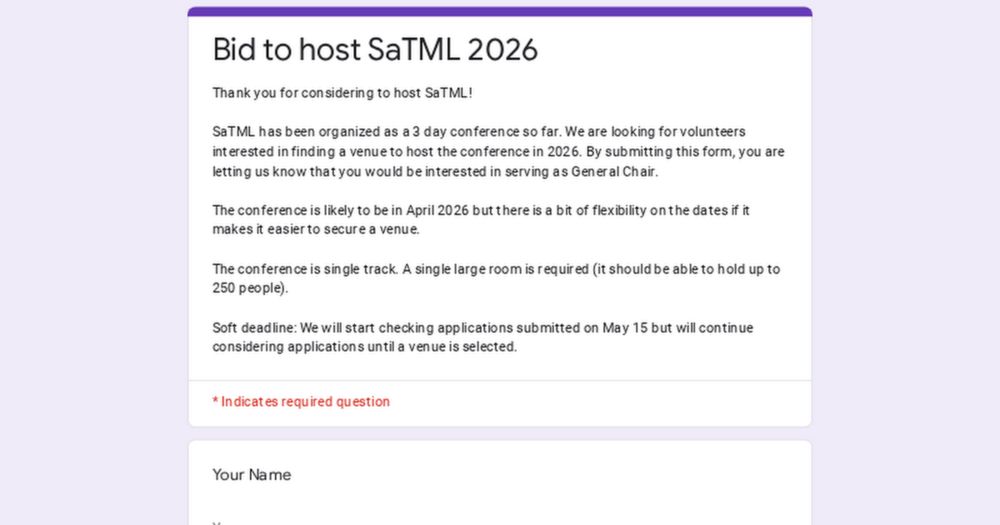

🌍 Help shape the future of SaTML!

We are on the hunt for a 2026 host city - and you could lead the way. Submit a bid to become General Chair of the conference:

forms.gle/vozsaXjCoPzc...

Bid to host SaTML 2026

Thank you for considering hosting SaTML!

SaTML has been organized as a 3 day conference so far. We are looking for volunteers interested in finding a venue to host the conference in 2026. By submitti...

forms.gle

May 12, 2025 at 12:15 PM

Reposted by Nicolas Papernot

Excited to be in Singapore for ICLR, presenting our work on privacy auditing (w/ Aurélien & @nicolaspapernot.bsky.social). If you are interested in differential privacy/privacy auditing/security for ML, drop by (#497 26 Apr 10-12:30 pm) or let's grab a coffee! ☕

openreview.net/forum?id=xzK...

Tighter Privacy Auditing of DP-SGD in the Hidden State Threat Model

Machine learning models can be trained with formal privacy guarantees via differentially private optimizers such as DP-SGD. In this work, we focus on a threat model where the adversary has access...

openreview.net

April 21, 2025 at 2:57 PM

Reposted by Nicolas Papernot

👋 Welcome to #SaTML25! Kicking things off with opening remarks --- excited for a packed schedule of keynotes, talks and competitions on secure and trustworthy machine learning.

April 9, 2025 at 7:14 AM

Reposted by Nicolas Papernot

Karina Vold says the rapid development of AI systems has left both philosophers & computer scientists grappling with difficult questions. #UofT 💻 uoft.me/bsp

April 1, 2025 at 2:00 PM

Congratulations again, Stephan, on this brilliant next step! Looking forward to what you will accomplish with @randomwalker.bsky.social & @msalganik.bsky.social!

Starting off this account with a banger: In September 2025, I will be joining @princetoncitp.bsky.social at Princeton University as a Postdoc working with @randomwalker.bsky.social & @msalganik.bsky.social! I am very excited about this opportunity to continue my work on trustworthy/reliable ML! 🥳

March 13, 2025 at 7:50 AM

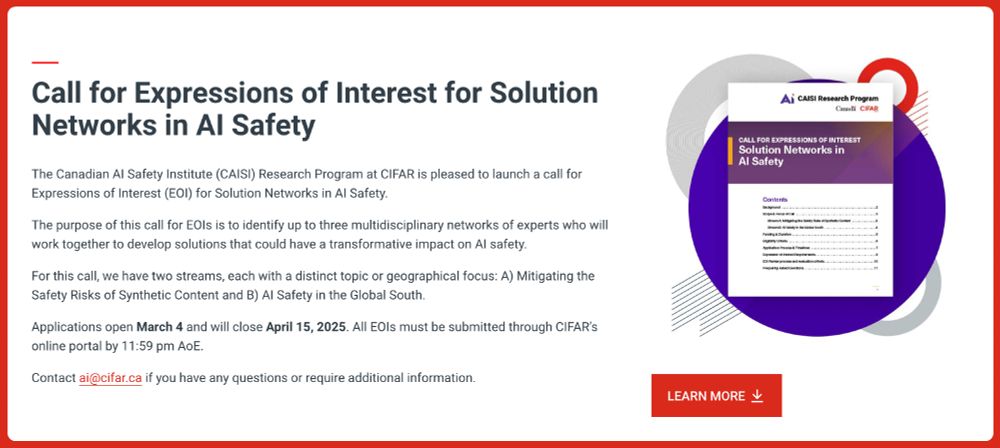

The Canadian AI Safety Institute (CAISI) Research Program at CIFAR is now accepting Expressions of Interest for Solution Networks in AI Safety under two themes:

* Mitigating the Safety Risks of Synthetic Content

* AI Safety in the Global South.

cifar.ca/ai/ai-and-so...

March 12, 2025 at 7:21 PM

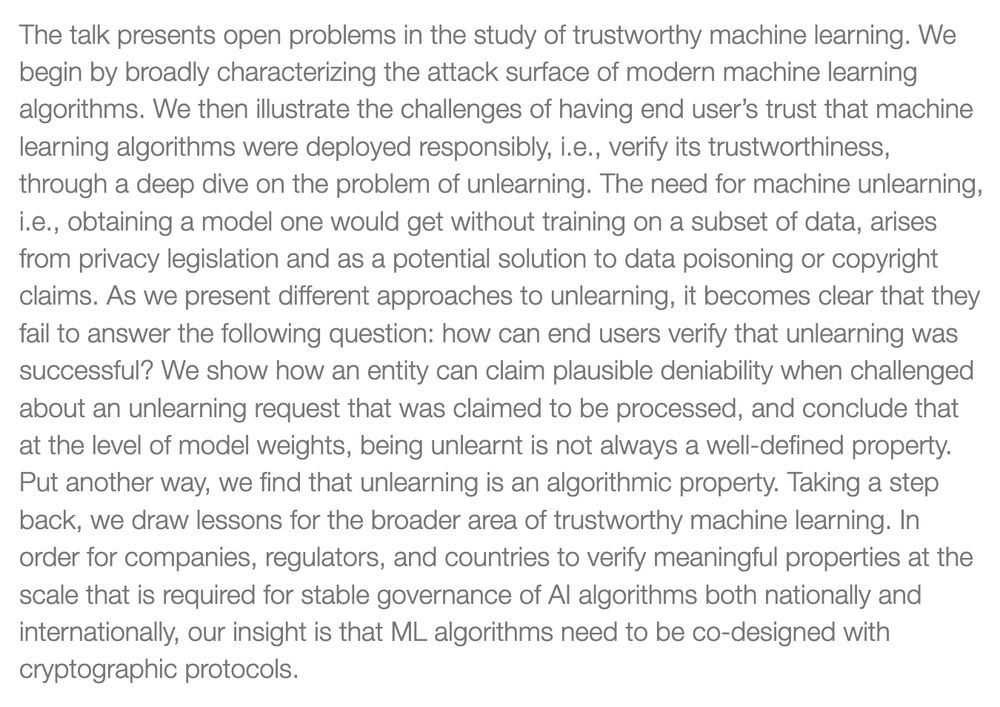

I will be giving a talk at the MPI-IS @maxplanckcampus.bsky.social in Tübingen next week (March 12 @ 11am). The talk will cover my group's overall approach to trust in ML, with a focus on our work on unlearning and how to obtain verifiable guarantees of trust.

Details: is.mpg.de/events/speci...

March 5, 2025 at 3:40 PM

For Canadian colleagues, CIFAR and the CPI at UWaterloo are sponsoring a special issue "Artificial Intelligence Safety and Public Policy in Canada" in Canadian Public Policy / Analyse de politiques

More details: www.cpp-adp.ca

January 31, 2025 at 7:59 PM

One of the first components of the CAISI (Canadian AI Safety Institute) research program has just launched: a call for Catalyst Grant Projects on AI Safety.

Funding: up to 100K for one year

Deadline to apply: February 27, 2025 (11:59, AoE)

More details: cifar.ca/ai/cifar-ai-...

CIFAR AI Catalyst Grants - CIFAR

Encouraging new collaborations and original research projects in the field of machine learning, as well as its application to different sectors of science and society.

cifar.ca

January 31, 2025 at 7:42 PM

Reposted by Nicolas Papernot

The list of accepted papers for @satml.org 2025 is now online:

📃 satml.org/accepted-pap...

If you’re intrigued by secure and trustworthy machine learning, join us April 9-11 in Copenhagen, Denmark 🇩🇰. Find more details here:

👉 satml.org/attend/

Accepted Papers

satml.org

January 21, 2025 at 2:25 PM

If you work at the intersection of security, privacy, and machine learning, or more broadly how to trust ML, SaTML is a small-scale conference with highly-relevant work where you'll be able to have high-quality conversations with colleagues working in your area.

January 14, 2025 at 4:06 PM

Reposted by Nicolas Papernot

Hello world! The SaTML conference is now flying the blue skies!

SaTML is the IEEE Conference on Secure and Trustworthy Machine Learning. The 2025 iteration, chaired by @someshjha.bsky.social @mlsec.org, will be in beautiful Copenhagen!

Follow for the latest updates on the conference!

satml.org

December 20, 2024 at 1:14 AM

I look forward to co-directing the Canadian AI Safety Institute (CAISI) Research Program at CIFAR with @catherineregis.bsky.social

We will be designing the program in the coming months and will soon share ways to get involved with this new community.

Read more here: cifar.ca/cifarnews/20...

December 12, 2024 at 7:36 PM
