https://myracheng.github.io/

📢 I'm recruiting PhD students in Computer Science or Information Science @cornellbowers.bsky.social!

If you're interested, apply to either department (yes, either program!) and list me as a potential advisor!

Our new position paper, Attention to Non-Adopters, explores why this matters: AI research is being shaped around adopters—leaving non-adopters’ needs, and key LLM research opportunities, behind.

arxiv.org/abs/2510.15951

@neilrathi.bsky.social will be presenting our work on multilingual overconfidence in language models and the effects on human overreliance!

arxiv.org/pdf/2507.06306

Across 3 experiments (n = 3,285), we found that interacting with sycophantic (or overly agreeable) AI chatbots entrenched attitudes and led to inflated self-perceptions.

Yet, people preferred sycophantic chatbots and viewed them as unbiased!

osf.io/preprints/ps...

Thread 🧵

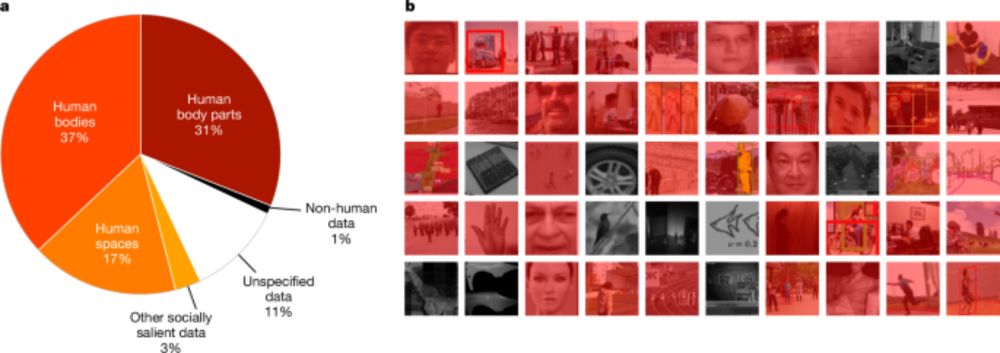

We analysed over 40,000 computer vision papers from CVPR (the longest-standing CV conf) & associated patents, tracing pathways from research to application. We found that 90% of papers & 86% of downstream patents power surveillance.

1/

While Reddit users might say yes, your favorite LLM probably won’t.

We present Social Sycophancy: a new way to understand and measure sycophancy as how LLMs overly preserve users' self-image.

Metaphors shape how people understand politics, but measuring them (& their real-world effects) is hard.

We develop a new method to measure metaphor & use it to study dehumanizing metaphor in 400K immigration tweets.

Link: bit.ly/4i3PGm3

#NLP #NLProc #polisky #polcom #compsocialsci

🐦🐦

A position paper by me, @dbamman.bsky.social, and @ibleaman.bsky.social on cultural NLP: what we want, what we have, and how sociocultural linguistics can clarify things.

Website: naitian.org/culture-not-...

1/n