Did I miss any important points?

justinjackson.ca/twitter-blue...

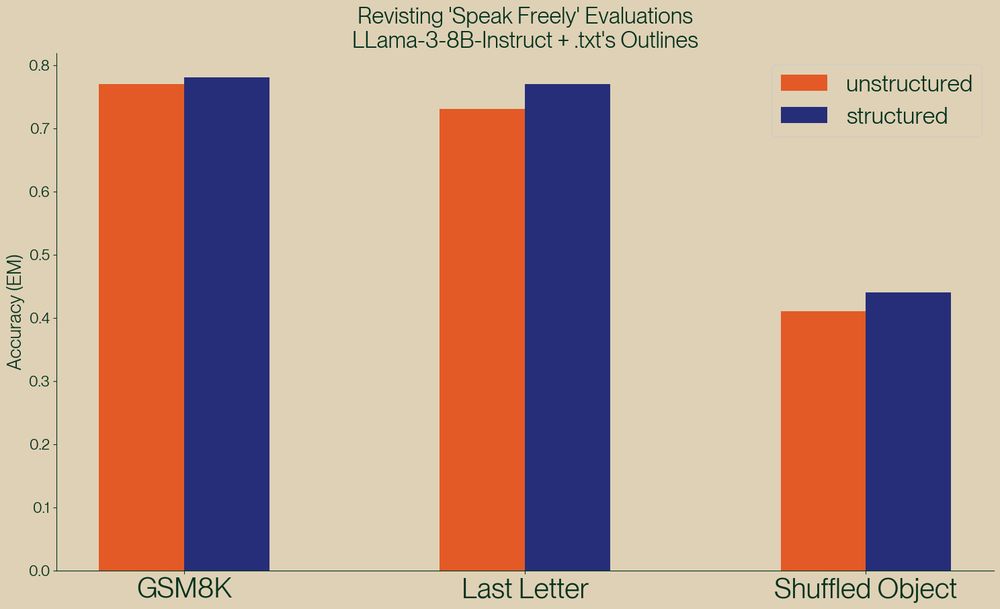

@willkurt.bsky.social provides a rebuttal to a reasonably well-known paper which concluded that structured generation with LLMs always resulted in worse performance.

We do not find the same thing.

blog.dottxt.co/say-what-you...
