Shitposter

Data wrangler

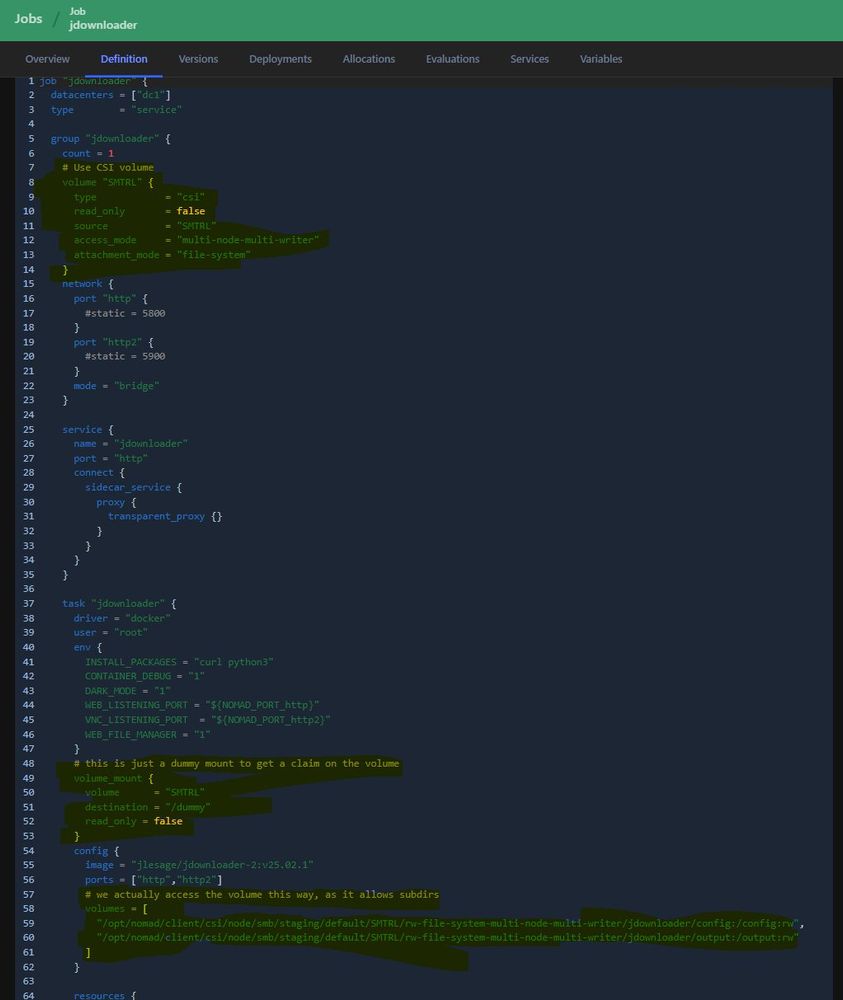

#Nomad #Hashistack #CSI #Docker

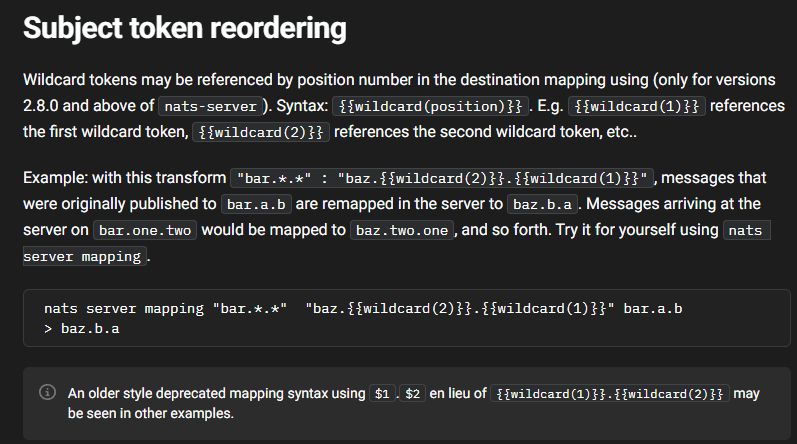

If you're running your own jetstream, would it make sense to simply put those links in the subject (w/o re-emitting) and just re-order via NATS subject mapping, to let others access by DID over WebSocket?

So, the default jetstream is something like `app.bsky.feed.like`, and you're prepending the `target` account?

Does that mean you can subscribe to just updates on a particular user?
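
A rough sketch of why a prepended account would allow per-user subscriptions, assuming subjects shaped like `<target DID>.<collection>` (that layout is a guess, not confirmed in the thread). The `subject_matches` helper is hypothetical; it only mimics NATS wildcard rules (`*` = one token, `>` = one or more trailing tokens) so the idea can be tried without a server:

```python
def subject_matches(pattern: str, subject: str) -> bool:
    """Return True if a NATS-style subscription pattern matches a subject.

    `*` matches exactly one token, `>` matches one or more trailing tokens.
    """
    p_tokens = pattern.split(".")
    s_tokens = subject.split(".")
    for i, p in enumerate(p_tokens):
        if p == ">":
            # `>` must be the last token and needs at least one token left.
            return i == len(p_tokens) - 1 and len(s_tokens) > i
        if i >= len(s_tokens):
            return False
        if p != "*" and p != s_tokens[i]:
            return False
    return len(p_tokens) == len(s_tokens)


# Assumed subject layout: <target DID>.<collection>
user = "did:plc:alice"  # made-up DID for illustration
print(subject_matches(f"{user}.>", f"{user}.app.bsky.feed.like"))
print(subject_matches(f"{user}.>", "did:plc:bob.app.bsky.feed.like"))
print(subject_matches("*.app.bsky.feed.like", f"{user}.app.bsky.feed.like"))
```

One caveat: `.` separates subject tokens, so a DID that contains dots (e.g. `did:web:` with a domain) would need escaping before it could serve as a single token.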

Docker builds used to take 10 min, but simply replacing

```sh
python setup.py install
```

with

```sh
uv pip install . --system
```

in my Dockerfile cut the build down to just 26s!

Realized you get way more recording time if you disable real-time stacking! (duh)

Bonus: pug in heated bike trailer 😁

Fish Head Nebula was particularly nice!

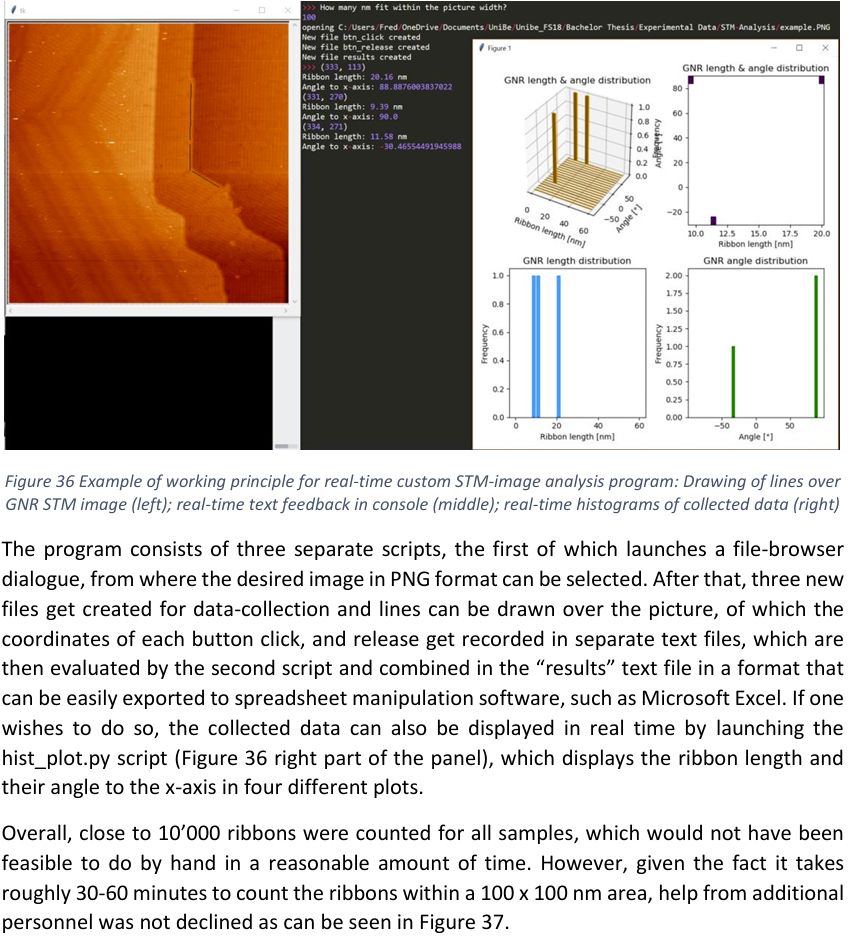

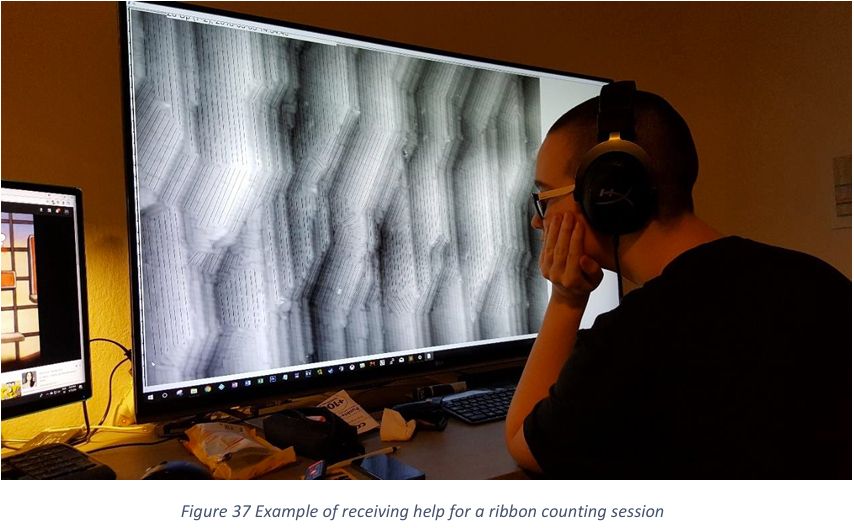

Was looking up something from old thesis and stumbled across my first time writing a Python GUI, and @ninasch.bsky.social helping to mark nanoribbons 😅

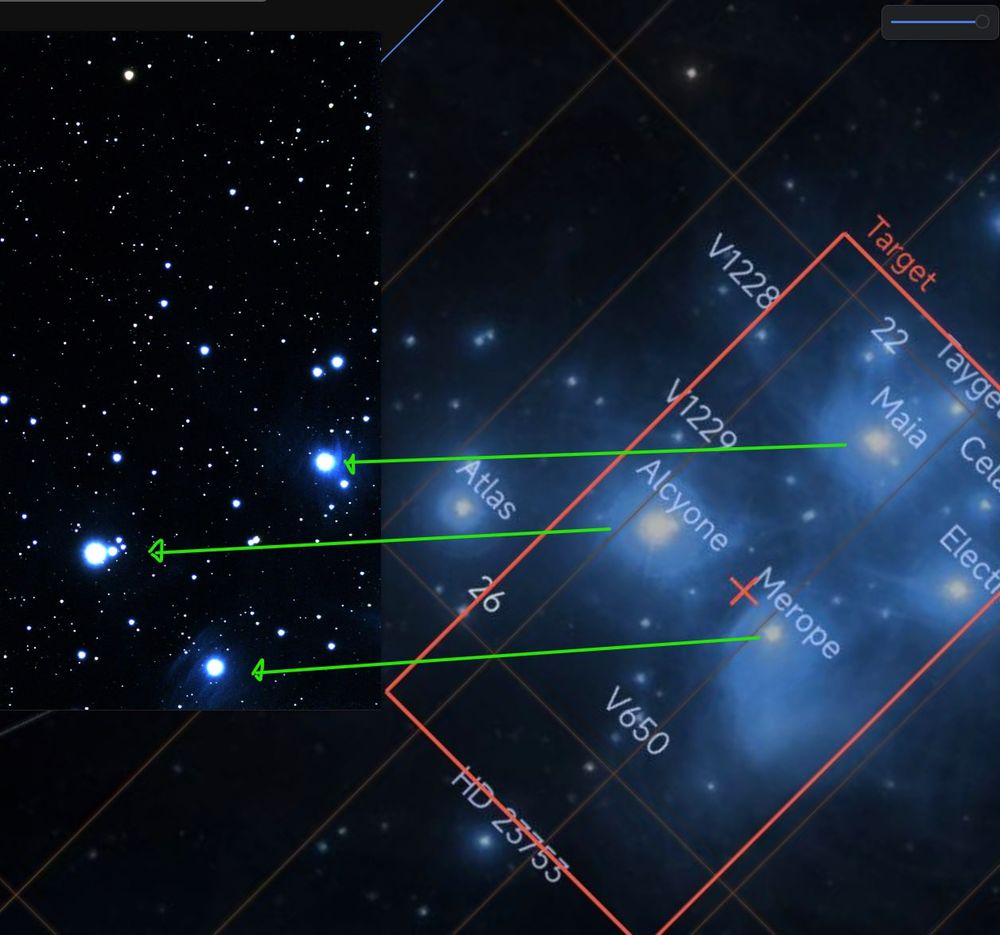

10 min integration of the Pleiades & postprocessing by @ninasch.bsky.social

Jetstream saves data in fixed-size blocks (default is 8 MB).

I suppose one could set up a watcher script that moves completed blocks to a different storage tier and symlinks them back into the original directory, to achieve tiered storage for Jetstream?
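
A minimal sketch of that watcher idea, untested against a live server. The `tier_done_blocks` name, the `*.blk` file pattern, and the "newest file is still being written" heuristic are all assumptions for illustration:

```python
import shutil
from pathlib import Path


def tier_done_blocks(hot_dir: Path, cold_dir: Path, pattern: str = "*.blk") -> list[Path]:
    """Move all but the newest block file to cold_dir, leaving symlinks behind."""
    cold_dir.mkdir(parents=True, exist_ok=True)
    # Only real files: blocks that were already tiered are symlinks and are skipped.
    blocks = sorted(
        (p for p in hot_dir.glob(pattern) if p.is_file() and not p.is_symlink()),
        key=lambda p: (p.stat().st_mtime, p.name),
    )
    moved = []
    for block in blocks[:-1]:  # assumption: the newest block is still open for writes
        target = cold_dir / block.name
        shutil.move(str(block), str(target))
        # Put a symlink where the block used to be, pointing at the cold copy.
        block.symlink_to(target.resolve())
        moved.append(target)
    return moved
```

Calling this from a cron job or a simple loop would cover the "watcher" part. Note there is a short window between the move and the symlink where the block is missing from the original directory, and whether the server tolerates files being swapped underneath it is an open question.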

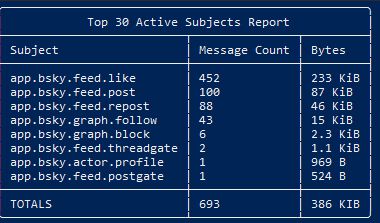

- subscribe to Bsky Jetstream

- filter by collections: app.bsky.*

- rewind cursor by 30s (in case of restarts)

- filter commit msgs

- use collection as subject name

- create stream

- use CID to deduplicate

- publish to local Jetstream instance
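
The filtering and mapping steps above could look roughly like this. It is a sketch without any network code: `rewind_cursor` and `to_nats_message` are made-up names, and the event layout (`kind`, `time_us`, `commit.collection`, `commit.cid`) is my reading of the Bsky Jetstream JSON format. `Nats-Msg-Id` is the header NATS JetStream uses for deduplication.

```python
import json

REWIND_US = 30 * 1_000_000  # Bsky Jetstream cursors are unix microseconds


def rewind_cursor(last_time_us: int, rewind_us: int = REWIND_US) -> int:
    """Step the cursor back so a restart replays the last 30 s."""
    return max(0, last_time_us - rewind_us)


def to_nats_message(event: dict):
    """Map a Jetstream event to (subject, headers, payload), or None to skip it."""
    if event.get("kind") != "commit":
        return None  # only commit messages are forwarded
    commit = event.get("commit", {})
    collection = commit.get("collection", "")
    cid = commit.get("cid")
    if not collection.startswith("app.bsky.") or cid is None:
        return None  # e.g. deletes carry no CID; skipped in this sketch
    headers = {"Nats-Msg-Id": cid}  # CID doubles as the dedup key
    return collection, headers, json.dumps(event).encode()
```

The replayed 30 s after a restart are what makes the CID-based dedup necessary. With a NATS client such as nats-py, the returned tuple would then be handed to something like `js.publish(subject, payload, headers=headers)` on a stream that covers `app.bsky.>`; that call is left out here so the sketch runs without a server.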

- Founded Material Indicators in 2019

- Gave up PhD in Materials Science to pursue own business full-time in 2020

- Wearing all hats, from data collection, analysis, and processing pipelines all the way to data serving

- Self-taught & self-hosting

So, now bumped up the volume nodes' RAM.

Is it possible Kafka just loses segments sometimes?

Pic 1 was live at the time (Kafka local storage).

Pic 2 is a replay (Kafka remote storage).

Pic 3 shows that Kafka managed to write the metadata, but the log file is 0 bytes for the missing parts???

The docker registry at home haha:
