mohawastaken.github.io

🧵 10/10

How do we achieve correct scaling with arbitrary gradient-based perturbation rules? 🤔

✨In 𝝁P, scale perturbations like updates in every layer.✨

💡Gradients and incoming activations are generally correlated, so they scale LLN-like (i.e., like the law of large numbers).

➡️ Perturbations and updates have similar scaling properties.

🧵 9/10
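The LLN-like behaviour can be illustrated with a toy sketch (not from the paper). The normalized inner product of two width-n vectors stays Θ(1) when the vectors are correlated, but shrinks like 1/√n when they are independent (CLT-like). Here perfect correlation (y = x) is an assumed stand-in for a gradient aligned with its incoming activations, and `mean_inner_product` is a hypothetical helper.

```python
import random


def mean_inner_product(n: int, correlated: bool, seed: int = 0) -> float:
    """Return (1/n) * sum_i x_i * y_i for Gaussian vectors of width n.

    correlated=True uses y = x (a toy stand-in for a gradient aligned with
    its incoming activations); correlated=False draws y independently.
    """
    rng = random.Random(seed)
    x = [rng.gauss(0.0, 1.0) for _ in range(n)]
    y = x if correlated else [rng.gauss(0.0, 1.0) for _ in range(n)]
    return sum(a * b for a, b in zip(x, y)) / n


for n in (100, 10_000, 100_000):
    # Correlated: stays near 1 (LLN-like, the raw sum grows like n).
    # Independent: shrinks like 1/sqrt(n) (CLT-like, the raw sum grows like sqrt(n)).
    print(n, round(mean_inner_product(n, True), 3), round(mean_inner_product(n, False), 4))
```

Because the correlated sum grows like n rather than √n, a weight change built from such correlated quantities needs a different width scaling than one built from independent noise.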

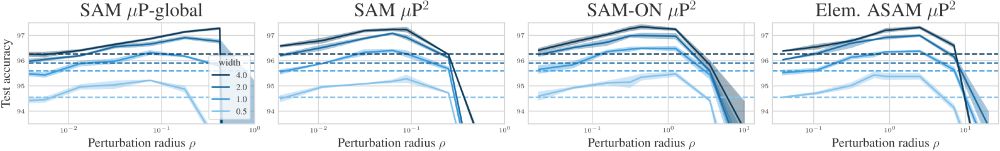

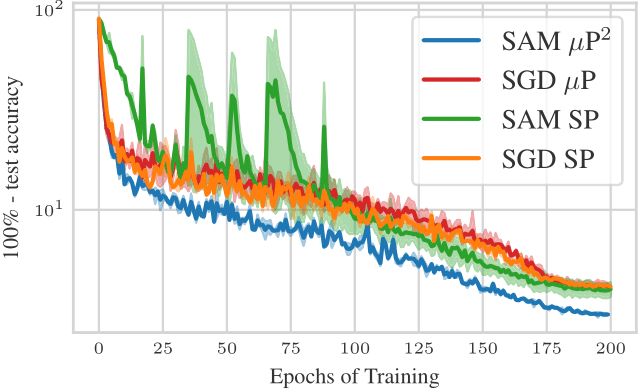

In experiments across MLPs and ResNets on CIFAR10 and ViTs on ImageNet1K, we show that 𝝁P² indeed jointly transfers optimal learning rate and perturbation radius across model scales and can improve training stability and generalization.

🧵 8/10

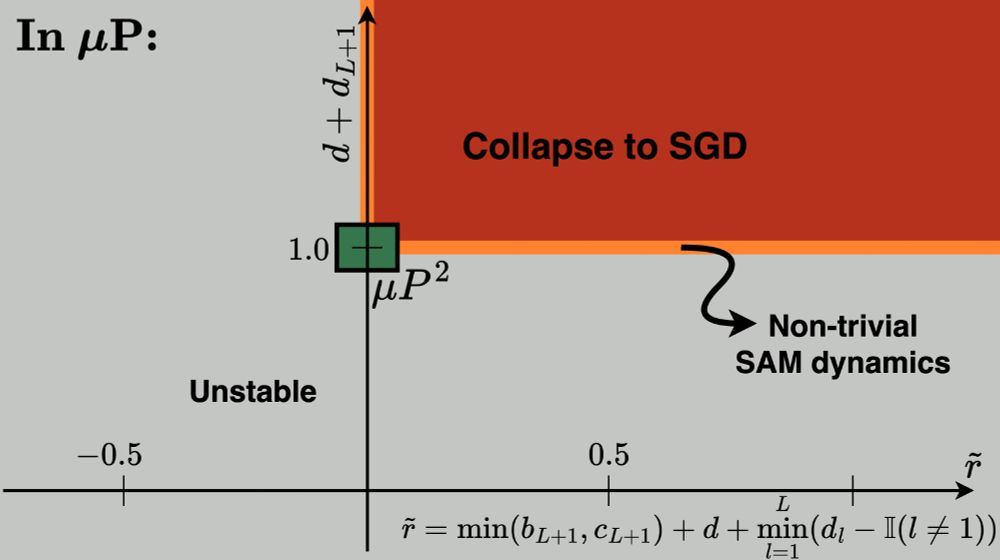

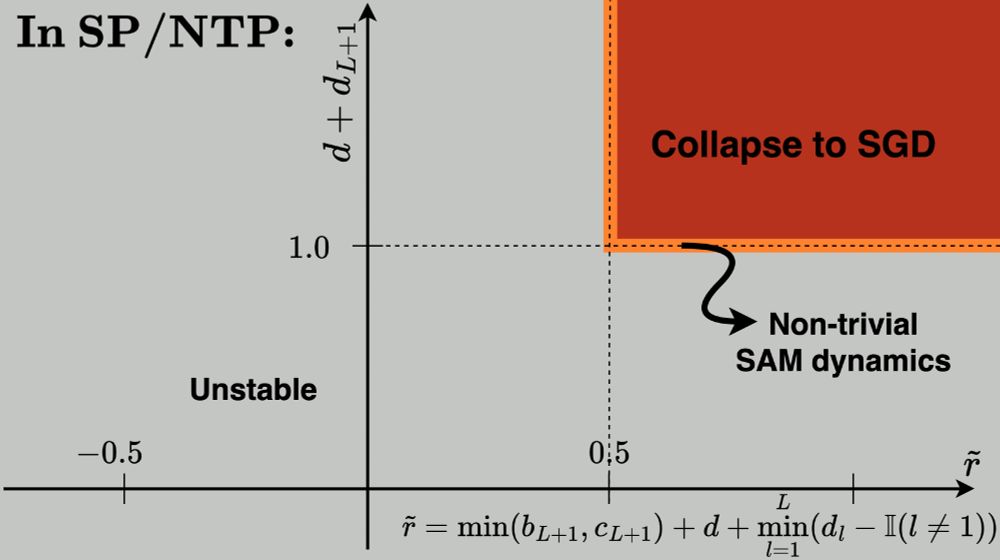

(1) stability,

(2) feature learning in all layers,

(3) effective perturbations in all layers.

We call it the ✨Maximal Update and Perturbation Parameterization (𝝁P²)✨.

🧵 7/10

For us, an ideal parametrization should ensure that updates and perturbations of all weights have a non-vanishing and non-exploding effect on the output function. 💡

We show that ...

🧵 6/10
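One way to make "non-vanishing and non-exploding" concrete is to measure how a quantity (e.g. the change in the output caused by perturbing one layer) scales with width n and fit the exponent. The sketch below does this with a log-log least-squares fit. `scaling_exponent`, `classify` and the tolerance are illustrative assumptions, and the magnitudes in the usage lines are synthetic, not measurements from the paper.

```python
import math
from typing import Sequence


def scaling_exponent(widths: Sequence[float], magnitudes: Sequence[float]) -> float:
    """Least-squares slope of log(magnitude) against log(width).

    A slope near 0 means the quantity is Theta(1) in width; negative means it
    vanishes and positive means it explodes as width grows.
    """
    if len(set(widths)) < 2:
        raise ValueError("need at least two distinct widths")
    xs = [math.log(w) for w in widths]
    ys = [math.log(m) for m in magnitudes]
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    return cov / var


def classify(widths: Sequence[float], magnitudes: Sequence[float], tol: float = 0.1) -> str:
    """Label a width-scaling trend; tol is an arbitrary illustrative threshold."""
    exponent = scaling_exponent(widths, magnitudes)
    if exponent < -tol:
        return "vanishing"
    if exponent > tol:
        return "exploding"
    return "effective"


widths = [256, 512, 1024, 2048]
print(classify(widths, [0.5 for _ in widths]))        # effective
print(classify(widths, [w ** -0.5 for w in widths]))  # vanishing
print(classify(widths, [w ** 0.5 for w in widths]))   # exploding
```

In this framing, a parametrization meets the criterion when every layer's update and perturbation effect lands in the "effective" bucket.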

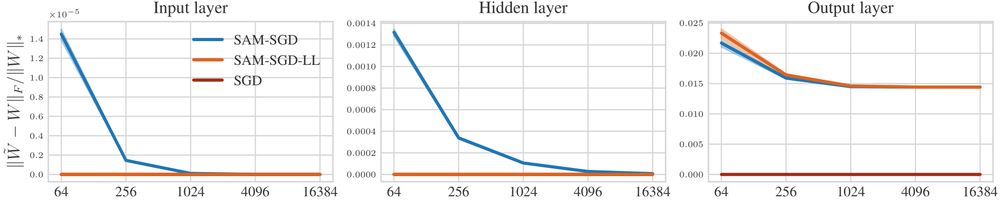

... we show that 𝝁P is not able to consistently improve generalization or to transfer SAM's perturbation radius, because it effectively only perturbs the last layer. ❌

💡So we need to allow layerwise perturbation scaling!

🧵 5/10
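For reference, here is a minimal pure-Python sketch of a SAM step with per-layer perturbation multipliers. Standard SAM perturbs all weights by ρ·g/‖g‖ using the global gradient norm; the hypothetical `layer_scales` dict rescales each layer's share of that perturbation (all ones recovers standard SAM). The multipliers are placeholders: the width-dependent choice made by 𝝁P² is not reproduced here.

```python
import math
from typing import Callable, Dict, List

Params = Dict[str, List[float]]


def sam_step(
    params: Params,
    grad_fn: Callable[[Params], Params],
    lr: float,
    rho: float,
    layer_scales: Dict[str, float],
) -> Params:
    """One SAM step with per-layer multipliers on the perturbation.

    Standard SAM: eps = rho * g / ||g||, with ||g|| the global gradient norm
    over all layers. Here layer l uses eps_l = rho * layer_scales[l] * g_l / ||g||,
    so layer_scales of all ones recovers standard SAM. The multipliers are
    hypothetical placeholders, not the muP^2 scalings.
    """
    grads = grad_fn(params)
    global_norm = math.sqrt(sum(v * v for g in grads.values() for v in g)) + 1e-12
    # Ascent step: move each layer toward higher loss by its scaled share.
    perturbed = {
        name: [w + rho * layer_scales[name] * g / global_norm
               for w, g in zip(ws, grads[name])]
        for name, ws in params.items()
    }
    # Descent step: gradient at the perturbed point, applied to the original weights.
    sam_grads = grad_fn(perturbed)
    return {
        name: [w - lr * g for w, g in zip(ws, sam_grads[name])]
        for name, ws in params.items()
    }


# Toy quadratic loss L(w) = 0.5 * ||w||^2, whose gradient is w itself.
def quadratic_grad(params: Params) -> Params:
    return {name: list(ws) for name, ws in params.items()}


params = {"hidden": [1.0, 2.0], "readout": [3.0]}
print(sam_step(params, quadratic_grad, lr=0.1, rho=0.5,
               layer_scales={"hidden": 1.0, "readout": 1.0}))
```

Setting `layer_scales={"hidden": 0.0, "readout": 1.0}` perturbs only the readout layer, which mimics the failure mode described above where effectively only the last layer is perturbed.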

🧵 4/10

🧵 3/10

arxiv.org/pdf/2411.00075

A thread on our Mamba scaling will be coming soon by 🔜 @leenacvankadara.bsky.social

🧵 2/10
