mohawastaken.github.io

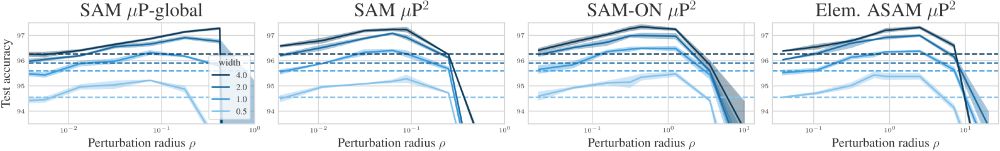

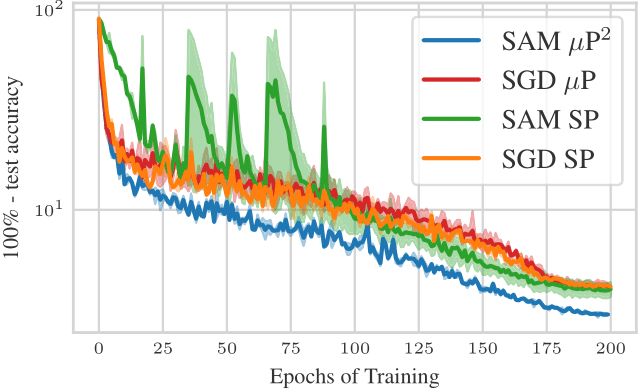

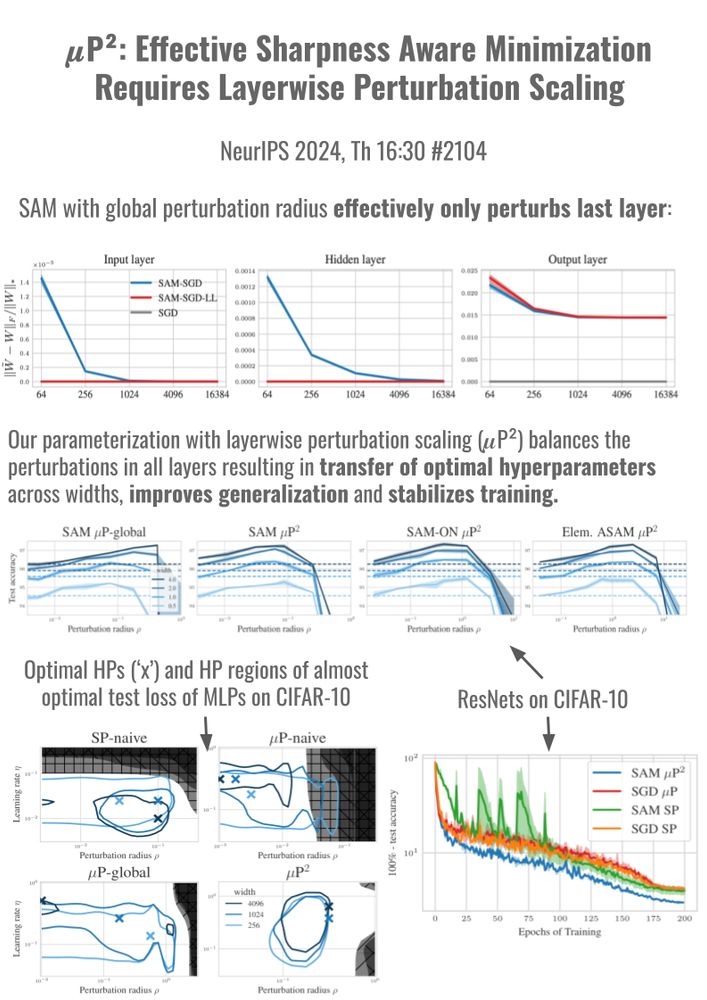

In experiments across MLPs and ResNets on CIFAR10 and ViTs on ImageNet1K, we show that 𝝁P² indeed jointly transfers optimal learning rate and perturbation radius across model scales and can improve training stability and generalization.

🧵 8/10

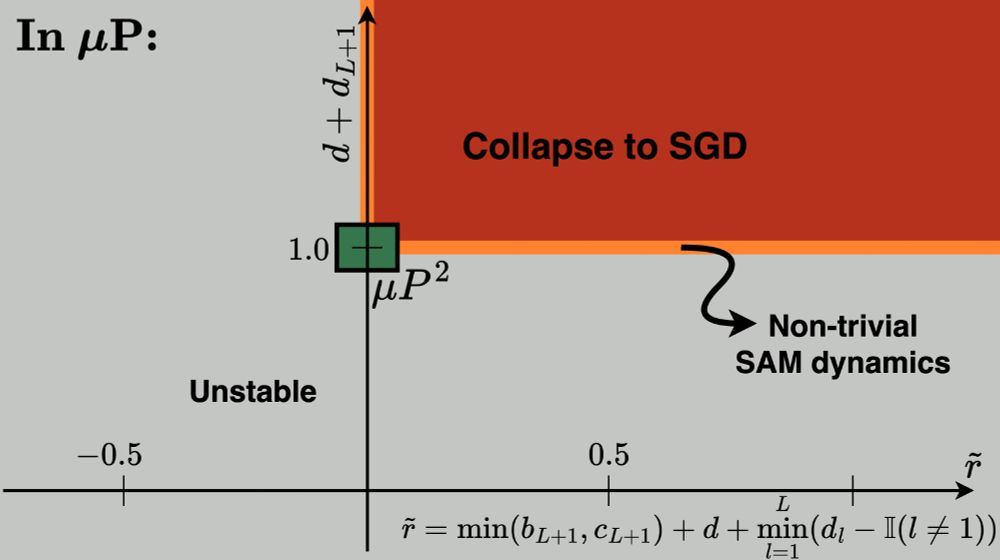

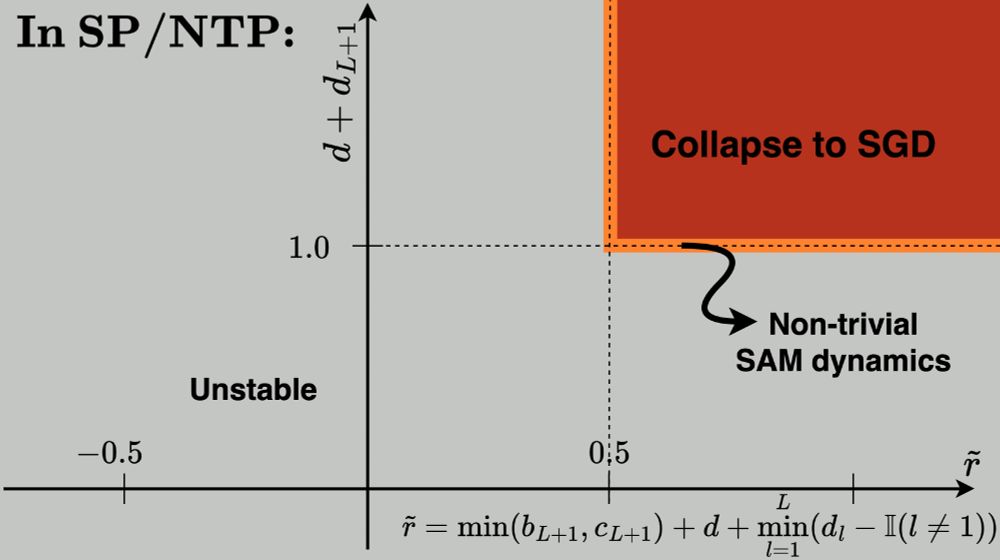

(1) stability,

(2) feature learning in all layers,

(3) effective perturbations in all layers.

We call it the ✨Maximal Update and Perturbation Parameterization (𝝁P²)✨.

🧵 7/10

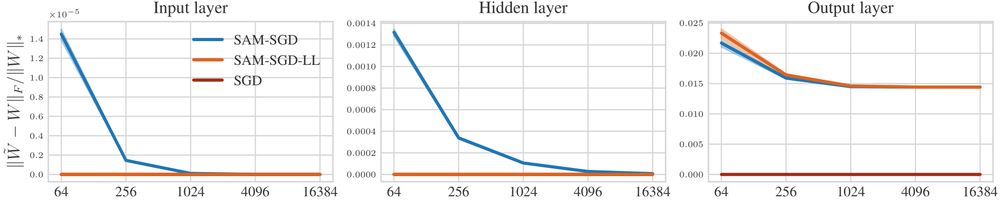

... we show that 𝝁P is not able to consistently improve generalization or to transfer SAM's perturbation radius, because it effectively only perturbs the last layer. ❌

💡So we need to allow layerwise perturbation scaling!

🧵 5/10
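
To make the "only perturbs the last layer" point concrete, here is a minimal NumPy sketch (not the paper's code). It assumes the usual SAM perturbation, the gradient rescaled to a global radius `rho`, and adds a hypothetical `layer_scales` argument for per-layer multipliers. The toy gradients and the multiplier values are made up for illustration; they are not the 𝝁P² scaling rules.

```python
import numpy as np

def sam_perturbation(grads, rho, layer_scales=None):
    """Return one SAM-style perturbation per layer.

    grads: list of gradient arrays, one per layer.
    rho: global perturbation radius.
    layer_scales: hypothetical per-layer multipliers (an assumption for
        illustration, not the paper's rule). None gives plain SAM with a
        single global gradient norm.
    """
    if layer_scales is None:
        layer_scales = [1.0] * len(grads)
    # Rescale each layer's gradient before the joint normalization.
    scaled = [s * g for s, g in zip(layer_scales, grads)]
    global_norm = np.sqrt(sum(float(np.sum(g ** 2)) for g in scaled))
    return [rho * g / (global_norm + 1e-12) for g in scaled]

# Toy setup (made-up numbers): the last layer's gradient dominates the norm.
toy_grads = [np.full((4, 4), 1e-3), np.full((4,), 1.0)]

plain = sam_perturbation(toy_grads, rho=0.1)
layerwise = sam_perturbation(toy_grads, rho=0.1, layer_scales=[1000.0, 1.0])

for name, eps in [("plain", plain), ("layerwise", layerwise)]:
    print(name, [float(np.linalg.norm(e)) for e in eps])
```

With a single global norm, the hidden layer in this toy setup receives almost none of the perturbation budget; the per-layer multipliers redistribute it. How those multipliers should depend on width is what the thread's scaling rules address, and this sketch does not attempt to reproduce them.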

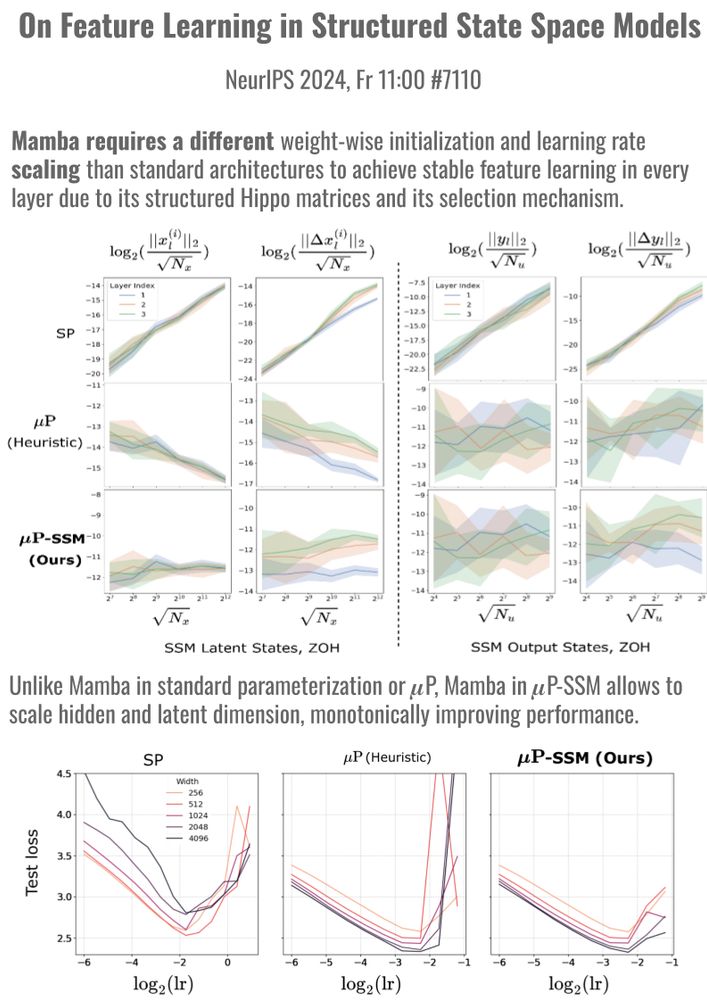

Thrilled to present 2 papers at #NeurIPS 🎉 that study width-scaling in Sharpness Aware Minimization (SAM) (Th 16:30, #2104) and in Mamba (Fr 11, #7110). Our scaling rules stabilize training and transfer optimal hyperparams across scales.

🧵 1/10
