MLCommons

@mlcommons.org

MLCommons is an AI engineering consortium, built on a philosophy of open collaboration to improve AI systems. Through our collective engineering efforts, we continually measure and improve AI technologies' accuracy, safety, speed, and efficiency.

Don’t miss #MLCommons Endpoints in San Diego, Dec 1–2!

Learn, connect, and shape the future of AI with top experts at Qualcomm Hall.

🗓 Dec 1–2 | 🎟 Free tickets available now!

www.eventbrite.com/e/mlcommons-...

#AI #MachineLearning #SanDiego

November 10, 2025 at 10:17 PM

IEEE Spectrum's analysis shows how our benchmarks capture the real industry challenge: LLMs scale exponentially while hardware improves incrementally.

This pattern highlights why evolving benchmarks are important. Stay tuned for MLPerf Training v5.1, out on 11/12.

spectrum.ieee.org/mlperf-trends

AI Model Growth Outpaces Hardware Improvements

AI training races are heating up as benchmarks get tougher.

spectrum.ieee.org

November 3, 2025 at 5:54 PM

🚨 NEW: We tested 39 AI models for security vulnerabilities.

Not a single one was as secure as it was "safe."

Today, we're releasing the industry's first standardized jailbreak benchmark. Here's what we found 🧵1/6

mlcommons.org/2025/10/ailu...

October 15, 2025 at 7:33 PM

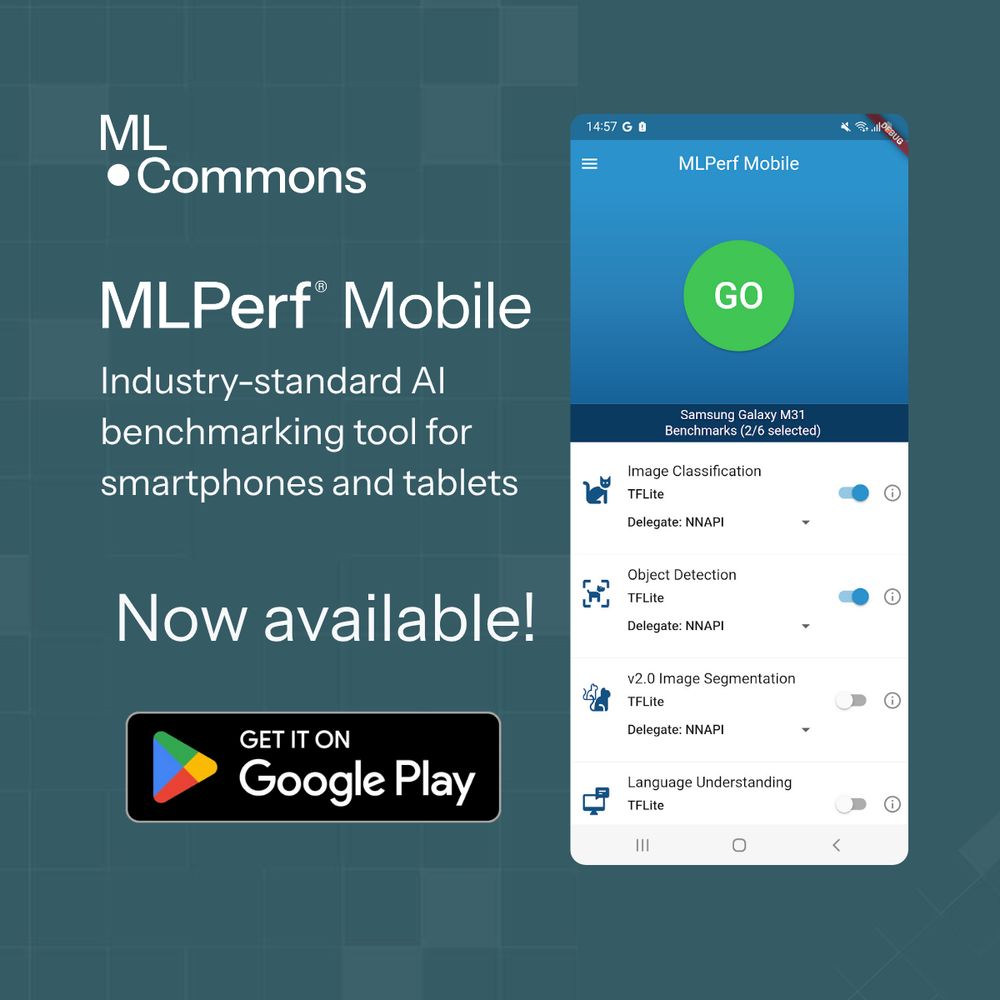

We've just released MLPerf Mobile version 5.0.2 on the Google Play Store and GitHub. This version adds support for devices based on the Samsung Exynos 2500 SoC.

play.google.com/store/apps/d...

MLPerf Mobile - Apps on Google Play

An AI benchmark for mobile devices

play.google.com

October 1, 2025 at 4:07 PM

How can LLMs reliably find, understand, & analyze datasets?

By combining:

📂 #Croissant – AI-ready #metadata

⚡ #MCP – agentic access to data & tools

Our new blog introduces Eclair: tools that let #LLMs discover, download & explore millions of #datasets.

mlcommons.org/2025/10/croi...

Metadata, Meet Datasets: Croissant and MCP in Action - MLCommons

mlcommons.org

October 1, 2025 at 3:31 PM

TinyML benchmarks finally address real-world deployment with MLCommons' new streaming benchmark in MLPerf Tiny v1.3. It tests 20 minutes of continuous wake-word detection while measuring power and duty cycle.

Technical deep dive: mlcommons.org/2025/09/mlpe... #MLPerf #TinyML #EdgeAI

A New TinyML Streaming Benchmark for MLPerf Tiny v1.3 - MLCommons

mlcommons.org

September 24, 2025 at 6:29 PM

New MLPerf Tiny v1.3 results!

New streaming wake-word detection test + 70 results measuring sub-100KB neural networks across hardware platforms.

Thanks to Qualcomm, ST Microelectronics, Syntiantcorp & Kai Jiang for pushing tiny ML forward.

View results: mlcommons.org/2025/09/mlpe...

MLCommons New MLPerf Tiny 1.3 Benchmark Results Released - MLCommons

New data reveals advances in tiny neural network performance

mlcommons.org

September 17, 2025 at 3:54 PM

We have released MLPerf Mobile v5.0.1 with support for MediaTek Dimensity 9400 SoCs and a few other improvements.

The app is available for Android phones via the Google Play Store and the MLCommons GitHub repo.

Let us know what you think!

t.co/715ENpV6W4

https://play.google.com/store/apps/details?id=org.mlcommons.android.mlperfbench

t.co

September 16, 2025 at 4:22 PM

Reposted by MLCommons

@mlcommons.org dropped MLPerf Inference v5.1 with three key benchmarks for enterprise AI: a reasoning model (DeepSeek-R1), speech recognition, and a smaller LLM for summarization tasks.

Catch up on this essential reading for AI decisions: www.techarena.ai/content/ai-b...

#MLCommons #MLPerf

AI Benchmarking Hits New Heights with MLPerf Inference 5.1 Release

Three groundbreaking inference benchmarks debut reasoning models, speech recognition, and ultra-low latency scenarios as 27 organizations deliver record results.

www.techarena.ai

September 9, 2025 at 3:27 PM

MLPerf Inference v5.1 results are live!

Record 27 organizations submitted 1,472 performance results across new and established AI workloads.

Three new benchmarks debut:

Reasoning with DeepSeek-R1

Speech to text with Whisper

Small LLM with Llama 3.1 8B

Read More: mlcommons.org/2025/09/mlpe...

September 9, 2025 at 6:15 PM

1/6

MLCommons & AVCC announce MLPerf Automotive v0.5 benchmark results—a major step for transparent, reproducible automotive AI performance data. mlcommons.org/2025/08/mlpe...

AVCC and MLCommons Release New MLPerf Automotive v0.5 Benchmark Results - MLCommons

AVCC® and MLCommons® announced new results for their new MLPerf® Automotive v0.5 benchmark

mlcommons.org

August 27, 2025 at 6:15 PM

1/ MLCommons just released results for the MLPerf Storage v2.0 benchmark—an industry-standard suite for measuring storage system performance in #ML workloads. This benchmark remains architecture-neutral, representative, and reproducible.

mlcommons.org/2025/08/mlpe...

New MLPerf Storage v2.0 Benchmark Results Demonstrate the Critical Role of Storage Performance in AI Training Systems - MLCommons

New checkpoint benchmarks provide “must-have” information for optimizing AI training

mlcommons.org

August 4, 2025 at 5:36 PM

MLPerf Client v1.0 is out! 🎉

The new benchmark for LLMs on PCs and client systems is now available—featuring expanded model support, new workload scenarios, and broad hardware integration.

Thank you to all submitters! #AMD, #Intel, @microsoft.com, #NVIDIA, #Qualcomm

mlcommons.org/2025/07/mlpe...

MLCommons Releases MLPerf Client v1.0: A New Standard for AI PC and Client LLM Benchmarking - MLCommons

MLCommons Releases MLPerf Client v1.0 with Expanded Models, Prompts, and Hardware Support, Standardizing AI PC Performance.

mlcommons.org

July 30, 2025 at 3:12 PM

MLCommons just launched MLPerf Mobile on the Google Play Store! 📱

Benchmark your Android device’s AI performance on real-world ML tasks with this free, open-source app.

Try it now: play.google.com/store/apps/d...

July 10, 2025 at 7:01 PM

Today, MLCommons is announcing a new collaboration with contributors from across academia, civil society, and industry to co-develop an open agent reliability evaluation standard to operationalize trust in agentic deployments.

🔗https://mlcommons.org/2025/06/ares-announce/

1/3

MLCommons Builds New Agentic Reliability Evaluation Standard in Collaboration with Industry Leaders - MLCommons

MLCommons and partners unite to create actionable reliability standards for next-generation AI agents.

mlcommons.org

June 27, 2025 at 7:07 PM

Reposted by MLCommons

We're all about acceleration! 😉

Watch @priya-kasimbeg.bsky.social & @fsschneider.bsky.social speedrun an explanation of the AlgoPerf benchmark, rules, and results all within a tight 5 minutes for our #ICLR2025 paper video on "Accelerating Neural Network Training". See you in Singapore!

April 3, 2025 at 11:15 AM

Companies are deploying AI tools that haven't been pressure-tested, and it's already backfiring.

In her new op-ed, our President, Rebecca Weiss, breaks down how industry-led AI reliability standards can help executives avoid costly, high-profile failures.

📖 More: bit.ly/3FP0kjg

@fastcompany.com

AI is posing immediate threats to your business. Here’s how to protect yourself

The AI threats your business is facing right now, and how to prevent them

www.fastcompany.com

June 20, 2025 at 4:03 PM

Call for Submissions!

#MLCommons & @AVCConsortium are accepting submissions for the #MLPerf Automotive Benchmark Suite! Help drive fair comparisons & optimize AI systems in vehicles. Focus is on camera sensor perception.

📅 Submissions close June 13th, 2025

Join: mlcommons.org/community/su...

June 5, 2025 at 6:12 PM

1/ The MLPerf Training v5.0 results are here—Let’s have a fresh look at the state of large-scale AI training! This round set a new record: 201 performance results from across the industry.

🔗https://mlcommons.org/2025/06/mlperf-training-v5-0-results/

June 4, 2025 at 3:35 PM

Reposted by MLCommons

Call for papers!

We are organising the 1st Workshop on Multilingual Data Quality Signals with @mlcommons.org and @eleutherai.bsky.social, held in tandem with @colmweb.org. Submit your research on multilingual data quality!

Submission deadline is 23 June, more info: wmdqs.org

1st Workshop on Multilingual Data Quality Signals

wmdqs.org

May 29, 2025 at 5:18 PM

MLCommons is partnering with Nasscom to develop globally recognized AI reliability benchmarks, including India-specific, Hindi-language evaluations. Together, we are advancing trustworthy AI.

🔗 mlcommons.org/2025/05/nass...

#AIForAll #IndiaAI #ResponsibleAI #Nasscom #MLCommons

May 29, 2025 at 3:07 PM

MLCommons' MLPerf Training suite has a new #pretraining #benchmark based on #Meta’s Llama 3.1 405B model. We use the same dataset with a bigger model and longer context, offering a more relevant and challenging measure for today’s #AI systems. mlcommons.org/2025/05/trai...

MLCommons MLPerf Training Expands with Llama 3.1 405B - MLCommons

mlcommons.org

May 5, 2025 at 4:21 PM

As AI models grow, storage is key to #ML performance. MLCommons' @dkanter.bsky.social joins #Nutanix’s Tech Barometer podcast to explain why and how the #MLPerf #Storage #benchmark guides smarter #data #infrastructure for #AI.

Listen: www.nutanix.com/theforecastb...

#DataStorage #EnterpriseIT

David Kanter MLPerf Measures AI Data Storage Performance

In this Tech Barometer podcast, MLCommons Co-founder David Kanter talks about creating the MLPerf benchmark to help enterprises understand AI workload performance of various data storage technologies.

www.nutanix.com

April 30, 2025 at 4:20 PM

1/ MLCommons announces the release of MLPerf Client v0.6, the first open benchmark to support NPU and GPU acceleration on consumer AI PCs.

Read more: mlcommons.org/2025/04/mlpe...

April 28, 2025 at 3:12 PM

#MLCommons just released two new French prompt #datasets for #AILuminate:

🔹Demo set: 1,200+ prompts, free for AI safety testing

🔹Practice set: 12,000 prompts for deeper evaluation (on request)

Both were made by native speakers and are ready for #ModelBench. Details: mlcommons.org/2025/04/ailu...

#AI #AIRR

MLCommons Releases French AILuminate Benchmark Demo Prompt Dataset to Github - MLCommons

MLCommons announces the release of two French language datasets for the AILuminate benchmark. A 1,200 prompt Creative-Commons licensed version, and 12,000 Practice Test prompts.

mlcommons.org

April 17, 2025 at 3:44 PM
