Learn, connect, and shape the future of AI with top experts at Qualcomm Hall.

🗓 Dec 1–2 | 🎟 Free tickets available now!

www.eventbrite.com/e/mlcommons-...

#AI #MachineLearning #SanDiego

Not a single one was as secure as it was "safe."

Today, we're releasing the industry's first standardized jailbreak benchmark. Here's what we found 🧵1/6

mlcommons.org/2025/10/ailu...

A record 27 organizations submitted 1,472 performance results across new and established AI workloads.

Three new benchmarks debut:

Reasoning with DeepSeek-R1

Speech to text with Whisper

Small LLM with Llama 3.1 8B

Read More: mlcommons.org/2025/09/mlpe...

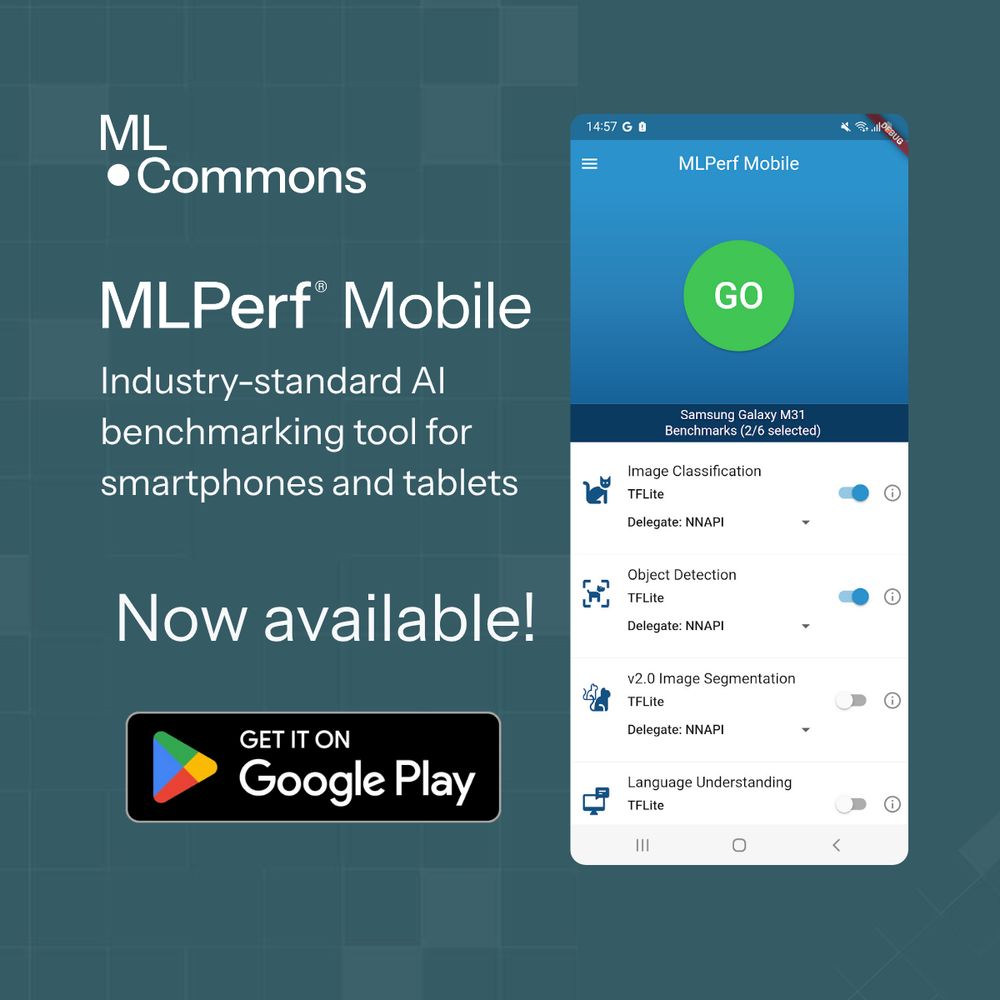

Benchmark your Android device’s AI performance on real-world ML tasks with this free, open-source app.

Try it now: play.google.com/store/apps/d...

#MLCommons & @AVCConsortium are accepting submissions for the #MLPerf Automotive Benchmark Suite! Help drive fair comparisons & optimize AI systems in vehicles. The focus is on camera-sensor perception.

📅 Submissions close June 13th, 2025

Join: mlcommons.org/community/su...

🔗 mlcommons.org/2025/05/nass...

#AIForAll #IndiaAI #ResponsibleAI #Nasscom #MLCommons

Read more: mlcommons.org/2025/04/mlpe...

mlcommons.org/2025/04/mlperf-inference-v5-0-results/

#MedPerf is an open framework for benchmarking medical AI using real-world private datasets to ensure transparency and privacy.

mlcommons.org/2025/03/medp...

#MedicalAI #smartcontracts

We are excited to add a new pretraining benchmark, Llama 3.1 405B, to showcase the latest innovations in AI.

To participate, join the working group:

mlcommons.org/working-grou...

The MLCommons Croissant working group co-chairs shared insights on Croissant's rapid adoption and future plans.

mlcommons.org/2025/02/croi...

Learn more: mlcommons.org/2025/02/ailu...

#ailuminate #parisaiactionsummit #aiverifyfoundation

1M+ hours of multilingual audio

821K+ hours of detected speech

89 languages

48+ TB of data

Empowering research in:

✅ Speech recognition

✅ Language ID

✅ Global communication tech

Learn more: mlcommons.org/2025/01/new-...

#nlp #datasets

mlcommons.org/2024/12/mlco...

(1/4)