Congrats to all the authors who did an amazing job! 3/4

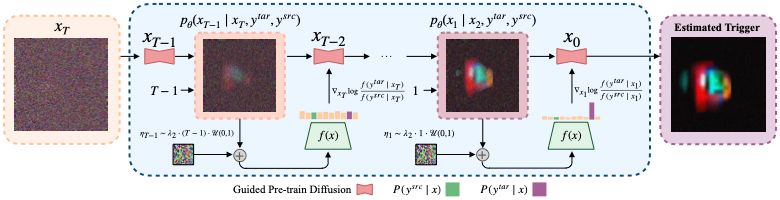

New! Accepted to #ICCV2025, we introduce #DISTIL, led by amazing PhD student @mirzious.bsky.social: a trigger-inversion method for DNNs that reconstructs malicious backdoor triggers 1/4

By @shaokaiye.bsky.social, Haozhe Qi, @trackingskills.bsky.social, and me!

📝 arxiv.org/abs/2503.18712

🤖 mmathislab.github.io/llavaction/

1/n

So proud of @mirzious.bsky.social - what an awesome way to kick off his first grad school project 👌👌

Check out the arXiv version of the paper and the open code (including a Python package) below ⬇️

TL;DR need more robustness?! #PutARingOnIt 💍

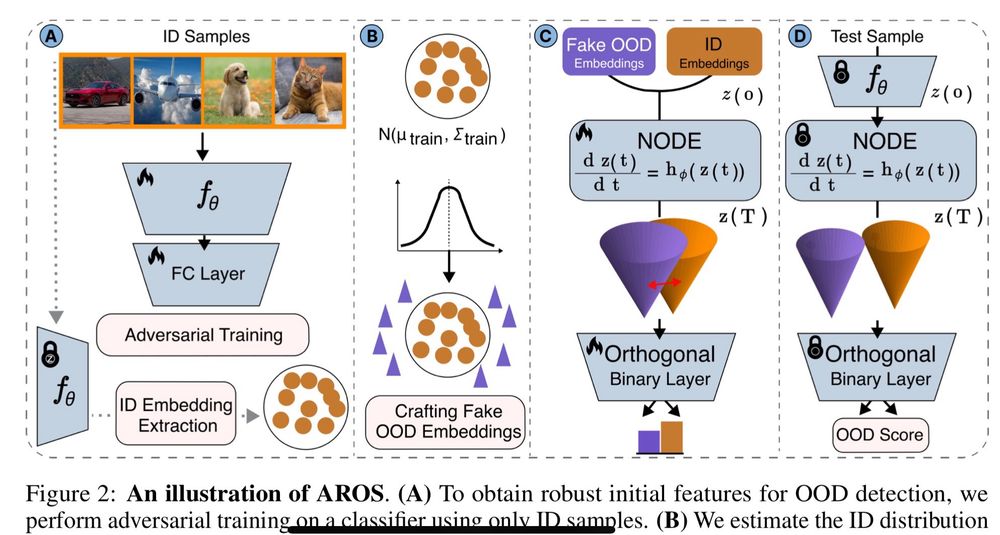

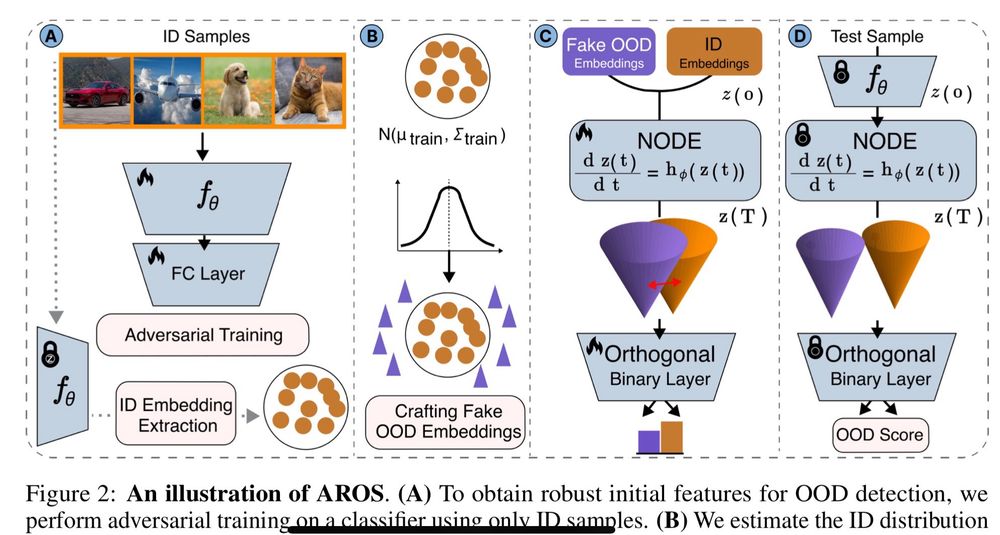

🔥 NEW work by @mirzious.bsky.social, a super talented PhD student in my group 🧠🧪 🚀

📊➡️ #AROS💍 arxiv.org/abs/2410.10744

1/2

github.com/AdaptiveMoto...

Demo it in Colab, etc!

Stars ⭐️ appreciated! Always helpful to know when to support a code base 😉🥰🍾

🔥🚀 we are excited to keep pushing this line of work 💪
