Building 3D humans.

https://ps.is.mpg.de/person/black

https://meshcapade.com/

https://scholar.google.com/citations?user=6NjbexEAAAAJ&hl=en&oi=ao

Conference papers:

PromptHMR: Promptable Human Mesh Recovery

yufu-wang.github.io/phmr-page/

DiffLocks: Generating 3D Hair from a Single Image using Diffusion Models

radualexandru.github.io/difflocks/

Turn any video into precise 3D people: occlusions, crowds, world coords solved. State-of-the-art accuracy. 💯

Artists, devs, researchers get instant digital bodies.

Visit our booth 1333 @ CVPR to learn more about PromptHMR!

#AI #3D #DigitalHumans

Learn more about it at our booth 1333 @ #CVPR2025!

Paper link + author names in thread.

#3DBody #SMPL #MachineLearning #HairTech #GenerativeAI @cvprconference.bsky.social

5 papers accepted, 5 going live 🚀

Catch PromptHMR, DiffLocks, ChatHuman, ChatGarment & PICO at Booth 1333, June 11–15.

Details about the papers in the thread! 👇

#3DBody #SMPL #GenerativeAI #MachineLearning

#mocapsuit #mocapvideo #3danimation

See how to turn video or text into ready-to-use 3D motion—no suits or markers needed.

Workshop: May 8, 10:00 AM.

Perfect for animation, VFX & game dev!

📍 Info: fmx.de/en/program/p...

#FMX2025 #3DMotion

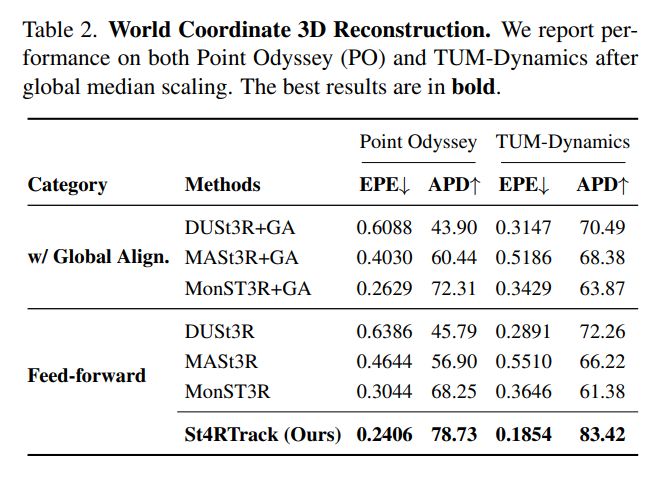

Haiwen Feng, @junyi42.bsky.social, @qianqianwang.bsky.social, Yufei Ye, Pengcheng Yu, @michael-j-black.bsky.social, Trevor Darrell, @akanazawa.bsky.social

DUSt3R-like framework

arxiv.org/abs/2504.13152

Watch Part 1 of our talk here 👉 youtu.be/0jCTiQMutow

🚶 Motion capture with MoCapade

🎮 Import directly into Unreal Engine

🎭 Retarget to any character

👀 Bonus: sneak peek at 3D hair & realtime single-cam mocap

🕴️ No suits. 📍 No markers. 🤳 Just one camera.

perceiving-systems.blog/en/news/what...

on Medium

medium.com/@black_51980...

This one-take Unreal animation uses Meshcapade to bring every character to life. No mocap suits, just seamless motion from start to finish.

Stylized, cinematic, and full of vibes; exactly how digital animation should feel 📹✨

#3DAnimation #MarkerlessMocap

Check out our latest work, PRIMAL, in collaboration with Max Planck Institute for Intelligent Systems & Stanford University - live demo at @officialgdc.bsky.social! 🎮

www.youtube.com/watch?v=-Gcp...

Link: github.com/eth-ait/Gaus...

Gaussian Garments uses a combination of 3D meshes and Gaussian splatting to reconstruct photorealistic simulation-ready digital garments from multi-view videos. 🧵

youtu.be/v3WzpjXpknc

Experience real-time reactive behavior—characters adapt instantly to your input. With controllable generative 3D motion, every move is unique. Real-time motion blending keeps it smooth.

🚀 See it at Booth C1821!

#3DAnimation #MotionGeneration #GameDev #GDC

Stream real-time motion to 3D characters in Unreal—no suits, no markers, just seamless animation. See it LIVE at Booth C1821!

#GDC2025 #MotionCapture #Realtime

From facial animation to 3D hair, creating digital humans has never been easier.

See it at #GDC2025! Visit Booth C1821 and experience motion, detail, expression.

#UnrealEngine #3DAnimation #MotionCapture #FacialAnimation #genAI #Meshcapade

See our demos & Unreal Engine plugin in action—live!

We’re bringing next-gen markerless motion capture to game dev.

Come experience it for yourself—can't wait to see you all at GDC 2025!

#GameDevelopment #UnrealEngine #MotionCapture #GDC

The podcast highlights the diversity of ideas shaping the future - something we’re very proud to be part of!

▶️ Watch on YouTube: www.youtube.com/watch?v=gN0X...

👯 Multi-person capture

🎥 3D camera motion

🙌 Detailed hands & gestures

⏫ New GLB, MP4 and SMPL export formats

All from a single camera. Any camera! 📹 🤳 📸

Ready, set, CAPTURE! 🏂

youtu.be/jizULlZTAR8

www.spiegel.de/wissenschaft...