language and vision in brains & machines

cognitive science 🤝 AI 🤝 cognitive neuroscience

michaheilbron.github.io

so how to represent the novel words? v. interesting test case

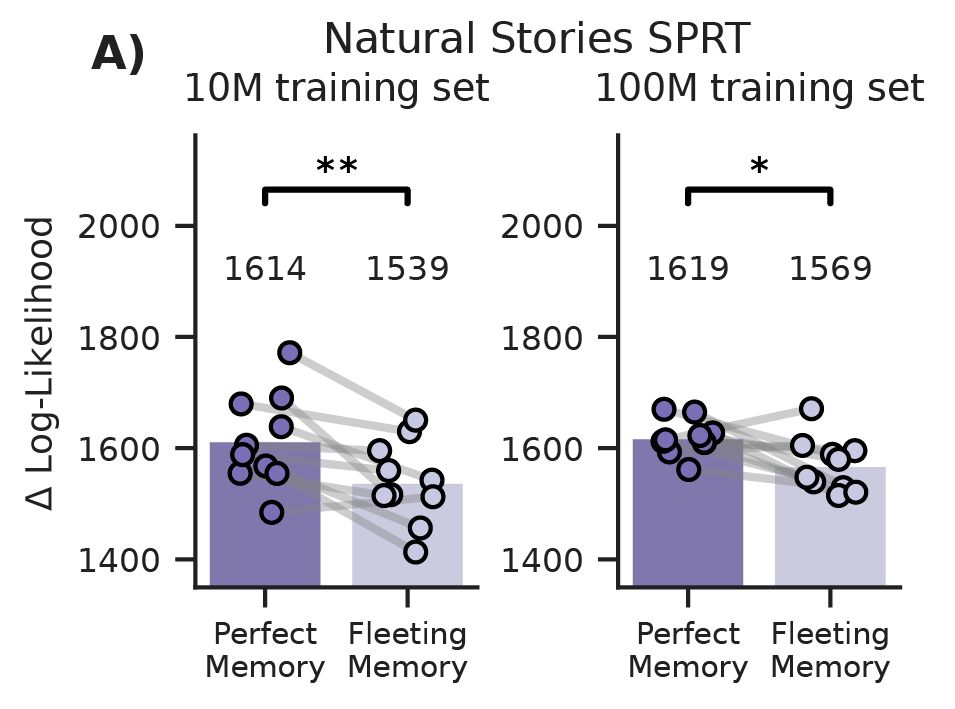

Yet they also reveal a curious distinction: a model with more human-like *constraints* is not necessarily more human-like in its predictions

Strikingly, these same models, which were demonstrably better at the language task, were worse at predicting human reading behaviour
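
The thread does not say how reading behaviour was predicted. A common approach in psycholinguistics, assumed here, is to compute each word's surprisal under the language model and regress reading times on it. The sketch below uses hypothetical function names and plain least squares; the study's actual regression model and fit metric may differ.

```python
import numpy as np

def surprisal_bits(token_probs):
    """Surprisal in bits, -log2 p(word | context), one value per word."""
    return -np.log2(np.asarray(token_probs, dtype=float))

def fit_reading_time_model(surprisal, reading_times):
    """Ordinary least squares: reading_time ~ b0 + b1 * surprisal.

    Returns the coefficients (intercept, slope) and the Pearson correlation
    between the fitted values and the observed reading times.
    """
    s = np.asarray(surprisal, dtype=float)
    rt = np.asarray(reading_times, dtype=float)
    X = np.column_stack([np.ones_like(s), s])  # intercept column + surprisal
    coefs, *_ = np.linalg.lstsq(X, rt, rcond=None)
    fitted = X @ coefs
    r = np.corrcoef(fitted, rt)[0, 1]
    return coefs, r
```

Under this framing, "worse at predicting reading behaviour" means the model's surprisal values explain less of the variance in human reading times, even if the model is better at next-token prediction.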

Fleeting memory models achieved better next-token prediction (lower loss) and better syntactic knowledge (higher accuracy) on the BLiMP benchmark

This was consistent across seeds and for both the 10M- and 100M-word training sets
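
For readers unfamiliar with the two metrics, here is a minimal sketch of how they are typically computed, assuming per-token log-probabilities are already available from the model. The function names are hypothetical. Loss is the mean negative log-likelihood of the observed next tokens; BLiMP scores a model on minimal pairs, counting a pair as correct when the grammatical sentence receives the higher total log-probability.

```python
import numpy as np

def mean_next_token_loss(token_logprobs):
    """Average negative log-likelihood (in nats) over all predicted tokens."""
    return -float(np.mean(token_logprobs))

def sentence_logprob(token_logprobs):
    """Total log-probability of a sentence: the sum of its token log-probs."""
    return float(np.sum(token_logprobs))

def blimp_accuracy(pairs):
    """pairs: iterable of (logp_grammatical, logp_ungrammatical) sentence scores.

    A pair counts as correct when the grammatical sentence gets a higher
    log-probability than its ungrammatical minimal-pair counterpart.
    """
    pairs = list(pairs)
    correct = sum(good > bad for good, bad in pairs)
    return correct / len(pairs)
```

Lower loss and higher BLiMP accuracy are the two senses in which the fleeting-memory models were "better at the language task".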

Human memory has a brief 'echoic' buffer that perfectly preserves the immediate past. When we added this (a short window of perfect retention before the decay), the pattern flipped

Now, fleeting memory *helped* (lower loss)
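
A sketch of the echoic buffer as a change to the memory-decay factor, assuming distances are counted in tokens (0 = the current token) and that `buffer_len` and `alpha` are free hyperparameters. Both names are hypothetical, and the exact parameterisation used in the study may differ.

```python
import numpy as np

def decay_with_echoic_buffer(distances, alpha, buffer_len):
    """Multiplicative memory factor for each query-key distance (hypothetical form).

    Tokens up to `buffer_len` positions back are retained perfectly (factor 1.0);
    beyond the buffer, access falls off as a power law in the distance past
    the buffer edge. With buffer_len = 0 this is a plain (1 + d) ** -alpha decay.
    """
    d = np.asarray(distances, dtype=float)
    past_buffer = np.maximum(0.0, d - buffer_len)  # 0 for every token inside the buffer
    return (1.0 + past_buffer) ** (-alpha)
```

In an attention layer, this factor would scale the attention weights (followed by renormalisation), so the most recent tokens are untouched and only older ones fade.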

We applied a power-law memory decay to the self-attention scores, simulating how access to past words fades over time, and ran controlled experiments on the developmentally realistic BabyLM corpus
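
One plausible way to apply a power-law decay to self-attention, sketched for a single causal head in NumPy. The decay form `(1 + distance) ** -alpha` and the parameter name `alpha` are assumptions for illustration; the study's exact formulation is not given in the thread.

```python
import numpy as np

def decayed_causal_attention(q, k, v, alpha=1.0):
    """Single-head causal self-attention with an assumed power-law memory decay.

    q, k: arrays of shape (seq_len, d); v: array of shape (seq_len, d_v).
    Attention weights are scaled by (1 + distance) ** -alpha and renormalised,
    implemented by adding -alpha * log(1 + distance) to the scores pre-softmax.
    """
    n, d = q.shape
    scores = q @ k.T / np.sqrt(d)                      # (n, n) raw attention scores
    i = np.arange(n)[:, None]                          # query positions
    j = np.arange(n)[None, :]                          # key positions
    dist = i - j                                       # how far back each key lies
    scores = scores - alpha * np.log1p(np.maximum(dist, 0))  # power-law decay in log space
    scores = np.where(dist >= 0, scores, -np.inf)      # causal mask: no future tokens
    scores = scores - scores.max(axis=1, keepdims=True)  # numerical stability
    weights = np.exp(scores)
    weights /= weights.sum(axis=1, keepdims=True)      # softmax over visible keys
    return weights @ v, weights
```

Adding the log of the decay factor before the softmax is equivalent to multiplying the post-softmax weights by `(1 + distance) ** -alpha` and renormalising; with `alpha = 0` the function reduces to ordinary causal attention.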

Would the blessing of fleeting memory still hold in transformer language models?

Fleeting memory may actually help with learning language, by forcing a focus on the recent past and providing an incentive to discover abstract structure rather than surface details

First results from a much larger project on visual and linguistic meaning in brains and machines, with many collaborators; more to come!

t.ly/TWsyT

t.ly/fTJqy
