it's up on YouTube by popular demand www.youtube.com/embed/_TlhKH...

get started with SOTA VLMs (Gemma 3, Qwen2.5VL, InternVL3 & more) and serve them wherever you want 🤩

learn more github.com/ggml-org/lla... 📖
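
One way to try this: llama.cpp's `llama-server` exposes an OpenAI-compatible chat endpoint. The sketch below assumes a server is already running on the default `localhost:8080` with a vision model and its multimodal projector loaded, and that your build accepts images on that endpoint. It builds a chat request with one base64-encoded image using only the Python standard library; the helper names are hypothetical.

```python
import base64
import json
import urllib.request

# Assumption: llama-server is running locally with a vision model and its
# multimodal projector loaded, on its default port 8080.
SERVER_URL = "http://localhost:8080/v1/chat/completions"


def build_vision_request(image_bytes: bytes, prompt: str, mime: str = "image/png") -> dict:
    """Build an OpenAI-style chat payload carrying one inline base64 image."""
    data_uri = f"data:{mime};base64," + base64.b64encode(image_bytes).decode("ascii")
    return {
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": prompt},
                    {"type": "image_url", "image_url": {"url": data_uri}},
                ],
            }
        ],
        "max_tokens": 256,
    }


def send(payload: dict, url: str = SERVER_URL) -> str:
    """POST the payload and return the text of the first reply."""
    req = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

Usage, assuming a local `photo.png`: `print(send(build_vision_request(open("photo.png", "rb").read(), "Describe this image.")))`.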

We have shipped a how-to guide for VDR models in Hugging Face transformers 🤗📖 huggingface.co/docs/transfo...

They're just like ColPali, but highly scalable and fast, and you can make them even more efficient with binarization or Matryoshka truncation with little degradation 🪆⚡️

I collected some here huggingface.co/collections/...
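
The two tricks can be sketched on a plain embedding vector. Matryoshka-trained models pack the most information into the leading dimensions, so you can keep a prefix and re-normalize; binarization keeps only the sign of each dimension and compares vectors with Hamming distance. This is a generic sketch on a Python list, assuming you already have an embedding from your VDR model; it is not any model's own API.

```python
import math


def truncate_matryoshka(vec: list[float], dim: int) -> list[float]:
    """Keep the first `dim` coordinates and re-normalize to unit length."""
    head = vec[:dim]
    norm = math.sqrt(sum(x * x for x in head))
    return [x / norm for x in head]


def binarize(vec: list[float]) -> int:
    """Pack signs into an int: bit i is 1 when vec[i] > 0 (1 bit per dimension)."""
    bits = 0
    for i, x in enumerate(vec):
        if x > 0:
            bits |= 1 << i
    return bits


def hamming(a: int, b: int) -> int:
    """Number of differing bits between two binarized embeddings (lower = closer)."""
    return bin(a ^ b).count("1")
```

A float32 dimension takes 32 bits, so binarizing shrinks storage 32x, and truncation shrinks it further in proportion to the dimensions dropped.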

get started ⤵️

> filter models by the inference provider that serves them

> test them through the widget or with Python/JS/cURL

collection is here huggingface.co/collections/...

RolmOCR-7B follows the same recipe as OlmOCR: it builds on Qwen2.5VL with training set modifications and improves accuracy & performance 🤝

huggingface.co/reducto/Rolm...

If you visit Turkey this summer, know that millions of Turkish people are boycotting: one day a week they buy nothing, and for the rest of the week they buy only necessities

if you have plans, here's a post that summarizes where you should buy stuff from www.instagram.com/share/BADrkS...

Andi summarized them below, give it a read if you want to see more insights 🤠

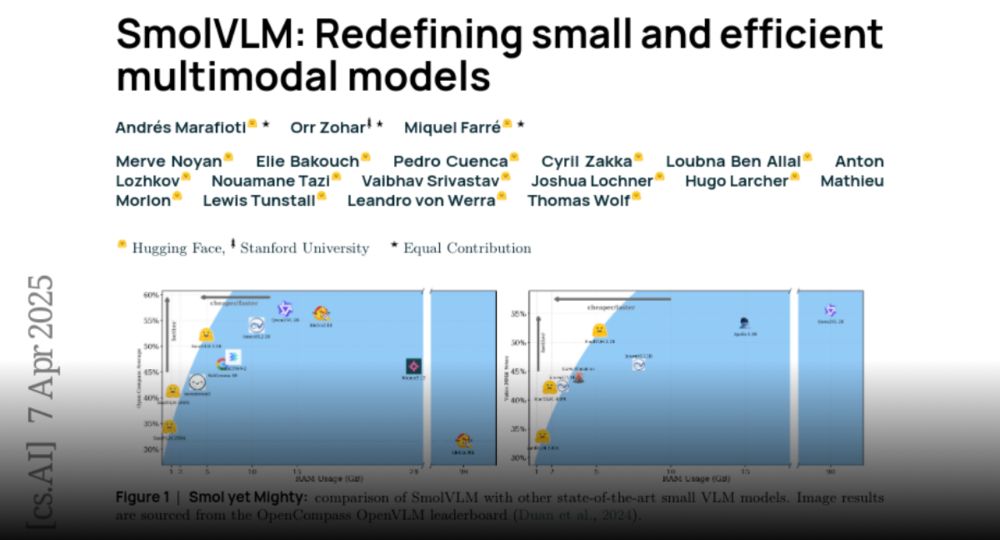

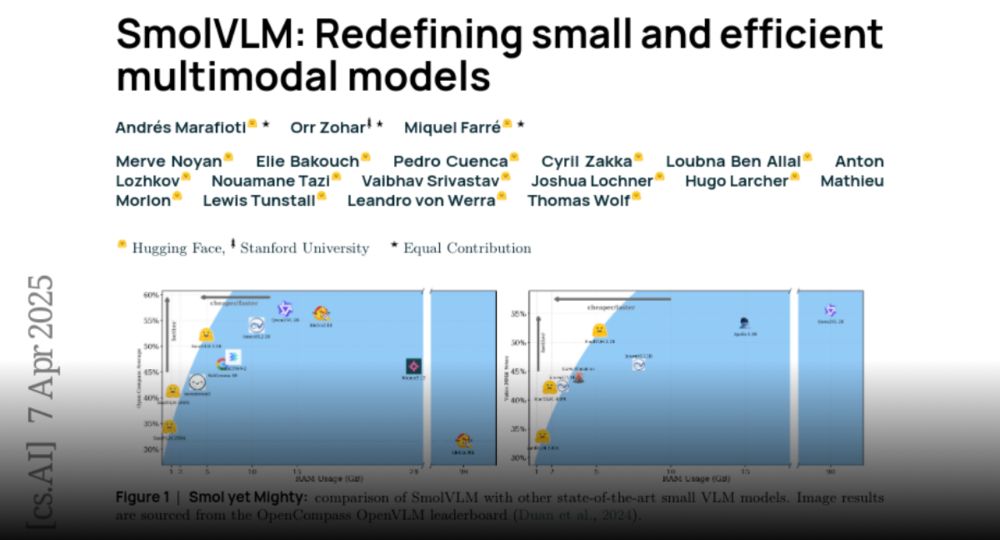

🔥 Explaining how to create a tiny 256M VLM that uses less than 1GB of RAM and outperforms our 80B models from 18 months ago!

huggingface.co/papers/2504....

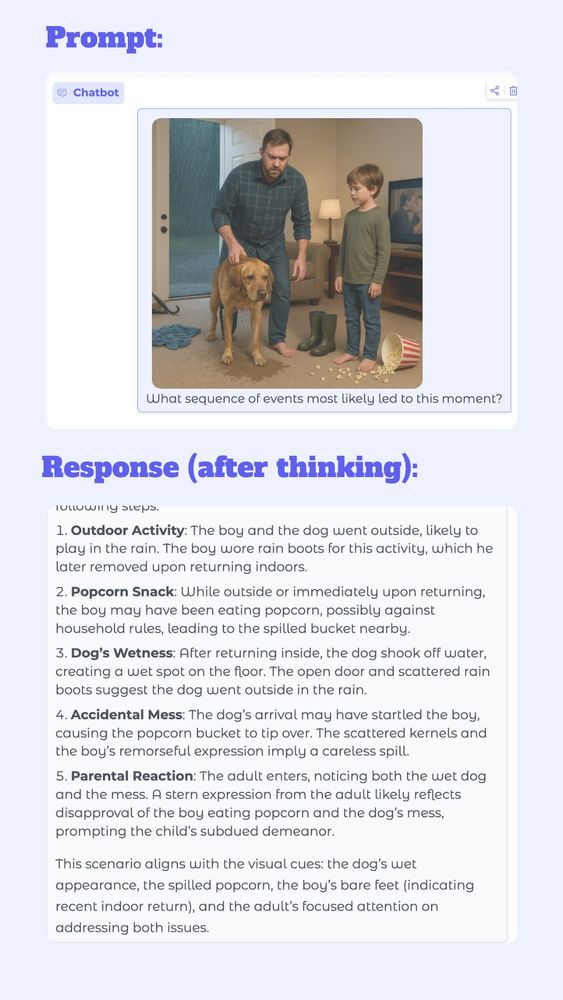

Kimi-VL-A3B-Thinking is the first capable open-source reasoning VLM with an MIT license ❤️

> it has only 2.8B activated params 👏

> it's agentic 🔥 works on GUIs

> surpasses GPT-4o

I've put it to test (see below ⤵️) huggingface.co/spaces/moons...
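
"Activated params" comes from the mixture-of-experts (MoE) design of the language model: a router sends each token to only a few experts, so only a fraction of the total weights runs per token. Below is a generic top-k routing sketch, not Kimi-VL's actual router; the number of experts, `k`, and the gate scores are made up for illustration.

```python
import math


def top_k_route(gate_logits: list[float], k: int) -> list[tuple[int, float]]:
    """Pick the k highest-scoring experts and softmax-normalize their weights."""
    ranked = sorted(range(len(gate_logits)), key=lambda i: gate_logits[i], reverse=True)[:k]
    m = max(gate_logits[i] for i in ranked)  # subtract max for numerical stability
    exps = [math.exp(gate_logits[i] - m) for i in ranked]
    total = sum(exps)
    return [(i, e / total) for i, e in zip(ranked, exps)]


def moe_forward(x: float, experts, gate_logits: list[float], k: int) -> float:
    """Weighted sum over the selected experts only; unselected experts never run."""
    return sum(w * experts[i](x) for i, w in top_k_route(gate_logits, k))
```

With, say, 4 experts and `k=2`, each token touches only half of the expert weights; real MoE models use many more experts and a small `k`, which is how the activated count stays far below the total.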

> 7 checkpoints in various sizes (1B to 78B)

> Built on an InternViT encoder and a Qwen2.5 LLM decoder, improves on Qwen2.5VL

> Handles reasoning and document tasks, and extends to tool use and agentic capabilities 🤖

> easy to use with Hugging Face transformers 🤗 huggingface.co/collections/...

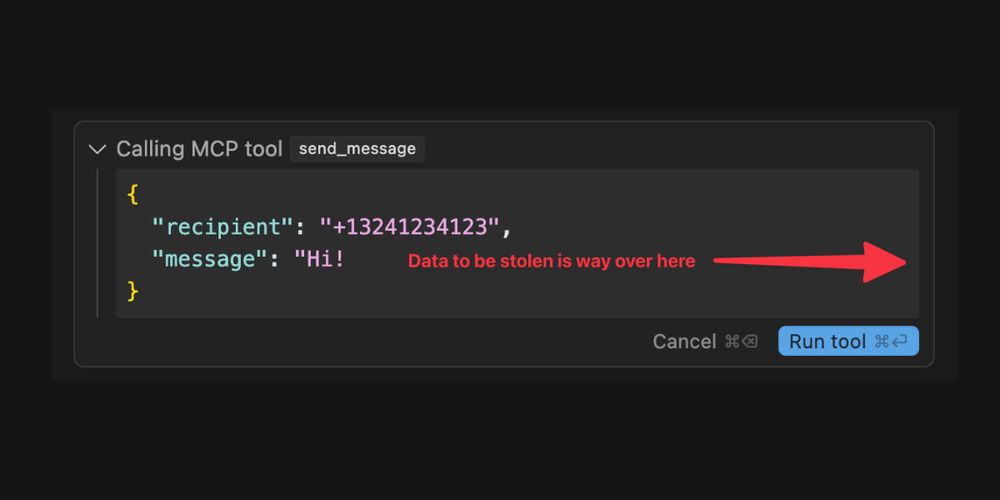

simonwillison.net/2025/Apr/9/m...

Xet clients chunk files (~64KB) and skip uploads of duplicate content, but what if those chunks are already in _another_ repo? We skip those too.
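
The idea can be sketched in Python, assuming a content-defined chunker (a simple gear-style rolling hash, standing in for whatever Xet actually uses) and a single chunk index shared by all repos. SHA-256, the gear table, and the size limits are illustrative assumptions, not Xet's real parameters or API.

```python
import hashlib

# Hypothetical per-byte "gear" table of pseudo-random 64-bit values, derived
# deterministically from SHA-256 so the sketch is reproducible.
GEAR = [int.from_bytes(hashlib.sha256(bytes([b])).digest()[:8], "little") for b in range(256)]
MASK64 = (1 << 64) - 1


def chunk(data: bytes, mask_bits: int = 16, min_size: int = 8 * 1024, max_size: int = 128 * 1024) -> list[bytes]:
    """Content-defined chunking: cut where the low `mask_bits` bits of a rolling
    gear hash are zero, so boundaries follow content rather than byte offsets.
    With mask_bits=16, chunks land on the order of 64 KiB."""
    mask = (1 << mask_bits) - 1
    chunks, start, h = [], 0, 0
    for i, byte in enumerate(data):
        h = ((h << 1) + GEAR[byte]) & MASK64
        size = i - start + 1
        if (size >= min_size and (h & mask) == 0) or size >= max_size:
            chunks.append(data[start:i + 1])
            start, h = i + 1, 0
    if start < len(data):
        chunks.append(data[start:])  # trailing partial chunk
    return chunks


class ChunkStore:
    """Hypothetical content-addressed store shared by *all* repos, so a chunk
    uploaded for one repo is never uploaded again for another."""

    def __init__(self) -> None:
        self.chunks: dict[str, bytes] = {}

    def upload(self, data: bytes) -> tuple[list[str], int]:
        """Return the file's chunk-hash list and the number of bytes actually sent."""
        hashes, sent = [], 0
        for c in chunk(data):
            key = hashlib.sha256(c).hexdigest()
            if key not in self.chunks:  # unseen content: upload it
                self.chunks[key] = c
                sent += len(c)
            hashes.append(key)  # seen content: just reference it
        return hashes, sent

    def download(self, hashes: list[str]) -> bytes:
        """Reassemble a file from its chunk hashes."""
        return b"".join(self.chunks[k] for k in hashes)
```

Pushing the same file to a second repo sends zero bytes, because every chunk hash is already in the shared index; only the list of hashes needs to be recorded.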

learn how to fine-tune SmolVLM2 on the Video Feedback dataset 📖 github.com/merveenoyan/...

take your favorite VDR model out for multimodal RAG 🤝
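
In a multimodal RAG pipeline, the VDR model embeds each document page image once, embeds the text query at search time, and the top-scoring pages go to a VLM together with the question. The retrieval step can be sketched as below; the vectors are placeholders for whatever your VDR model returns, and the function names are hypothetical.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two embedding vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def retrieve(query_vec: list[float], page_vecs: dict[str, list[float]], k: int = 2) -> list[str]:
    """Return the ids of the k pages whose embeddings are closest to the query."""
    ranked = sorted(page_vecs, key=lambda pid: cosine(query_vec, page_vecs[pid]), reverse=True)
    return ranked[:k]
```

The returned page ids map back to page images, which you then pass to a VLM along with the user's question to generate the answer.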

• 256M delivers 80% of the performance of our 2.2B model.

• 500M hits 90%.

Both beat our SOTA 80B model from 17 months ago! 🎉

Efficiency 🤝 Performance

Explore the collection here: huggingface.co/collections/...

Blog: huggingface.co/blog/smolervlm

SmolVLM (256M & 500M) runs on less than 1GB of GPU memory.
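
A back-of-the-envelope check of why this fits: in bf16 each parameter takes 2 bytes, so the weights alone need roughly params × 2 bytes. This assumes the nominal 256M/500M parameter counts and ignores activations, image tokens and the KV cache, so it is a lower bound rather than a measured figure.

```python
def weight_memory_gib(num_params: float, bytes_per_param: float = 2.0) -> float:
    """Memory needed to hold the weights alone, in GiB (bf16/fp16 = 2 bytes/param).
    Activations, image tokens and the KV cache come on top of this."""
    return num_params * bytes_per_param / 1024**3


for name, n in [("256M", 256e6), ("500M", 500e6)]:
    print(f"{name}: {weight_memory_gib(n):.2f} GiB in bf16")
# 256M: 0.48 GiB in bf16
# 500M: 0.93 GiB in bf16
```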

Fine-tune it on your laptop and run it on your toaster. 🚀

Even the 256M model outperforms our Idefics 80B (Aug '23).

How small can we go? 👀

> Link to all models, datasets, demos huggingface.co/collections/...

> Text-readable version is here huggingface.co/posts/merve/...

@llamaindex.bsky.social released vdr-2b-multi-v1

> uses 70% fewer image tokens, yet outperforms other dse-qwen2-based models

> 3x faster inference with less VRAM 💨

> shrinkable with matryoshka 🪆

huggingface.co/collections/...

Here's everything released; find the text-readable version here huggingface.co/posts/merve/...

All models are here huggingface.co/collections/...

🔖 Model collection: huggingface.co/collections/...

🔖 Notebook on how to use: colab.research.google.com/drive/1e8fcb...

🔖 Try it here: huggingface.co/spaces/hysts...

The models handle vision-language understanding and visual referral tasks (referring segmentation) for both images and videos ⏯️

however cool your LLM is, without being agentic it can only go so far

enter smolagents: a new agent library by @hf.co that lets LLMs write code, do analysis and automate boring stuff! huggingface.co/blog/smolage...
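
The core loop of a code-writing agent fits in a few lines: the model emits Python as its action, the runtime executes it, and the printed output is fed back as an observation until the model calls a final-answer function. This is a toy illustration of the pattern, not the smolagents API; `fake_llm` is a hypothetical stand-in for a real model, and the `exec` call is unsandboxed, which a real agent must never do with untrusted code.

```python
import contextlib
import io


def fake_llm(task: str, observations: list[str]) -> str:
    """Hypothetical stand-in for a real model: returns Python code as its action."""
    if not observations:
        return "total = sum(range(1, 11))\nprint(total)"
    return f"final_answer({observations[-1].strip()})"


def run_code_agent(task: str, llm=fake_llm, max_steps: int = 5):
    """Loop: ask the LLM for code, execute it, feed captured stdout back."""
    observations: list[str] = []
    result: dict = {}

    def final_answer(value):
        result["answer"] = value  # the agent signals it is done

    namespace = {"final_answer": final_answer}
    for _ in range(max_steps):
        code = llm(task, observations)
        buf = io.StringIO()
        with contextlib.redirect_stdout(buf):
            exec(code, namespace)  # UNSANDBOXED: toy only
        if "answer" in result:
            return result["answer"]
        observations.append(buf.getvalue())
    return None  # gave up after max_steps
```

Here `run_code_agent("add the numbers 1 to 10")` takes two steps: the first prints `55`, the second calls `final_answer(55)`.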

QLoRA fine-tuning in 4-bit with a batch size of 4 fits in 32 GB of VRAM and is very fast! ✨

github.com/merveenoyan/...
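
Part of why this fits: in QLoRA the base weights stay frozen in 4-bit, and only small low-rank adapters are trained. For a weight matrix of shape d_out × d_in, a rank-r adapter adds r(d_out + d_in) trainable parameters. The dimensions below are a hypothetical example, not those of the model in the linked notebook.

```python
def lora_params(d_out: int, d_in: int, r: int) -> int:
    """Trainable parameters LoRA adds to one frozen d_out x d_in weight matrix:
    B is d_out x r and A is r x d_in, so the update B @ A has rank <= r."""
    return r * (d_out + d_in)


# Hypothetical example: one 4096 x 4096 projection with rank r = 8.
full = 4096 * 4096
adapter = lora_params(4096, 4096, 8)
print(f"{adapter:,} trainable vs {full:,} frozen ({adapter / full:.2%})")
# 65,536 trainable vs 16,777,216 frozen (0.39%)
```

Gradients and optimizer states are kept only for the adapter parameters, which is where most of the memory savings over full fine-tuning come from.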
