at The University of Edinburgh - mehmetaygun.github.io

My group works on topics in vision, machine learning, and AI for climate.

For more information and details on how to get in touch, please check out my website:

homepages.inf.ed.ac.uk/omacaod

My group works on topics in vision, machine learning, and AI for climate.

For more information and details on how to get in touch, please check out my website:

homepages.inf.ed.ac.uk/omacaod

📢 Call for Participation: sites.google.com/g.harvard.ed...

Confirmed speakers from Mistral AI, DeepMind, ETH Zurich, LSCE & more.

Looking forward to meeting and discussing in Copenhagen!

📢 Call for Participation: sites.google.com/g.harvard.ed...

Confirmed speakers from Mistral AI, DeepMind, ETH Zurich, LSCE & more.

Looking forward to meeting and discussing in Copenhagen!

Do automated monocular depth estimation methods use similar visual cues to humans?

To learn more, stop by poster #405 in the evening session (17:00 to 19:00) today at #CVPR2025.

Do automated monocular depth estimation methods use similar visual cues to humans?

To learn more, stop by poster #405 in the evening session (17:00 to 19:00) today at #CVPR2025.

We will be in room 104E.

#FGVC #CVPR2025

@fgvcworkshop.bsky.social

We will be in room 104E.

#FGVC #CVPR2025

@fgvcworkshop.bsky.social

It consists of 6 depth related tasks to assess response to visual cues.

They test 20 pretrained models.

As expected DAv2, DUSt3R, DINOv2 do well, but SigLIP is not bad

danier97.github.io/depthcues/

It consists of 6 depth related tasks to assess response to visual cues.

They test 20 pretrained models.

As expected DAv2, DUSt3R, DINOv2 do well, but SigLIP is not bad

danier97.github.io/depthcues/

The CMT page is now online and we are looking forward to your submissions on AI systems for expert-tasks and fine-grained analysis.

More info at: sites.google.com/view/fgvc12/...

#CVPR #CVPR2025 #AI

@cvprconference.bsky.social

CALL FOR PAPERS: sites.google.com/view/fgvc12/...

We discuss domains where expert knowledge is typically required and investigate artificial systems that can efficiently distinguish a large number of very similar visual concepts.

#CVPR #CVPR2025 #AI

The CMT page is now online and we are looking forward to your submissions on AI systems for expert-tasks and fine-grained analysis.

More info at: sites.google.com/view/fgvc12/...

#CVPR #CVPR2025 #AI

@cvprconference.bsky.social

The scope of the workshop is quite broad, e.g. fine-grained learning, multi-modal, human in the loop, etc.

More info here:

sites.google.com/view/fgvc12/...

@cvprconference.bsky.social

CALL FOR PAPERS: sites.google.com/view/fgvc12/...

We discuss domains where expert knowledge is typically required and investigate artificial systems that can efficiently distinguish a large number of very similar visual concepts.

#CVPR #CVPR2025 #AI

The scope of the workshop is quite broad, e.g. fine-grained learning, multi-modal, human in the loop, etc.

More info here:

sites.google.com/view/fgvc12/...

@cvprconference.bsky.social

Also really proud that our SINR species range estimation models serve as the underlying technology for this.

Spatial Implicit Neural Representations for Global-Scale Species Mapping

arxiv.org/abs/2306.02564

To mark this milestone, we're making model-generated distribution data even more accessible. Explore, analyze, and use this data to power biodiversity research! 🌍🔍

www.inaturalist.org/posts/106918

Also really proud that our SINR species range estimation models serve as the underlying technology for this.

Spatial Implicit Neural Representations for Global-Scale Species Mapping

arxiv.org/abs/2306.02564

Gabe is a fantastic PhD advisor!

Gabe is a fantastic PhD advisor!

mlsystems.uk

Application deadline is 22nd January (next week).

mlsystems.uk

Application deadline is 22nd January (next week).

CALL FOR PAPERS: sites.google.com/view/fgvc12/...

We discuss domains where expert knowledge is typically required and investigate artificial systems that can efficiently distinguish a large number of very similar visual concepts.

#CVPR #CVPR2025 #AI

CALL FOR PAPERS: sites.google.com/view/fgvc12/...

We discuss domains where expert knowledge is typically required and investigate artificial systems that can efficiently distinguish a large number of very similar visual concepts.

#CVPR #CVPR2025 #AI

Max Hamilton @max-ham.bsky.social will be at #NeurIPS this week to present it.

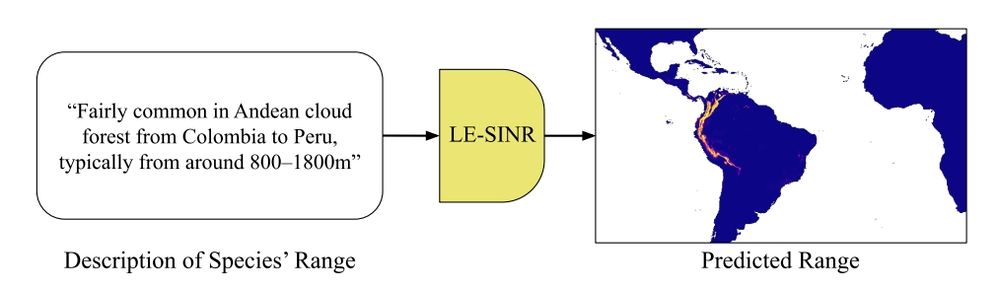

✨Introducing Le-SINR: A text to range map model that can enable scientists to produce more accurate range maps with fewer observations.

Thread 🧵

Max Hamilton @max-ham.bsky.social will be at #NeurIPS this week to present it.

Introducing INQUIRE: A benchmark testing if AI vision-language models can help scientists find biodiversity patterns- from disease symptoms to rare behaviors- hidden in vast image collections.

Thread👇🧵