Postdoctoral Research Scientist @ ETH Zürich

↳ https://matteosaponati.github.io

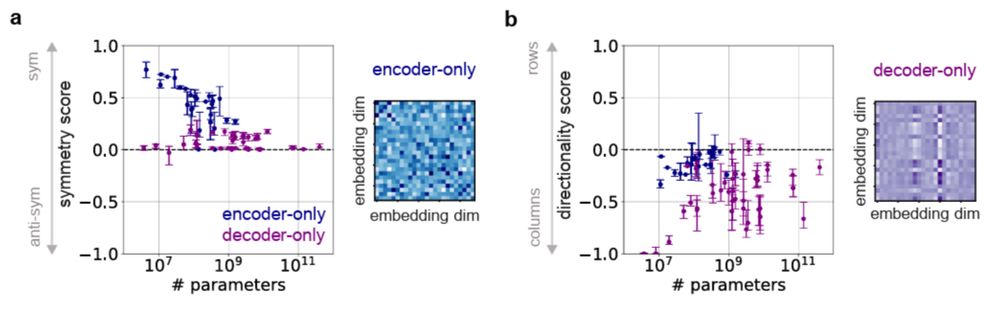

- Initializing self-attention matrices symmetrically improves training efficiency for bidirectional models, leading to faster convergence.

This suggests that imposing structure at initialization can enhance training dynamics.
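A minimal sketch of one way to do this. It assumes that "symmetric initialization" means tying the key projection to the query projection at init (W_k = W_q), so the bilinear form W_q W_kᵀ starts out exactly symmetric. The thread does not spell out the scheme, so treat this as an illustration. The helpers `init_qk` and `symmetry_score` are hypothetical. The score (‖M_s‖² − ‖M_a‖²)/‖M‖² is one illustrative measure: it is +1 for a symmetric matrix and −1 for an antisymmetric one.

```python
import random


def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(a):
    return [list(row) for row in zip(*a)]


def init_matrix(rows, cols, rng, scale):
    """Gaussian init with standard deviation `scale`."""
    return [[rng.gauss(0.0, scale) for _ in range(cols)] for _ in range(rows)]


def init_qk(d_model, d_head, rng, symmetric=True):
    """Hypothetical helper returning (W_q, W_k), each d_model x d_head.

    Assumption: symmetric=True copies W_q into W_k, so W_q @ W_k^T is symmetric
    at initialization; afterwards the two matrices can be trained independently.
    """
    scale = d_model ** -0.5
    w_q = init_matrix(d_model, d_head, rng, scale)
    if symmetric:
        w_k = [row[:] for row in w_q]
    else:
        w_k = init_matrix(d_model, d_head, rng, scale)
    return w_q, w_k


def frob_sq(a):
    """Squared Frobenius norm."""
    return sum(x * x for row in a for x in row)


def symmetry_score(m):
    """Illustrative score (||M_s||^2 - ||M_a||^2) / ||M||^2 in [-1, 1].

    M_s and M_a are the symmetric and antisymmetric parts of the square matrix M.
    """
    mt = transpose(m)
    n = len(m)
    sym = [[(m[i][j] + mt[i][j]) / 2 for j in range(n)] for i in range(n)]
    anti = [[(m[i][j] - mt[i][j]) / 2 for j in range(n)] for i in range(n)]
    return (frob_sq(sym) - frob_sq(anti)) / frob_sq(m)


rng = random.Random(0)
w_q, w_k = init_qk(16, 4, rng, symmetric=True)
m_sym = matmul(w_q, transpose(w_k))
w_q2, w_k2 = init_qk(16, 4, rng, symmetric=False)
m_rand = matmul(w_q2, transpose(w_k2))
print(symmetry_score(m_sym))   # 1.0: W_q W_k^T is exactly symmetric
print(symmetry_score(m_rand))  # independent init: well below 1
```

In a real model the same idea would apply per attention head. Whether the tie is kept only at initialization or enforced during training is a design choice the thread leaves open.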

⬇️

- ModernBERT, GPT, LLaMA3, Mistral, etc.

- Text, vision, and audio models

- Different model sizes and architectures

⬇️