PSID & DPAD (Nat Neuro 2021 & 2024), IPSID (PNAS 2024), PGLDM (NeurIPS 2024), BRAID (ICLR 2025)

📜 Paper: openreview.net/pdf?id=k4KVh...

💻 Code: github.com/shanechiLab/...

✅ Self-attention improves neural-behavior predictions by learning long-range patterns while convolutions learn local ones

✅ Two-stage learning improves behavior prediction by disentangling behaviorally relevant dynamics

✅ Operates directly on raw images & avoids preprocessing.

✅ Combines self-attention and convolutional layers to model both global and local patterns.

✅ Uses two-stage learning of convolutional RNNs (ConvRNNs) to disentangle behaviorally relevant and other neural dynamics.

See Parsa Vahidi at #ICLR2025!

📍Poster Session 5, Hall 3+Hall 2B #57 | Sat 4/26 | 10AM - 12:30PM

📜 openreview.net/forum?id=3us...

💻 github.com/ShanechiLab/...

✅ Disentangles intrinsic behaviorally relevant neural dynamics from input, neural-specific & behavior-specific dynamics

✅ Captures nonlinearity

It is a multi-stage RNN: each stage learns a subtype of dynamics & combines a predictor network w/ a generative network to learn intrinsic dynamics.

📍 Poster Session 1, Hall 3 + Hall 2B, #68 | Thu, Apr 24 | 10 AM - 12:30 PM

Poster: iclr.cc/virtual/2025...

📜 💻 Paper and code: openreview.net/pdf?id=mkDam...

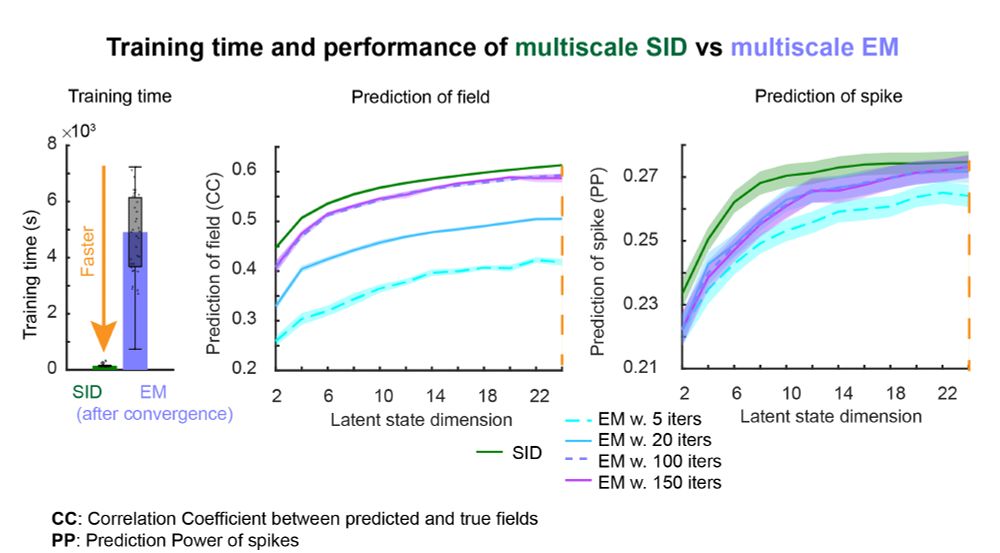

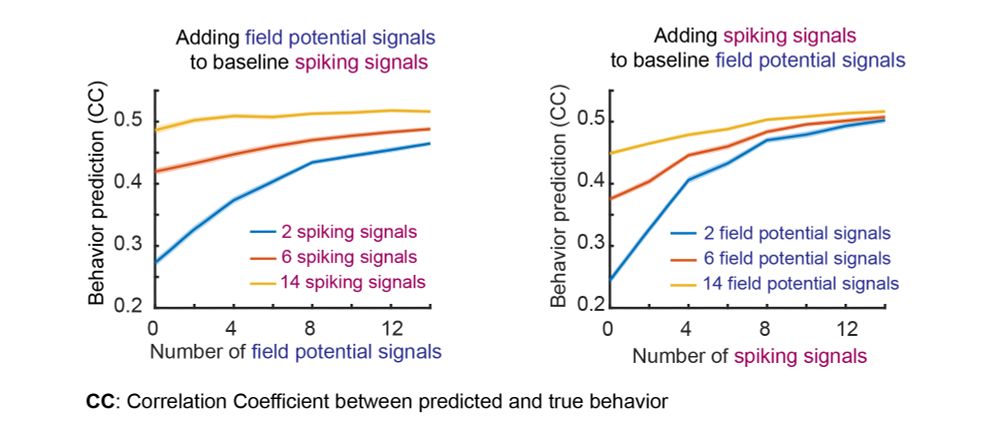

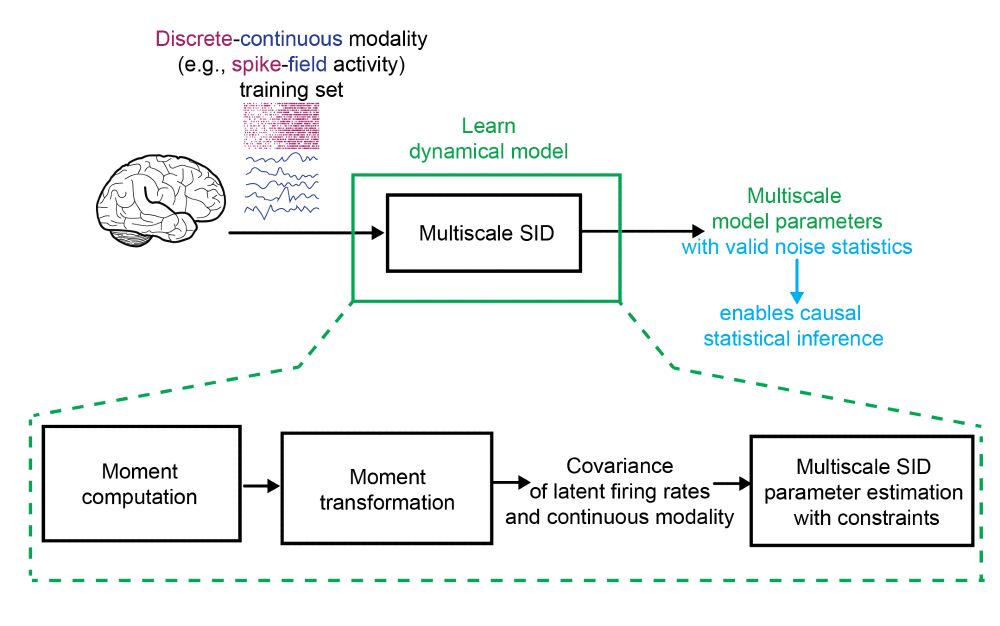

👏 Congrats Parima Ahmadipour & Omid Sani. Thanks to collaborator Bijan Pesaran.

📜 Paper: iopscience.iop.org/article/10.1...

💻 Code: github.com/ShanechiLab/...

See first author Lucine Oganesian present at East Exhibit Hall A-C #3808, Fri Dec 13, 11am-2pm @neuripsconf.bsky.social

📜 Paper: openreview.net/pdf?id=DupvY...

💻 Code: github.com/ShanechiLab/...
