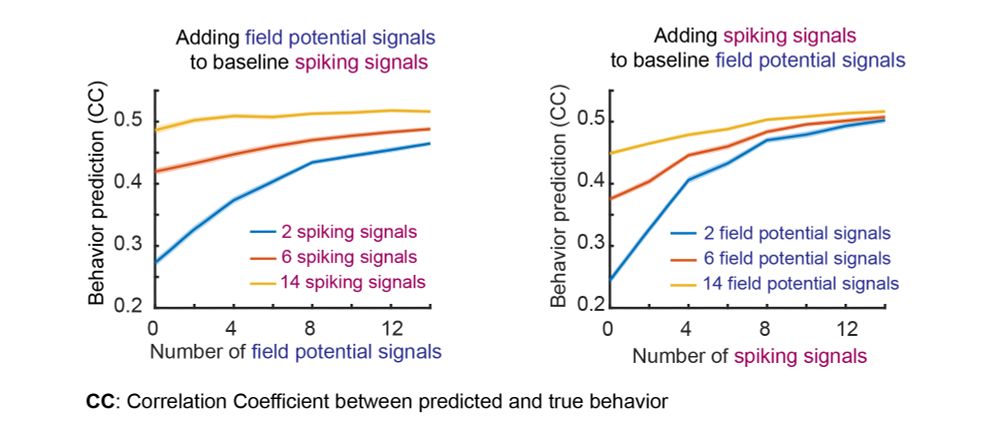

✅ Improves real-time behavior decoding via multimodal fusion

✅ Outperforms recent multimodal neural models

It also:

✅ Generalizes to a high-dim dataset with Neuropixels spikes and calcium imaging

🔸 Models each modality at its own timescale & forward-predicts its dynamics to infer fast latent factors

🔸 Nonlinearly fuses modality-specific factors

🔸 Enables real-time inference via its linear state-space model (SSM) backbone
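
To make the last point concrete, here is a minimal sketch of causal inference with a linear SSM when two modalities arrive on different clocks. It is not the MRINE implementation: it is a plain Kalman filter, it leaves out the nonlinear encoders and fusion entirely, and every name and number in it (`kalman_step`, `run_filter`, `slow_every`, the dynamics and noise matrices) is an assumption chosen for illustration.

```python
import numpy as np

def kalman_step(x, P, A, Q, observations):
    """One causal update: predict with the linear dynamics, then correct
    with whichever modalities delivered a sample at this time step."""
    x = A @ x
    P = A @ P @ A.T + Q
    for y, C, R in observations:
        S = C @ P @ C.T + R                 # innovation covariance
        K = P @ C.T @ np.linalg.inv(S)      # Kalman gain
        x = x + K @ (y - C @ x)
        P = (np.eye(len(x)) - K @ C) @ P
    return x, P

rng = np.random.default_rng(0)
T, slow_every = 200, 5                      # slow modality: one sample per 5 steps
theta = 0.1
A = 0.98 * np.array([[np.cos(theta), -np.sin(theta)],
                     [np.sin(theta),  np.cos(theta)]])   # hypothetical latent dynamics
Q = 0.01 * np.eye(2)
C_fast, R_fast = rng.standard_normal((3, 2)), 0.5 * np.eye(3)
C_slow, R_slow = rng.standard_normal((2, 2)), 0.1 * np.eye(2)

# Simulate a latent trajectory and the two observation streams.
x_true = np.array([1.0, 0.0])
fast_obs, slow_obs = [], {}
for t in range(T):
    x_true = A @ x_true + rng.multivariate_normal(np.zeros(2), Q)
    fast_obs.append(C_fast @ x_true + rng.multivariate_normal(np.zeros(3), R_fast))
    if t % slow_every == 0:
        slow_obs[t] = C_slow @ x_true + rng.multivariate_normal(np.zeros(2), R_slow)

def run_filter(use_slow):
    """Filter the streams in time order, using only past and present samples."""
    x, P = np.zeros(2), np.eye(2)
    estimates = np.zeros((T, 2))
    for t in range(T):
        obs = [(fast_obs[t], C_fast, R_fast)]
        if use_slow and t in slow_obs:
            obs.append((slow_obs[t], C_slow, R_slow))
        x, P = kalman_step(x, P, A, Q, obs)
        estimates[t] = x
    return estimates, P

est_fused, P_fused = run_filter(use_slow=True)
est_fast, P_fast = run_filter(use_slow=False)
print("posterior uncertainty, fused vs fast-only:", np.trace(P_fused), np.trace(P_fast))
```

Each step costs a handful of small matrix products and never looks at future samples, which is what makes a linear backbone convenient for real-time use. In this toy setup the fused filter ends with a smaller posterior covariance than the fast-only one.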

But fusion is hard: modalities differ in timescales & distributions, and BCIs require real-time inference.

In our third paper at #NeurIPS2025, we present MRINE, which does exactly that — improving decoding even for modalities w/ distinct timescales & distributions.

👏 Eray Erturk

🧵 Paper Code ⬇️

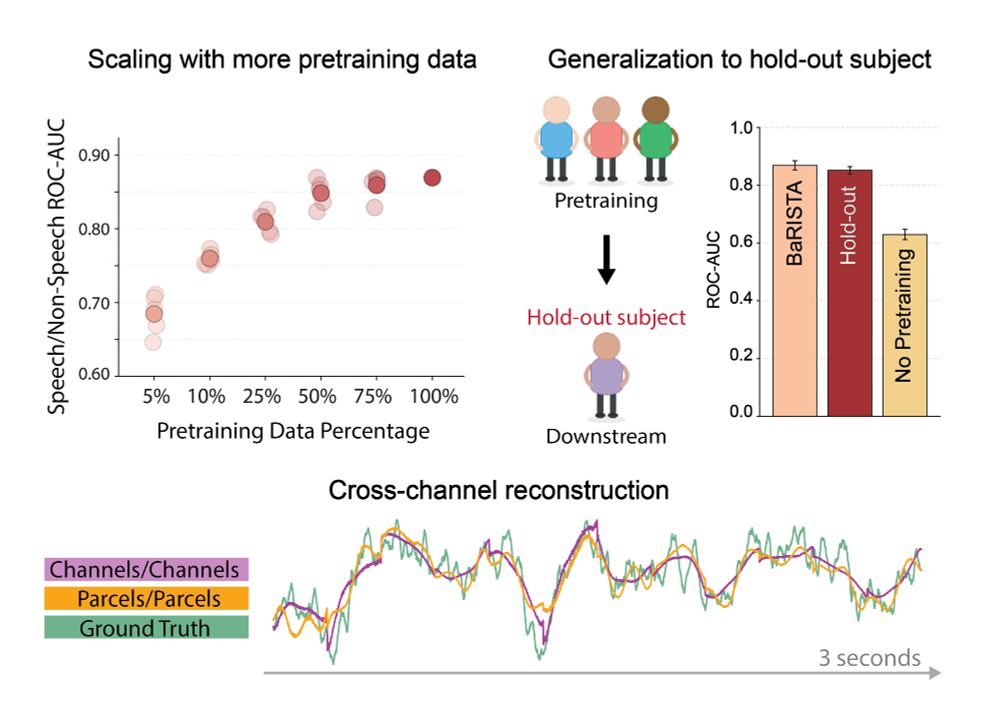

✅ Scales with increased pretraining data

✅ Generalizes to held-out subjects

✅ Can use spatial scales larger than channel level without sacrificing channel reconstruction performance

✅ BaRISTA shows that spatial encoding at scales larger than individual channels improves downstream decoding of auditory or visual features

✅ BaRISTA outperforms state-of-the-art iEEG models given its flexible spatial encoding

In our second paper at #NeurIPS2025, we present BaRISTA ☕ — a self-supervised multi-subject model that enables flexible spatial encoding & boosts downstream decoding.

🧵 Paper Code ⬇️

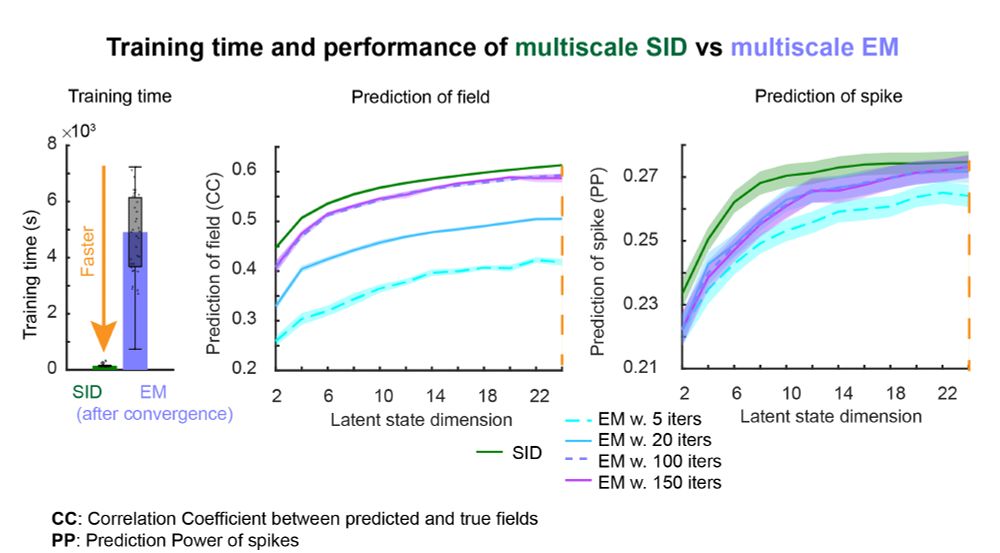

✅ Substantial decoding performance improvement over several LFP-only baselines

✅ Consistent improvements in unsupervised, supervised & multi-session distillation setups

✅ Generalization to unseen sessions without additional distillation

✅ Spike-aligned LFP latent structure

1️⃣ Pretrains a multi-session spike model

2️⃣ Fine-tunes the multi-session spike model on new spike signals

3️⃣ Trains the Distilled LFP model via cross-modal representation alignment

🔥 This produces spike-informed LFP models with significantly improved decoding.
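
Step 3 can be sketched in a few lines, with heavy simplifications. The sketch below assumes a frozen teacher that already outputs spike latents, a linear student instead of a deep LFP model, and a mean-squared-error alignment objective; the objective actually used in the paper may differ. All data are synthetic and all names (`teacher_latents`, `alignment_loss`, `W`) are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
n_samples, latent_dim, n_lfp = 500, 3, 8

# Synthetic stand-ins (assumptions): a shared latent drives the LFP features,
# and the frozen spike teacher exposes a slightly noisy view of that latent.
z = rng.standard_normal((n_samples, latent_dim))
teacher_latents = z + 0.05 * rng.standard_normal(z.shape)
lfp = (z @ rng.standard_normal((latent_dim, n_lfp))
       + 0.1 * rng.standard_normal((n_samples, n_lfp)))

def alignment_loss(W):
    """Mean squared distance between student (LFP) and teacher (spike) latents."""
    return np.mean((lfp @ W - teacher_latents) ** 2)

# Linear student, trained by gradient descent; the teacher is never updated.
W = np.zeros((n_lfp, latent_dim))
initial_loss = alignment_loss(W)

# Step size 1/L, where L is the largest curvature of the quadratic loss.
lipschitz = 2.0 * np.linalg.norm(lfp, 2) ** 2 / teacher_latents.size
step = 1.0 / lipschitz
for _ in range(300):
    grad = 2.0 * lfp.T @ (lfp @ W - teacher_latents) / teacher_latents.size
    W -= step * grad

final_loss = alignment_loss(W)
print(f"alignment loss: {initial_loss:.3f} -> {final_loss:.3f}")
```

After training, the student needs only LFP at inference time; the spike teacher is used solely to shape the student's latent space.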

We show that high-fidelity spike transformer models can teach LFP models to substantially enhance LFP decoding. #BCI

👏 Eray Erturk

🧵 Paper, Code ⬇️

✅ Self-attention improves neural-behavior predictions by learning long-range patterns while convolutions learn local ones

✅ Two-stage learning improves behavior prediction by disentangling behaviorally relevant dynamics

✅ Operates directly on raw images & avoids preprocessing.

✅ Combines self-attention and convolutional layers to model both global and local patterns.

✅ Uses two-stage learning of convolutional RNNs (ConvRNNs) to disentangle behaviorally relevant and other neural dynamics.

SBIND learns local and global spatiotemporal patterns in raw widefield calcium and functional ultrasound neural images.

👏M Hoseini

🧵Paper, Code⬇️

✅ Disentangles intrinsic behaviorally relevant neural dynamics from input, neural-specific & behavior-specific dynamics

✅ Captures nonlinearity

It is a multi-stage RNN: each stage learns a subtype of dynamics & combines a predictor network w/ a generative network to learn intrinsic dynamics.

BRAID disentangles the intrinsic dynamics shared between modalities from input dynamics and modality-specific dynamics.

👏 Parsa Vahidi & Omid Sani

@iclr-conf.bsky.social

🧵, Paper & Code ⬇️

👏 Han-Lin Hsieh

@iclr-conf.bsky.social

Paper, Code, 🧵⬇️

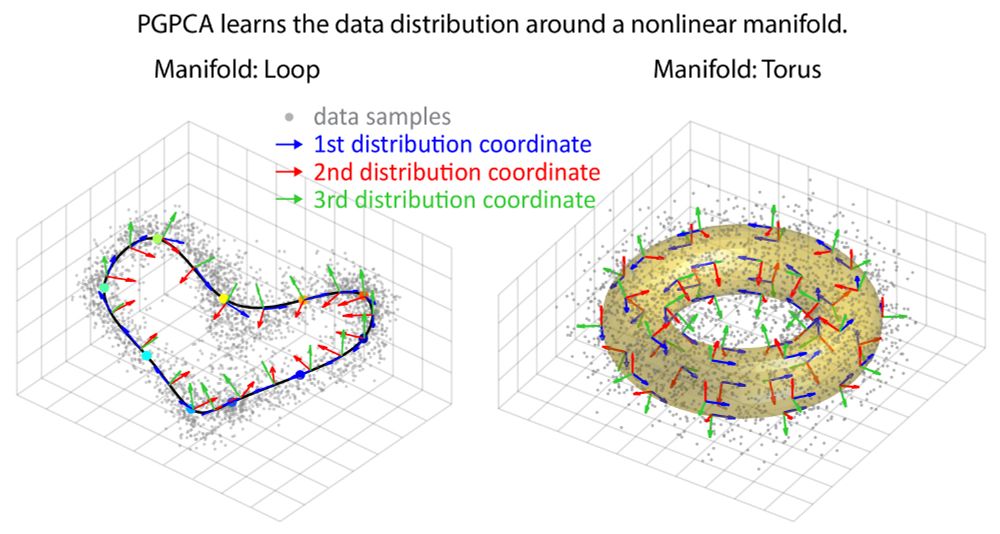

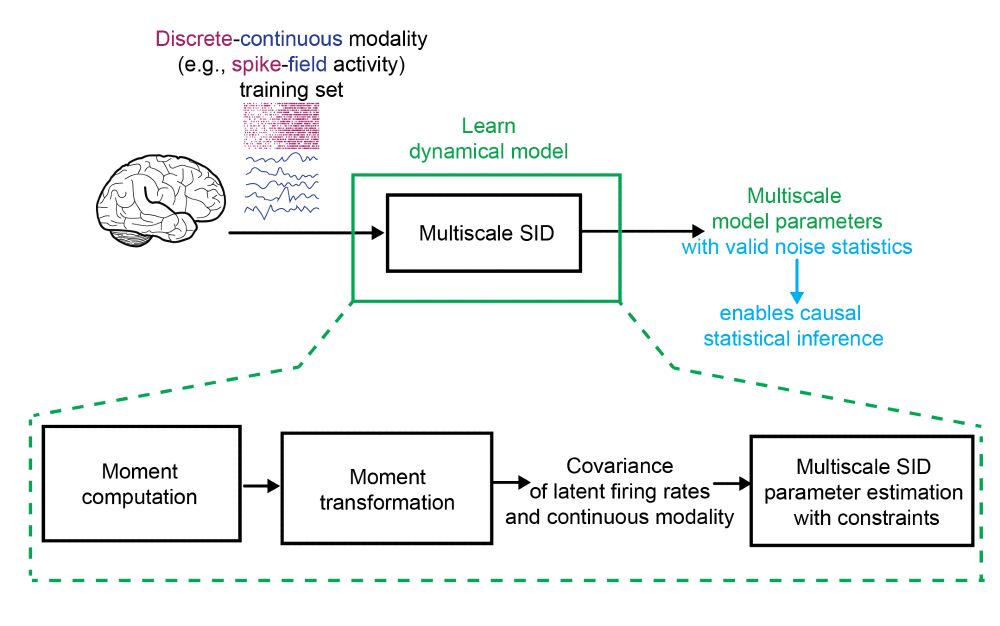

We develop a multimodal subspace identification method to do so & enable causal multimodal decoding!

👏P Ahmadipour!

📜J Neural Eng Paper: iopscience.iop.org/article/10.1...

Code+🧵⬇️
