Marcel Hussing

@marcelhussing.bsky.social

PhD student at the University of Pennsylvania. Prev, intern at MSR, currently at Meta FAIR. Interested in reliable and replicable reinforcement learning, robotics and knowledge discovery: https://marcelhussing.github.io/

All posts are my own.

All posts are my own.

Pinned

Replicable Reinforcement Learning with Linear Function Approximation

Replication of experimental results has been a challenge faced by many scientific disciplines, including the field of machine learning. Recent work on the theory of machine learning has formalized rep...

arxiv.org

I think I posted about it before but never with a thread. We recently put a new preprint on arxiv.

📖 Replicable Reinforcement Learning with Linear Function Approximation

🔗 arxiv.org/abs/2509.08660

In this paper, we study formal replicability in RL with linear function approximation. The... (1/6)

📖 Replicable Reinforcement Learning with Linear Function Approximation

🔗 arxiv.org/abs/2509.08660

In this paper, we study formal replicability in RL with linear function approximation. The... (1/6)

It's mind-boggling to my how many of the papers I reviewed don't cite a single paper that is older than like 2020. It's like we try to collectively forget what people did in the past so we can publish more.

October 31, 2025 at 7:19 PM

It's mind-boggling to my how many of the papers I reviewed don't cite a single paper that is older than like 2020. It's like we try to collectively forget what people did in the past so we can publish more.

Am at Columbia today giving a talk about (in part) this work. Have a few hours to kill afterwards. If anyone is around there and wants to chat, DM me.

I think I posted about it before but never with a thread. We recently put a new preprint on arxiv.

📖 Replicable Reinforcement Learning with Linear Function Approximation

🔗 arxiv.org/abs/2509.08660

In this paper, we study formal replicability in RL with linear function approximation. The... (1/6)

📖 Replicable Reinforcement Learning with Linear Function Approximation

🔗 arxiv.org/abs/2509.08660

In this paper, we study formal replicability in RL with linear function approximation. The... (1/6)

Replicable Reinforcement Learning with Linear Function Approximation

Replication of experimental results has been a challenge faced by many scientific disciplines, including the field of machine learning. Recent work on the theory of machine learning has formalized rep...

arxiv.org

October 27, 2025 at 3:13 PM

Am at Columbia today giving a talk about (in part) this work. Have a few hours to kill afterwards. If anyone is around there and wants to chat, DM me.

I think I posted about it before but never with a thread. We recently put a new preprint on arxiv.

📖 Replicable Reinforcement Learning with Linear Function Approximation

🔗 arxiv.org/abs/2509.08660

In this paper, we study formal replicability in RL with linear function approximation. The... (1/6)

📖 Replicable Reinforcement Learning with Linear Function Approximation

🔗 arxiv.org/abs/2509.08660

In this paper, we study formal replicability in RL with linear function approximation. The... (1/6)

Replicable Reinforcement Learning with Linear Function Approximation

Replication of experimental results has been a challenge faced by many scientific disciplines, including the field of machine learning. Recent work on the theory of machine learning has formalized rep...

arxiv.org

October 26, 2025 at 2:16 PM

I think I posted about it before but never with a thread. We recently put a new preprint on arxiv.

📖 Replicable Reinforcement Learning with Linear Function Approximation

🔗 arxiv.org/abs/2509.08660

In this paper, we study formal replicability in RL with linear function approximation. The... (1/6)

📖 Replicable Reinforcement Learning with Linear Function Approximation

🔗 arxiv.org/abs/2509.08660

In this paper, we study formal replicability in RL with linear function approximation. The... (1/6)

Reposted by Marcel Hussing

I have been told I need to get more modern in my paper promotion! github.com/cvoelcker/reppo / arxiv.org/abs/2507.11019 @marcelhussing.bsky.social

September 26, 2025 at 2:51 PM

I have been told I need to get more modern in my paper promotion! github.com/cvoelcker/reppo / arxiv.org/abs/2507.11019 @marcelhussing.bsky.social

Super stoked for the New York RL workshop tomorrow. Will be presenting 2 orals:

* Replicable Reinforcement Learning with Linear Function Approximation

* Relative Entropy Pathwise Policy Optimization

We already posted about the 2nd one (below), I'll get to talking about the first one in a bit here.

* Replicable Reinforcement Learning with Linear Function Approximation

* Relative Entropy Pathwise Policy Optimization

We already posted about the 2nd one (below), I'll get to talking about the first one in a bit here.

September 11, 2025 at 2:28 PM

Super stoked for the New York RL workshop tomorrow. Will be presenting 2 orals:

* Replicable Reinforcement Learning with Linear Function Approximation

* Relative Entropy Pathwise Policy Optimization

We already posted about the 2nd one (below), I'll get to talking about the first one in a bit here.

* Replicable Reinforcement Learning with Linear Function Approximation

* Relative Entropy Pathwise Policy Optimization

We already posted about the 2nd one (below), I'll get to talking about the first one in a bit here.

(Maybe) unpopular opinion: There should not be *any* new experiments in a rebuttal. A rebuttal is for clarifications and incorrect statements in a review. You should not be allowed to add new content at that point. Either your paper is done or it isn't. It should not be written during rebuttals.

An inherent problem with asking for experiments in a rebuttal is that running a baseline in a week is highly likely to be sloppy work

August 16, 2025 at 4:12 PM

(Maybe) unpopular opinion: There should not be *any* new experiments in a rebuttal. A rebuttal is for clarifications and incorrect statements in a review. You should not be allowed to add new content at that point. Either your paper is done or it isn't. It should not be written during rebuttals.

New ChatGPT data just dropped

August 7, 2025 at 11:29 PM

New ChatGPT data just dropped

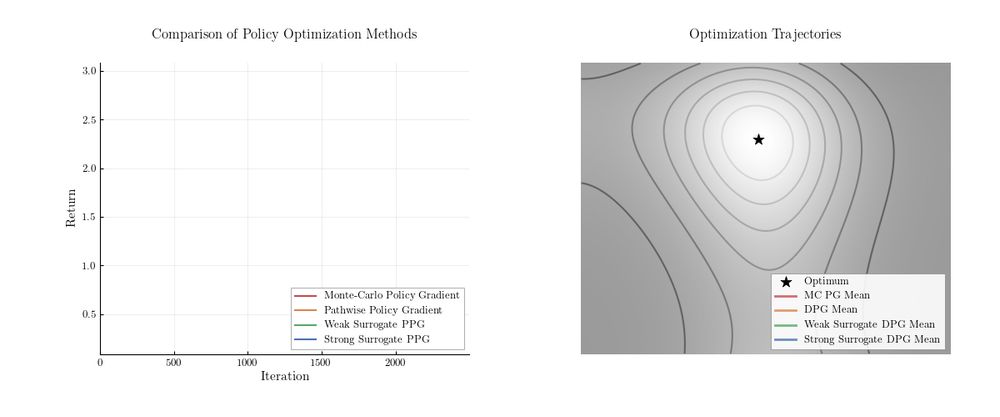

My PhD journey started with me fine-tuning hparams of PPO which ultimately led to my research on stability. With REPPO, we've made a huge step in the right direction. Stable learning, no tuning on a new benchmark, amazing performance. REPPO has the potential to be the PPO killer we all waited for.

July 17, 2025 at 7:41 PM

My PhD journey started with me fine-tuning hparams of PPO which ultimately led to my research on stability. With REPPO, we've made a huge step in the right direction. Stable learning, no tuning on a new benchmark, amazing performance. REPPO has the potential to be the PPO killer we all waited for.

Reposted by Marcel Hussing

🔥 Presenting Relative Entropy Pathwise Policy Optimization #REPPO 🔥

Off-policy #RL (eg #TD3) trains by differentiating a critic, while on-policy #RL (eg #PPO) uses Monte-Carlo gradients. But is that necessary? Turns out: No! We show how to get critic gradients on-policy. arxiv.org/abs/2507.11019

Off-policy #RL (eg #TD3) trains by differentiating a critic, while on-policy #RL (eg #PPO) uses Monte-Carlo gradients. But is that necessary? Turns out: No! We show how to get critic gradients on-policy. arxiv.org/abs/2507.11019

July 17, 2025 at 7:11 PM

Reposted by Marcel Hussing

Works that use #VAML/ #MuZero losses often use deterministic models. But if we want to use stochastic models to measure uncertainty or because we want to leverage current SOTA models such as #transformers and #diffusion, we need to take care! Naively translating the loss functions leads to mistakes!

Would you be surprised to learn that many empirical implementations of value-aware model learning (VAML) algos, including MuZero, lead to incorrect model & value functions when training stochastic models 🤕? In our new @icmlconf.bsky.social 2025 paper, we show why this happens and how to fix it 🦾!

June 19, 2025 at 3:20 PM

Works that use #VAML/ #MuZero losses often use deterministic models. But if we want to use stochastic models to measure uncertainty or because we want to leverage current SOTA models such as #transformers and #diffusion, we need to take care! Naively translating the loss functions leads to mistakes!

Reposted by Marcel Hussing

Dhruv Rohatgi will be giving a lecture on our recent work on comp-stat tradeoffs in next-token prediction at the RL Theory virtual seminar series (rl-theory.bsky.social) tomorrow at 2pm EST! Should be a fun talk---come check it out!!

May 26, 2025 at 7:19 PM

Dhruv Rohatgi will be giving a lecture on our recent work on comp-stat tradeoffs in next-token prediction at the RL Theory virtual seminar series (rl-theory.bsky.social) tomorrow at 2pm EST! Should be a fun talk---come check it out!!

Just arrived in Montreal for my internship at FAIR. So far Montreal has been amazing, great walkable areas, good food and nice people! Although I must say I have to get used to being addressed in French 😅

May 26, 2025 at 4:23 PM

Just arrived in Montreal for my internship at FAIR. So far Montreal has been amazing, great walkable areas, good food and nice people! Although I must say I have to get used to being addressed in French 😅

We'll be presenting our work on Oracle-Efficient Reinforcement Learning for Max Value Ensembles at the RL theory seminar! Been following this series for a while, super excited we get to present some of our work. 🥳

Last seminars before the summer break:

04/29: Max Simchowitz (CMU)

05/06: Jeongyeol Kwon (Univ. of Widsconsin-Madison)

05/20: Sikata Sengupta & Marcel Hussing (Univ. of Pennsylvania)

05/27: Dhruv Rohatgi (MIT)

06/03: David Janz (Univ. of Oxford)

06/10: Nneka Okolo (MIT)

04/29: Max Simchowitz (CMU)

05/06: Jeongyeol Kwon (Univ. of Widsconsin-Madison)

05/20: Sikata Sengupta & Marcel Hussing (Univ. of Pennsylvania)

05/27: Dhruv Rohatgi (MIT)

06/03: David Janz (Univ. of Oxford)

06/10: Nneka Okolo (MIT)

April 25, 2025 at 2:22 PM

We'll be presenting our work on Oracle-Efficient Reinforcement Learning for Max Value Ensembles at the RL theory seminar! Been following this series for a while, super excited we get to present some of our work. 🥳

Reposted by Marcel Hussing

Many great papers from Mila!

Two by my team at the Adaptive Agents Lab (Adage) together with collaborators:

A Truncated Newton Method for Optimal Transport

openreview.net/forum?id=gWr...

MAD-TD: Model-Augmented Data stabilizes High Update Ratio RL

openreview.net/forum?id=6Rt...

#ICLR2025

Two by my team at the Adaptive Agents Lab (Adage) together with collaborators:

A Truncated Newton Method for Optimal Transport

openreview.net/forum?id=gWr...

MAD-TD: Model-Augmented Data stabilizes High Update Ratio RL

openreview.net/forum?id=6Rt...

#ICLR2025

This week, Mila researchers will present more than 90 papers at @iclr-conf.bsky.social in Singapore. Every day, we will share a schedule featuring Mila-affiliated presentations.

Day 1 👇 #ICLR2025

mila.quebec/en/news/foll...

Day 1 👇 #ICLR2025

mila.quebec/en/news/foll...

April 24, 2025 at 2:19 AM

Many great papers from Mila!

Two by my team at the Adaptive Agents Lab (Adage) together with collaborators:

A Truncated Newton Method for Optimal Transport

openreview.net/forum?id=gWr...

MAD-TD: Model-Augmented Data stabilizes High Update Ratio RL

openreview.net/forum?id=6Rt...

#ICLR2025

Two by my team at the Adaptive Agents Lab (Adage) together with collaborators:

A Truncated Newton Method for Optimal Transport

openreview.net/forum?id=gWr...

MAD-TD: Model-Augmented Data stabilizes High Update Ratio RL

openreview.net/forum?id=6Rt...

#ICLR2025

Reposted by Marcel Hussing

📢 Deadline Extension Alert! 📢

Good news! We’re extending the #CoLLAs2025 submission deadlines:

📝 Abstracts: Feb 26, 2025, 23:59 AoE

📄 Papers: Mar 3, 2025, 23:59 AoE

More time to refine your work—don't miss this chance to contribute to #lifelong-learning research! 🚀

🔗 lifelong-ml.cc

Good news! We’re extending the #CoLLAs2025 submission deadlines:

📝 Abstracts: Feb 26, 2025, 23:59 AoE

📄 Papers: Mar 3, 2025, 23:59 AoE

More time to refine your work—don't miss this chance to contribute to #lifelong-learning research! 🚀

🔗 lifelong-ml.cc

CoLLAs

Collas

lifelong-ml.cc

February 20, 2025 at 8:18 PM

📢 Deadline Extension Alert! 📢

Good news! We’re extending the #CoLLAs2025 submission deadlines:

📝 Abstracts: Feb 26, 2025, 23:59 AoE

📄 Papers: Mar 3, 2025, 23:59 AoE

More time to refine your work—don't miss this chance to contribute to #lifelong-learning research! 🚀

🔗 lifelong-ml.cc

Good news! We’re extending the #CoLLAs2025 submission deadlines:

📝 Abstracts: Feb 26, 2025, 23:59 AoE

📄 Papers: Mar 3, 2025, 23:59 AoE

More time to refine your work—don't miss this chance to contribute to #lifelong-learning research! 🚀

🔗 lifelong-ml.cc

I was very hyped about this place initially, now I come here, see 5 posts about politics, unfollow 5 people and close the website. Where are the interesting AI posts?

February 22, 2025 at 6:05 PM

I was very hyped about this place initially, now I come here, see 5 posts about politics, unfollow 5 people and close the website. Where are the interesting AI posts?

Reposted by Marcel Hussing

Can you solve group-conditional online conformal prediction with a no-regret learning algorithm? Not with vanilla regret, but -yes- with swap regret. And algorithms from the follow-the-regularized leader family (notably online gradient descent) work really well for other reasons.

February 18, 2025 at 1:19 PM

Can you solve group-conditional online conformal prediction with a no-regret learning algorithm? Not with vanilla regret, but -yes- with swap regret. And algorithms from the follow-the-regularized leader family (notably online gradient descent) work really well for other reasons.

Reposted by Marcel Hussing

Bummed out about recent politics & news drowning out AI and science you want to see on Bluesky?

Well, here is a small "sky thread" (written on a ✈️) about something I recently discovered: e-values!

They are an alternative to the standard p-values as a measure of statistical significance. 1/N

Well, here is a small "sky thread" (written on a ✈️) about something I recently discovered: e-values!

They are an alternative to the standard p-values as a measure of statistical significance. 1/N

February 17, 2025 at 5:54 PM

Bummed out about recent politics & news drowning out AI and science you want to see on Bluesky?

Well, here is a small "sky thread" (written on a ✈️) about something I recently discovered: e-values!

They are an alternative to the standard p-values as a measure of statistical significance. 1/N

Well, here is a small "sky thread" (written on a ✈️) about something I recently discovered: e-values!

They are an alternative to the standard p-values as a measure of statistical significance. 1/N

Throwing compute at things has proven quite powerful in other domains but until recently not as much in #ReinforcementLearning.

Excited to share that out MAD-TD paper got a spotlight at #ICLR25! Check out Claas' thread on how to get the most out of your compute/data buck when training from scratch.

Excited to share that out MAD-TD paper got a spotlight at #ICLR25! Check out Claas' thread on how to get the most out of your compute/data buck when training from scratch.

Do you want to get the most out of your samples, but increasing the update steps just destabilizes RL training? Our #ICLR2025 spotlight 🎉 paper shows that using the values of unseen actions causes instability in continuous state-action domains and how to combat this problem with learned models!

February 11, 2025 at 10:57 PM

Throwing compute at things has proven quite powerful in other domains but until recently not as much in #ReinforcementLearning.

Excited to share that out MAD-TD paper got a spotlight at #ICLR25! Check out Claas' thread on how to get the most out of your compute/data buck when training from scratch.

Excited to share that out MAD-TD paper got a spotlight at #ICLR25! Check out Claas' thread on how to get the most out of your compute/data buck when training from scratch.

Reposted by Marcel Hussing

EC 2025 (S)PC --- lets get ready for the Super Bowl! Every time there is a first down, bid on a paper. Field goal? Bid on two. Touchdown? Bid on 5 papers (10 if its the Eagles!) At the halftime show enter your topic preferences and conflicts. Lets go birds!

February 8, 2025 at 7:33 PM

EC 2025 (S)PC --- lets get ready for the Super Bowl! Every time there is a first down, bid on a paper. Field goal? Bid on two. Touchdown? Bid on 5 papers (10 if its the Eagles!) At the halftime show enter your topic preferences and conflicts. Lets go birds!

Reposted by Marcel Hussing

🚨🚨 RLC deadline has been extended by a week! Abstract deadline is Feb. 21 with a paper deadline of Feb. 28 🚨🚨. Please spread the word!

February 8, 2025 at 6:05 PM

🚨🚨 RLC deadline has been extended by a week! Abstract deadline is Feb. 21 with a paper deadline of Feb. 28 🚨🚨. Please spread the word!

What a future work section should be:

Oh, and here is this interesting and hard open problem that someone should solve.

Future work sections in empirical ML papers:

We leave hyperparameter optimization for future work.

Oh, and here is this interesting and hard open problem that someone should solve.

Future work sections in empirical ML papers:

We leave hyperparameter optimization for future work.

January 29, 2025 at 3:59 PM

What a future work section should be:

Oh, and here is this interesting and hard open problem that someone should solve.

Future work sections in empirical ML papers:

We leave hyperparameter optimization for future work.

Oh, and here is this interesting and hard open problem that someone should solve.

Future work sections in empirical ML papers:

We leave hyperparameter optimization for future work.

My new year's resolution is to spend more time thinking. Last year I found myself deep in the nitty gritty of creating solutions. While that is important it is also necessary to reflect and look at the bigger picture. Entering my 5th year, I will try to focus more on defining the next problems.

January 1, 2025 at 11:51 AM

My new year's resolution is to spend more time thinking. Last year I found myself deep in the nitty gritty of creating solutions. While that is important it is also necessary to reflect and look at the bigger picture. Entering my 5th year, I will try to focus more on defining the next problems.

Apparently I'm in the top 1% of wandb users. Good or bad sign?

December 27, 2024 at 3:06 AM

Apparently I'm in the top 1% of wandb users. Good or bad sign?