NLP, Question Answering, Human AI, LLMs

More at mgor.info

TL;DR: We introduce AdvScore, a human-grounded metric that measures how "adversarial" a dataset really is by comparing model and human performance. It helps build better, longer-lasting benchmarks, such as the proposed AdvQA, that evolve with AI progress.

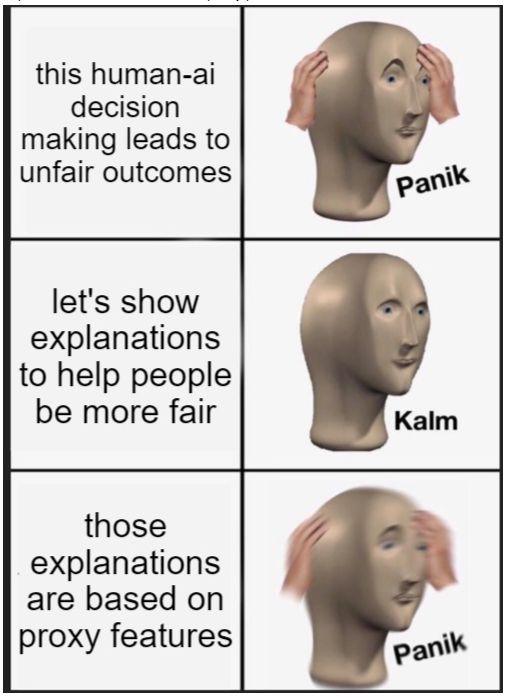

Despite hopes that explanations improve fairness, we see that when biases are hidden behind proxy features, explanations may not help.

Navita Goyal, Connor Baumler, et al., IUI’24

hal3.name/docs/daume23...

We use item response theory to compare how well 155 people and 70 chatbots answer questions, teasing apart where their abilities complement each other and drawing out implications for design.

Maharshi Gor et al., EMNLP’24

hal3.name/docs/daume24...
