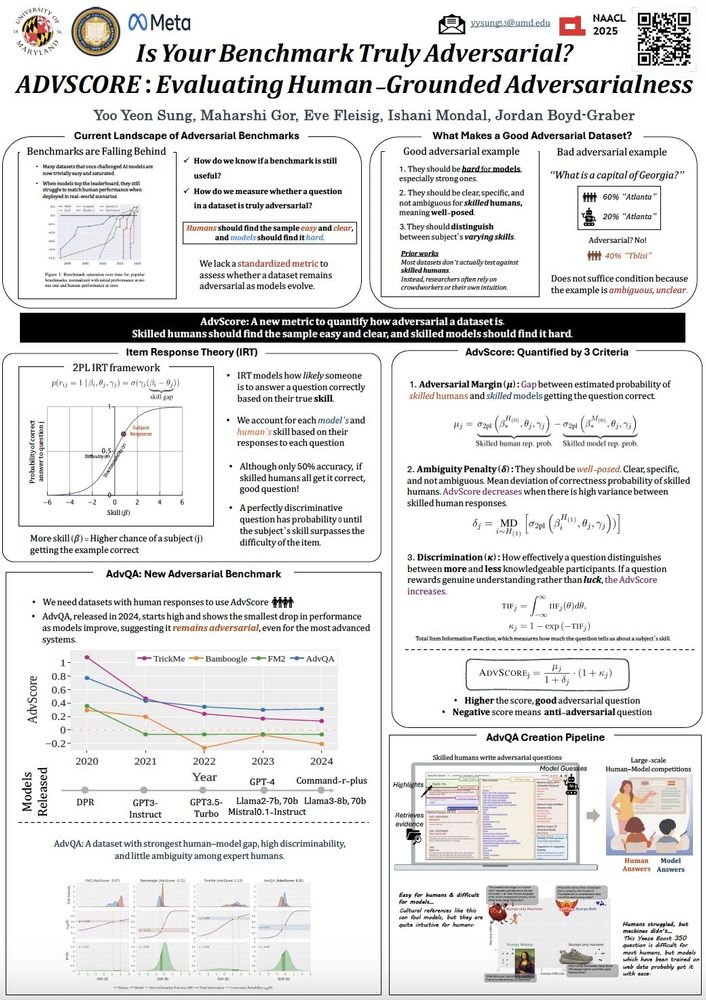

NLP, Question Answering, Human AI, LLMs

More at mgor.info

🚨 Don't miss out on our poster presentation *today at 2 pm* by Yoo Yeon (first author).

📍Poster Session 5 - HC: Human-centered NLP

💼 Highly recommend talking to her if you are hiring and/or interested in human-focused AI dev and evals!

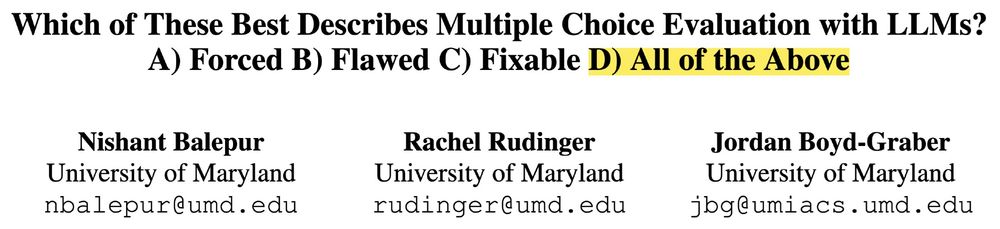

Multiple choice evals for LLMs are simple and popular, but we know they are awful 😬

We complain they're full of errors, saturated, and test nothing meaningful, so why do we still use them? 🫠

Here's why MCQA evals are broken, and how to fix them 🧵

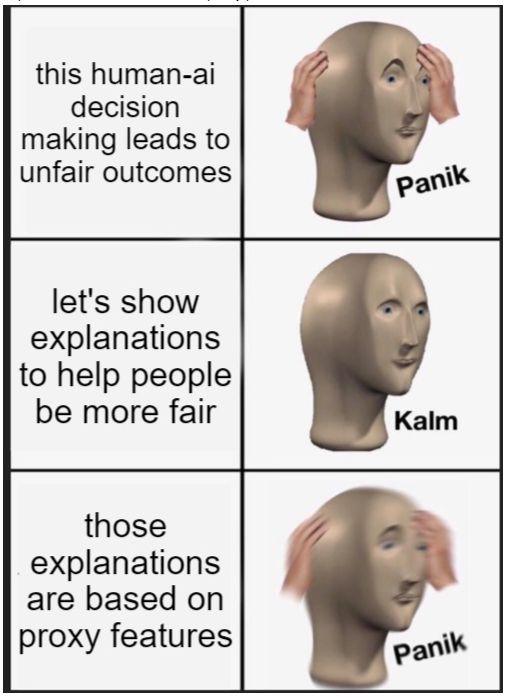

Despite hopes that explanations improve fairness, we see that when biases are hidden behind proxy features, explanations may not help.

Navita Goyal, Connor Baumler +al IUI’24

hal3.name/docs/daume23...

We use item response theory to compare the capabilities of 155 people vs 70 chatbots at answering questions, teasing apart complementarities; implications for design.

by Maharshi Gor +al EMNLP’24

hal3.name/docs/daume24...

Hallucination is totally the wrong word, implying it is perceiving the world incorrectly.

But it's generating false, plausible-sounding statements. Confabulation is literally the perfect word.

So, let's all please start referring to any junk that an LLM makes up as "confabulations".

A hallucination is a false subjective sensory experience. ChatGPT doesn't have experiences!

It's just making up plausible-sounding bs to cover knowledge gaps. That's confabulation.

go.bsky.app/V9qWjEi