• DPO (preference-based)

• GRPO (verifier-based RL)

→ No architecture changes

→ No expert supervision

→ Big gains on hard tasks
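For readers new to the two recipes above, here is a minimal sketch of their core quantities. It is not the authors' training code. It assumes sequence-level log-probabilities are already computed, uses a hypothetical exact-match `verifier_reward`, and covers only GRPO's group-relative advantage, not the full clipped policy-gradient objective with its KL term.

```python
import math
from statistics import mean, pstdev


def _softplus(z):
    # log(1 + exp(z)), computed without overflow for large |z|.
    if z > 0:
        return z + math.log1p(math.exp(-z))
    return math.log1p(math.exp(z))


def dpo_loss(policy_chosen_logp, policy_rejected_logp,
             ref_chosen_logp, ref_rejected_logp, beta=0.1):
    """DPO loss for one preference pair (chosen vs. rejected answer)."""
    # Implicit reward margin: how much more the policy, relative to the
    # frozen reference model, favors the chosen answer over the rejected one.
    margin = beta * ((policy_chosen_logp - ref_chosen_logp)
                     - (policy_rejected_logp - ref_rejected_logp))
    # -log(sigmoid(margin)) == softplus(-margin)
    return _softplus(-margin)


def verifier_reward(answer, reference):
    # Hypothetical binary verifier: 1.0 if the final answer matches exactly.
    return 1.0 if answer.strip() == reference.strip() else 0.0


def grpo_advantages(rewards, eps=1e-6):
    """Group-relative advantages: normalize rewards within one prompt's group."""
    mu = mean(rewards)
    sigma = pstdev(rewards)  # population std; some implementations use sample std
    return [(r - mu) / (sigma + eps) for r in rewards]


if __name__ == "__main__":
    # Four sampled answers to one problem whose reference answer is "23".
    samples = ["23", "2", "23", "7"]
    rewards = [verifier_reward(s, "23") for s in samples]
    print(rewards)                   # [1.0, 0.0, 1.0, 0.0]
    print(grpo_advantages(rewards))  # positive for correct, negative for wrong
    print(dpo_loss(-1.0, -3.0, -2.0, -2.0))  # below log(2): chosen is favored
```

No critic network is needed for GRPO in this form: each answer is scored only against the other answers sampled for the same prompt, so a group that is all right or all wrong contributes zero advantage.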

Results (Qwen2.5-3B-Instruct, MATH level-5):


No expert traces. No test-time hacks.

Just self-explanation + RL-style training.

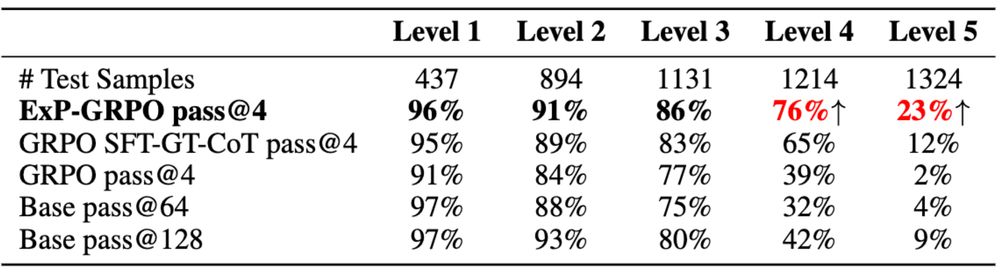

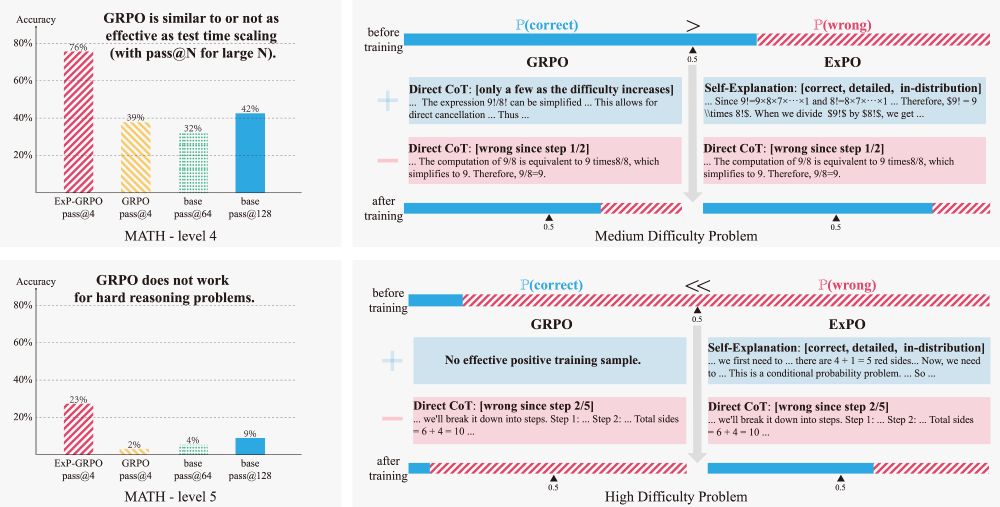

Result? Accuracy on MATH level-5 jumped from 2% → 23%.


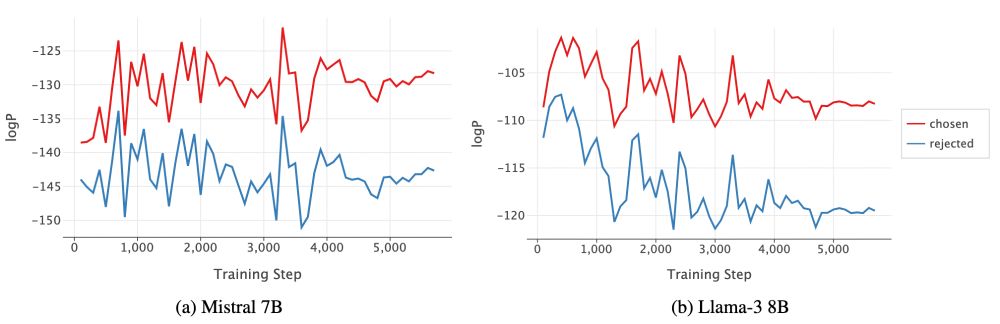

Our answer: Gradient Entanglement!

arxiv.org/abs/2410.13828
