COSMO Lab

@labcosmo.bsky.social

Computational Science and Modelling of materials and molecules at the atomic-scale, with machine learning.

September 23, 2025 at 7:26 AM

However, this seems to damage the transferability of highly preconditioned models such as MACE, and less so that of more expressive, unconstrained models such as PET. Does this match your experience?

September 23, 2025 at 7:26 AM

This doesn't matter much, as most of the fragments that make up the body-order decomposition are deranged soups of highly correlated electrons. Models with sufficient expressive power *can* learn if presented with the fragments ...
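This is a minimal sketch of the body-order decomposition, to make the "fragments" concrete. Each fragment's n-body term is its energy minus the terms of all its proper sub-fragments (inclusion-exclusion). Summing every term up to the full order therefore recovers the total energy exactly. The `toy_energy` function (an embedded-atom-like square root of pair couplings) and the atom positions are hypothetical. They were chosen only so that the higher-order terms are non-zero. In practice, fragment energies would come from electronic-structure calculations on isolated clusters.

```python
import itertools
import math

# Hypothetical 1D positions of four atoms (arbitrary units).
POSITIONS = {0: 0.0, 1: 1.0, 2: 2.2, 3: 3.1}


def toy_energy(fragment):
    """Hypothetical non-additive energy of an isolated fragment (tuple of atom ids)."""
    if not fragment:
        return 0.0
    one_body = -1.0 * len(fragment)  # constant reference energy per atom
    coupling = sum(
        math.exp(-abs(POSITIONS[i] - POSITIONS[j]))
        for i, j in itertools.combinations(fragment, 2)
    )
    return one_body - math.sqrt(coupling)  # square root makes the energy many-body


def body_order_terms(atoms, energy):
    """Map each fragment (sorted tuple) to its n-body term by inclusion-exclusion."""
    terms = {}
    for order in range(1, len(atoms) + 1):
        for fragment in itertools.combinations(atoms, order):
            # Remove the contributions of all proper, non-empty sub-fragments.
            lower = sum(
                terms[sub]
                for k in range(1, order)
                for sub in itertools.combinations(fragment, k)
            )
            terms[fragment] = energy(fragment) - lower
    return terms


atoms = tuple(sorted(POSITIONS))
terms = body_order_terms(atoms, toy_energy)
total = toy_energy(atoms)
for max_order in range(1, len(atoms) + 1):
    truncated = sum(v for f, v in terms.items() if len(f) <= max_order)
    print(f"up to {max_order}-body: truncation error = {total - truncated:+.4f}")
```

The bookkeeping is exact by construction. How quickly the terms shrink with increasing order is the physical question, and that decay need not be fast.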

September 23, 2025 at 7:26 AM

TL;DR: not really. ML potentials learn whatever they want, as long as it gives them good accuracy on the training set. We note in particular that MACE is strongly preconditioned to learn a fast-decaying body-order expansion, whether the true expansion decays fast or not.

August 28, 2025 at 7:19 AM

Anticipating 🧑🚀 Wei Bin's talk at #psik2025 (noon@roomA), 📢 a new #preprint using PET and the MAD dataset to train a universal #ml model for the density of states, giving band gaps for solids, clusters, surfaces and molecules with an MAE of ~200 meV. Go to the talk, or check out arxiv.org/html/2508.17...!
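This sketch shows one way to read a band gap off a density of states: take the highest occupied and the lowest empty energies where the DOS exceeds a small threshold. It is a hypothetical example on a synthetic, Gaussian-broadened DOS, and not necessarily the procedure used in the preprint. The Fermi level `e_fermi`, the threshold `tol` and the broadening `width` are assumptions, and both the broadening and the threshold shift the estimate.

```python
import math


def gaussian_dos(energies, centers, width=0.1):
    """Synthetic DOS: unit Gaussians (hypothetical broadening `width`, eV) at each level."""
    return [
        sum(math.exp(-0.5 * ((e - c) / width) ** 2) for c in centers)
        for e in energies
    ]


def band_gap_from_dos(energies, dos, e_fermi, tol=1e-3):
    """Gap (eV) between the highest occupied and lowest empty energies with DOS > tol."""
    # Metallic case: states at the grid point closest to the Fermi level.
    i_fermi = min(range(len(energies)), key=lambda i: abs(energies[i] - e_fermi))
    if dos[i_fermi] > tol:
        return 0.0
    occupied = [e for e, d in zip(energies, dos) if e <= e_fermi and d > tol]
    empty = [e for e, d in zip(energies, dos) if e > e_fermi and d > tol]
    if not occupied or not empty:
        raise ValueError("no band edge found on this energy grid")
    return min(empty) - max(occupied)


energies = [-3.0 + 0.01 * i for i in range(601)]  # eV grid from -3 to +3
dos = gaussian_dos(energies, centers=[-1.0, 1.5])  # hypothetical levels
gap = band_gap_from_dos(energies, dos, e_fermi=0.0)
print(f"estimated gap: {gap:.2f} eV (levels are 2.50 eV apart)")
```

Here the two levels are 2.5 eV apart, but the broadened band edges give a smaller apparent gap (about 1.76 eV on this grid). This is why the smearing and the threshold need to be chosen consistently.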

August 27, 2025 at 6:54 AM

The reconstructed surface contains sites with different reactivity. Despite its higher stability, at some sites the disordered surface is *more* reactive towards water, one of the main contaminants affecting the stability of LPS batteries. Useful for designing better stabilization strategies!

August 27, 2025 at 6:54 AM

Reconstructed surfaces are lower in energy, and their surface energy becomes less orientation-dependent, so the Wulff shape of the particles becomes more spherical.
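The Wulff construction links orientation dependence to particle shape. The equilibrium shape is the inner envelope of planes placed along each facet normal, at a distance proportional to that facet's surface energy. This minimal 2D sketch uses a hypothetical square lattice exposing {10} and {11} facets, with made-up surface energies that are not values from the paper. It shows that more similar energies give a rounder shape, measured here as the ratio of the largest to the smallest radius.

```python
import math


def wulff_radius(phi, facets):
    """Distance from the centre to the 2D Wulff shape boundary along angle phi.

    `facets` is a list of (normal_angle, surface_energy) pairs; the shape is the
    set of points x with x . n_i <= gamma_i for every facet i.
    """
    radii = []
    for theta, gamma in facets:
        c = math.cos(phi - theta)
        if c > 1e-12:  # only facets facing this direction bound the ray
            radii.append(gamma / c)
    return min(radii)


def anisotropy(gamma_10, gamma_11, n_samples=3600):
    """Max/min radius of the Wulff shape (1.0 would be a perfect circle)."""
    facets = [(k * math.pi / 2, gamma_10) for k in range(4)]
    facets += [(math.pi / 4 + k * math.pi / 2, gamma_11) for k in range(4)]
    radii = [wulff_radius(2 * math.pi * i / n_samples, facets) for i in range(n_samples)]
    return max(radii) / min(radii)


# Hypothetical surface energies (arbitrary units).
print(f"strongly orientation-dependent: {anisotropy(1.0, 1.3):.3f}")
print(f"weakly orientation-dependent:   {anisotropy(1.0, 1.0):.3f}")
```

With only two facet families, the roundest possible shape is a regular octagon, with a ratio of about 1.082. A surface energy that is nearly isotropic over many orientations pushes the ratio towards 1, which corresponds to a sphere in 3D.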

August 27, 2025 at 6:54 AM

📢 Now out in @physrevx.bsky.social Energy, journals.aps.org/prxenergy/ab..., from 🧑🚀 @dtisi.bsky.social and Hanna Türk: our #PET -powered study of the dynamic reconstruction of LPS surfaces, and of how it affects their structure, stability and reactivity.

August 22, 2025 at 7:40 AM

TL;DR: this is a cross-platform, model-agnostic library to handle atomistic data (including geometry and property derivatives such as forces and stresses) that lets you package your model into a portable TorchScript file.

August 22, 2025 at 7:40 AM

🚨 #machinelearning for #compchem goodies from our 🧑🚀 team incoming! After years of work, it's time to share. Go check out arxiv.org/abs/2508.15704 and/or metatensor.org to learn about #metatensor and #metatomic: what they are, what they do, and why you should use them for all of your atomistic ML projects 🔍.

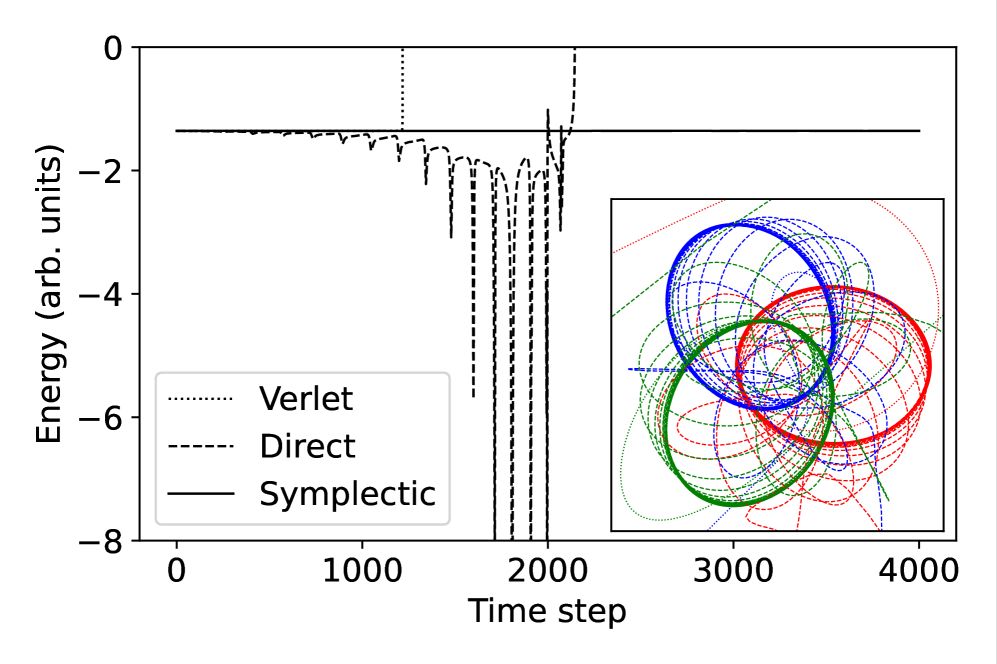

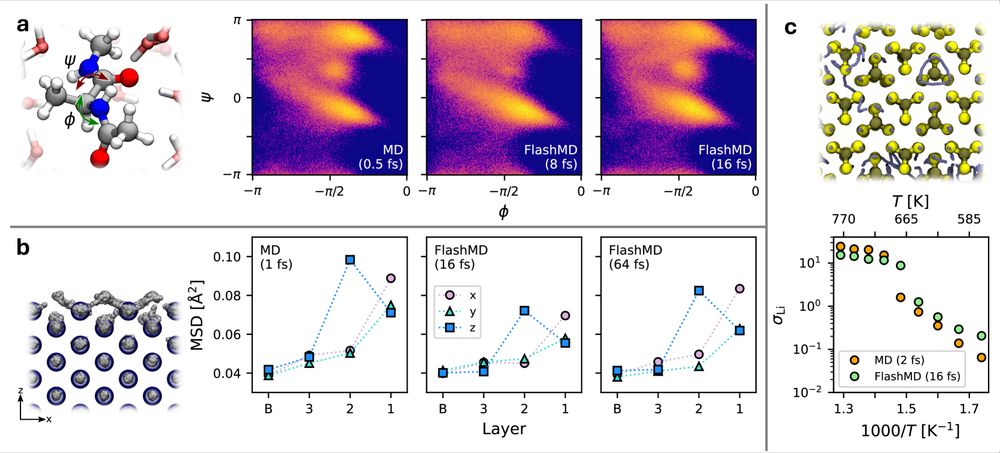

August 8, 2025 at 5:46 AM

We can get long-stride, geometry-preserving integration by learning the Hamilton-Jacobi action. This fixes the instability of direct MD prediction for good, rather than just patching it up, although it is not as fast. And it also works for serious simulations, like glassy relaxation in deeply supercooled GeTe!
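Learning an action helps because of a general property of type-2 generating functions. A map defined through S(q, P), via p = ∂S/∂q and Q = ∂S/∂P, is symplectic whatever S is, so any learned S yields a geometry-preserving step. The sketch illustrates this principle with the simplest hand-written choice, S(q, P) = qP + h(P²/2 + V(q)), which gives the symplectic Euler integrator. It then checks numerically that the one-step Jacobian has unit determinant (phase-space area is preserved), even for long steps. The double-well `V`, the step sizes and the finite-difference check are illustrative assumptions, not the preprint's model.

```python
def dV(q):
    """Derivative V'(q) of a hypothetical double well V(q) = q**4/4 - q**2/2."""
    return q**3 - q


def step_from_action(q, p, h):
    """One step of the map generated by S(q, P) = q*P + h*(P**2/2 + V(q)).

    p = dS/dq = P + h*V'(q)  ->  P = p - h*V'(q)
    Q = dS/dP = q + h*P
    (this particular S gives the symplectic Euler integrator)
    """
    P = p - h * dV(q)
    Q = q + h * P
    return Q, P


def jacobian_det(q, p, h, eps=1e-6):
    """Determinant of d(Q, P)/d(q, p), estimated by central finite differences."""
    Qq1, Pq1 = step_from_action(q + eps, p, h)
    Qq0, Pq0 = step_from_action(q - eps, p, h)
    Qp1, Pp1 = step_from_action(q, p + eps, h)
    Qp0, Pp0 = step_from_action(q, p - eps, h)
    dQdq, dPdq = (Qq1 - Qq0) / (2 * eps), (Pq1 - Pq0) / (2 * eps)
    dQdp, dPdp = (Qp1 - Qp0) / (2 * eps), (Pp1 - Pp0) / (2 * eps)
    return dQdq * dPdp - dQdp * dPdq


for h in (0.05, 0.5, 2.0):  # from short to very long strides
    print(f"h = {h}: det = {jacobian_det(1.3, -0.4, h):.8f}")
```

A network that predicts (Q, P) directly carries no such constraint. This is one way to see why direct MD prediction can drift.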

August 8, 2025 at 5:46 AM

If you are excited about 30x longer time steps in molecular dynamics using FlashMD, but are worried about it not being symplectic, Filippo has something new cooking that should make you even more excited. Head to the #arxiv for a preview arxiv.org/html/2508.01...

July 24, 2025 at 1:38 AM

Thanks to the 🧑🚀🧑🚀🧑🚀 who put this together, Sofiia in particular, and thanks to the #metatrain team, as this would not have been so easy without their work: metatensor.github.io/metatrain/la...

July 24, 2025 at 1:38 AM

Two new recipes landed in the #atomistic-cookbook 🧑🍳📖: one explaining how to fine-tune the #PET-MAD universal model on a system-specific dataset, and one training a model with conservative fine-tuning. Check them out on atomistic-cookbook.org/examples/pet... and atomistic-cookbook.org/examples/pet...

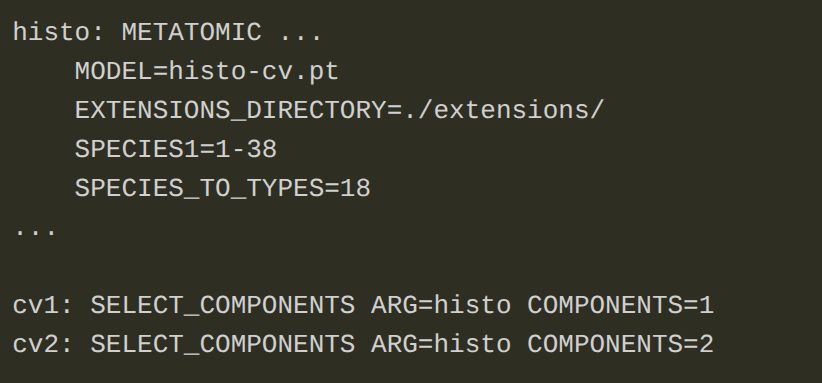

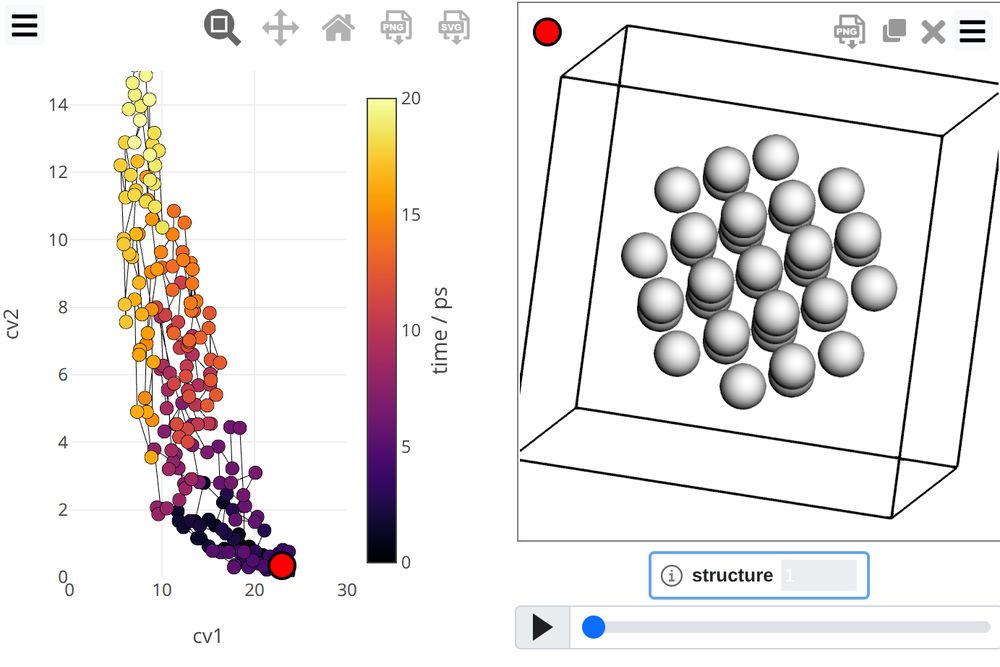

July 7, 2025 at 8:21 PM

Basically, you just need to define a `torch.nn.Module` with a specific API, and then you can define anything you like as a CV calculator. Export it as a .pt TorchScript model, and it's just one METATOMIC action away from being read into #plumed.

July 7, 2025 at 8:21 PM

New 🧑🍳📖 #recipe landed, doubling up as a @plumed.org tutorial 🐦 atomistic-cookbook.org/examples/met..., and explaining how to use the #metatomic interface in #plumed to define custom collective variables with all the flexibility and speed of torch.

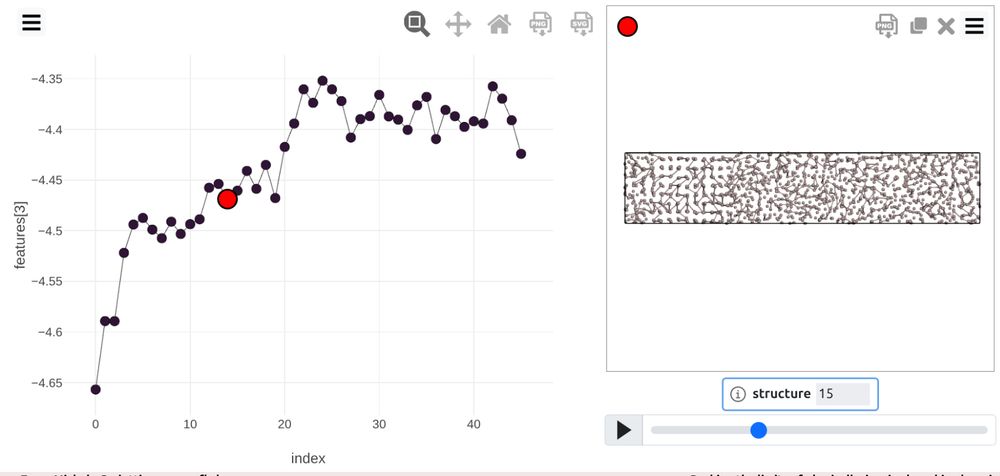

June 26, 2025 at 11:41 AM

As a nice side-effect, we distribute (well, PR still underway 😆) a featurizer based on PET-MAD latent features that you can use together with `chemiscope.explore` as a universal materials cartography tool: it even works out of the box to follow the melting of Al!

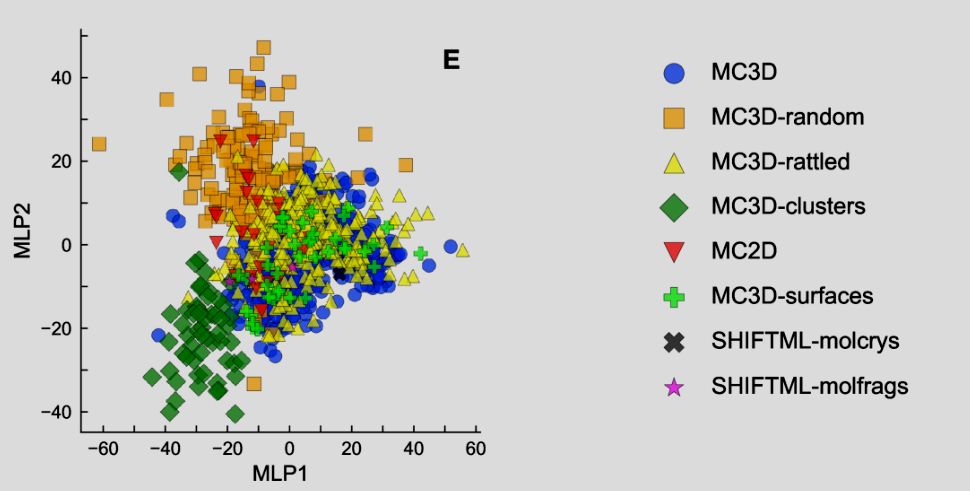

June 26, 2025 at 11:41 AM

Diverse data is good data! It took a while to polish it, but we have finally released the small-but-smart MAD dataset we used to train PET-MAD. You can find more in the #preprint arxiv.org/html/2506.19... or just head to the #materialscloud to fetch MAD archive.materialscloud.org/records/xdsb...

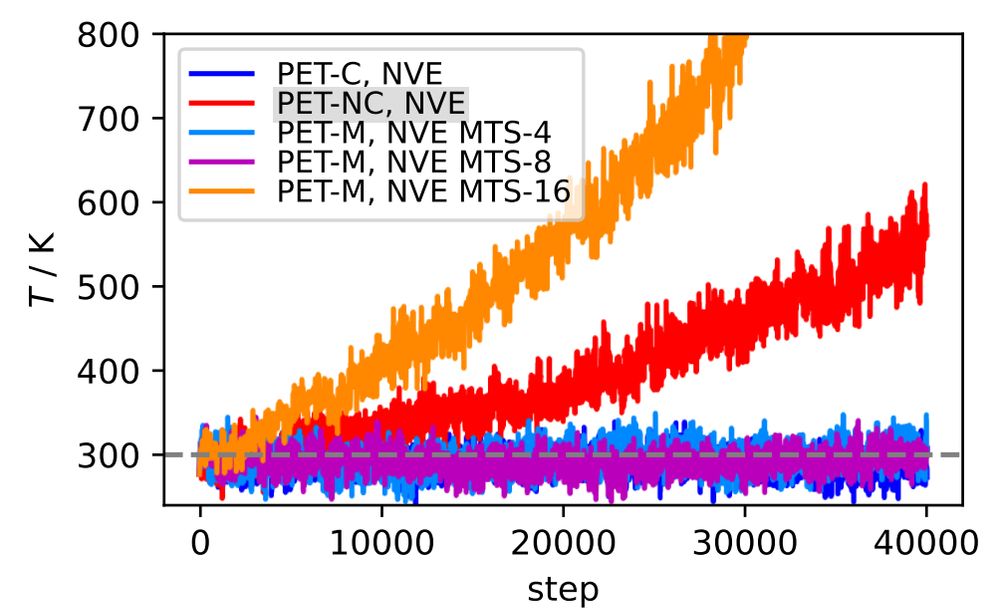

June 20, 2025 at 3:53 PM

And you can also use them with multiple-time-stepping #moleculardynamics, recovering most of the speed-up of direct forces while avoiding the sampling artefacts. Pretty sweet deal, and easy to realize with ipi-code.org, see the 🧑🍳📖 atomistic-cookbook.org/examples/pet...

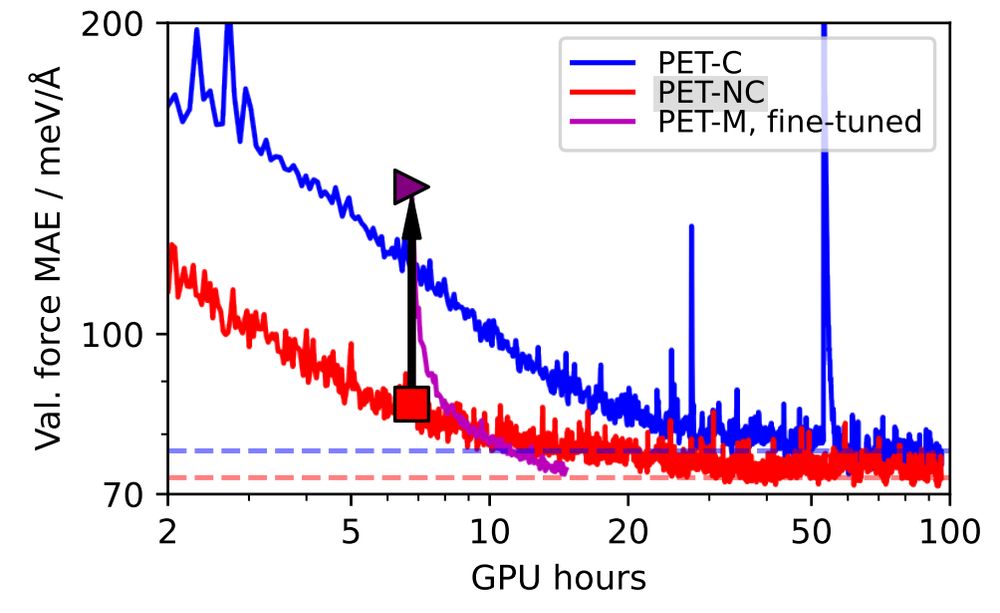
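This sketch illustrates multiple time stepping in the spirit of RESPA. The cheap direct force drives a few inner velocity-Verlet steps. The difference between the conservative and direct forces is applied as a half-kick at the start and end of each outer step. As a result, the expensive conservative force is needed only once per outer step (the end-of-step value can be reused at the next start, although the toy code simply recomputes it). This is a hypothetical 1D toy, with a harmonic "conservative" force and a "direct" force carrying a made-up error term. It is not the i-PI implementation, and the names and parameters are assumptions.

```python
import math


def f_conservative(x):
    """Stand-in for the back-propagated force: -dU/dx with U = x**2 / 2."""
    return -x


def f_direct(x):
    """Stand-in for a direct force prediction: exact force plus a made-up error."""
    return -x + 0.05 * math.sin(3.0 * x)


def mts_step(x, v, dt_outer, n_inner, mass=1.0):
    """One outer step: correction half-kick, n_inner Verlet steps, correction half-kick."""
    # Slow part: the (expensive) conservative force minus the (cheap) direct force.
    v += 0.5 * dt_outer * (f_conservative(x) - f_direct(x)) / mass
    dt = dt_outer / n_inner
    for _ in range(n_inner):  # fast part: velocity Verlet driven by the direct force
        v += 0.5 * dt * f_direct(x) / mass
        x += dt * v
        v += 0.5 * dt * f_direct(x) / mass
    v += 0.5 * dt_outer * (f_conservative(x) - f_direct(x)) / mass
    return x, v


def energy(x, v, mass=1.0):
    """Total energy measured with the conservative potential U = x**2 / 2."""
    return 0.5 * mass * v**2 + 0.5 * x**2


x, v = 1.0, 0.0
e0 = energy(x, v)
max_error = 0.0
for _ in range(2000):
    x, v = mts_step(x, v, dt_outer=0.1, n_inner=4)
    max_error = max(max_error, abs(energy(x, v) - e0))
print(f"max |E - E0| over 2000 outer steps: {max_error:.2e}")
```

Energy is measured with the conservative potential and stays bounded. This is because, over each outer step, the direct-force contributions cancel out and the net force driving the dynamics is the conservative one.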

June 20, 2025 at 3:53 PM

Better still, you can use direct forces to pre-train an ML potential, and then apply "conservative fine-tuning" with back-propagation, saving time and achieving similar (or better 😆) accuracy.

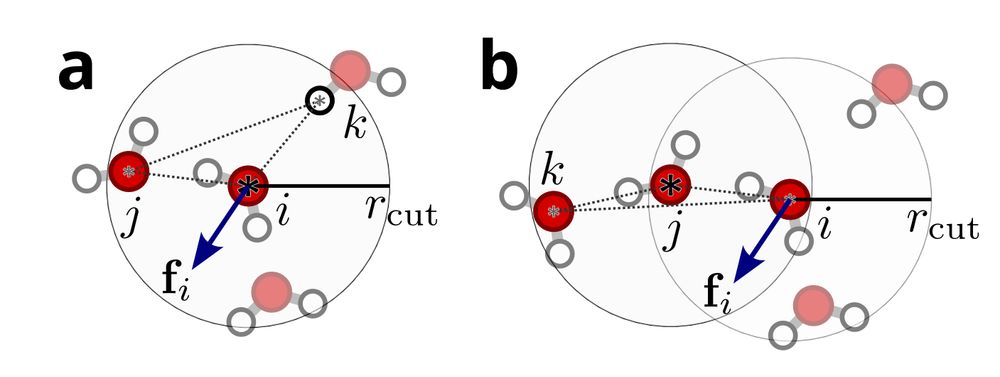

June 20, 2025 at 3:53 PM

We have, however, added quite a bit: from direct stresses to a discussion of why direct forces are shorter-ranged than those obtained by differentiating a potential with the same receptive radius.

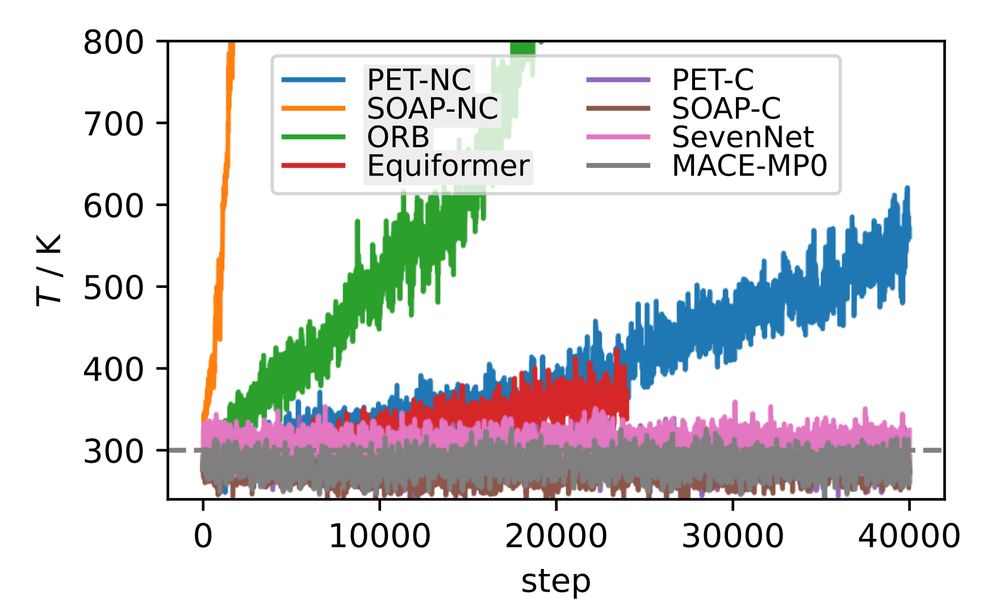
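The range argument can be checked on a toy model. Suppose each atomic energy depends on neighbors within a cutoff r_c. The force on atom i, -∂E/∂r_i, collects contributions from the energies of every atom within r_c of i, and each of those depends on its own neighbors. A conservative force can therefore feel atoms up to 2 r_c away, whereas a direct force predicted with the same receptive radius only feels r_c. The sketch uses a hypothetical embedded-atom-like energy on a 1D chain with r_c = 2.5, together with finite differences. It is not PET or any specific model. The many-body form matters: for a pure pair potential, the force range would stay r_c.

```python
R_CUT = 2.5  # hypothetical cutoff (receptive radius) of each atomic energy


def w(r):
    """Pair weight that vanishes, together with its first two derivatives, at R_CUT."""
    return (1.0 - r / R_CUT) ** 3 if r < R_CUT else 0.0


def total_energy(xs):
    """Sum of per-atom energies E_j = (sum_k w(r_jk))**2: many-body, not pairwise."""
    energy = 0.0
    for j, xj in enumerate(xs):
        rho = sum(w(abs(xj - xk)) for k, xk in enumerate(xs) if k != j)
        energy += rho**2
    return energy


def force_on(i, xs, h=1e-6):
    """Conservative force -dE/dx_i, by central finite differences."""
    plus, minus = list(xs), list(xs)
    plus[i] += h
    minus[i] -= h
    return -(total_energy(plus) - total_energy(minus)) / (2 * h)


def influences(m, xs, i=0, shift=0.05, tol=1e-8):
    """True if displacing atom m by `shift` changes the force on atom i."""
    moved = list(xs)
    moved[m] += shift
    return abs(force_on(i, moved) - force_on(i, xs)) > tol


chain = [float(k) for k in range(8)]  # atoms at x = 0, 1, ..., 7
for m in range(1, len(chain)):
    print(f"atom at distance {chain[m]:.0f}: changes force on atom 0? {influences(m, chain)}")
```

Atoms at distances 3 and 4, which lie beyond r_c but within 2 r_c, change the force on atom 0, while atoms at distance 5 and beyond do not.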

June 20, 2025 at 3:53 PM

The conclusions haven't changed: using direct force predictions in atomistic simulations is a bad idea. They don't conserve energy, they lead to unstable geometry optimizations, and they break energy equipartition when used with thermostats. Clear from the plot: conservative 👌, non-conservative 💥.

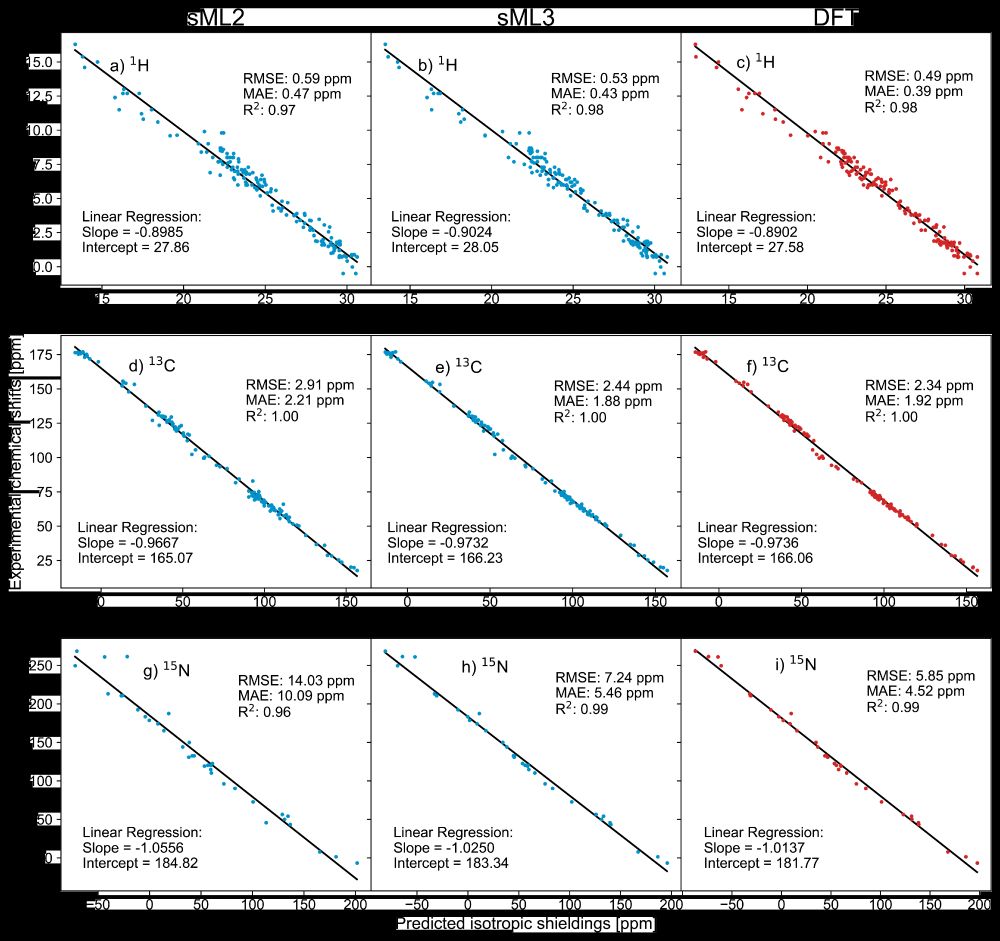

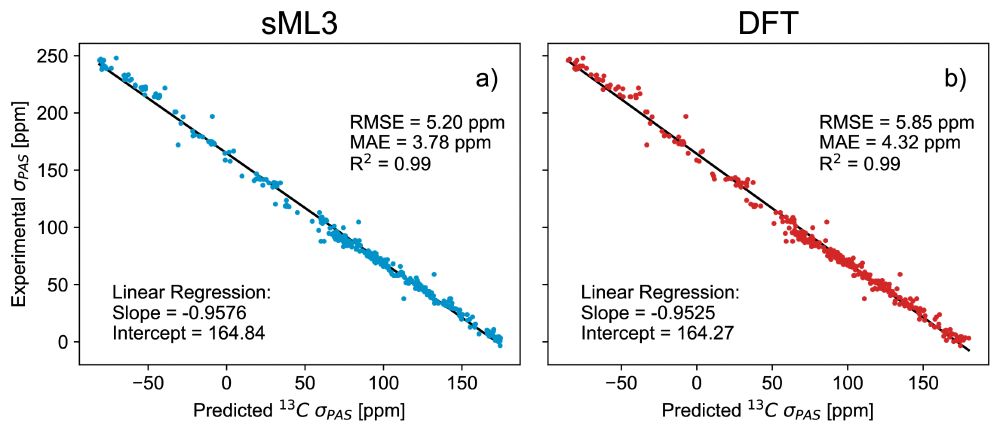
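A closed-path argument shows why direct forces cannot conserve energy. A conservative force F = -∇U does zero net work around any closed path. A force field with a non-zero curl, which a freely predicted vector field generally has, does net work on every loop. The sketch integrates the work numerically around the unit circle for a toy conservative field, and for the same field with a small rotational error. Both fields and the `eps` value are hypothetical.

```python
import math


def conservative_force(x, y):
    """F = -grad U for U = (x**2 + y**2) / 2 (hypothetical potential)."""
    return -x, -y


def direct_force(x, y, eps=0.1):
    """Hypothetical 'direct' prediction: same field plus a small curl (rotational) error."""
    fx, fy = conservative_force(x, y)
    return fx - eps * y, fy + eps * x


def work_around_unit_circle(force, n=10000):
    """Line integral of force . dr around the unit circle (counter-clockwise), midpoint rule."""
    work = 0.0
    for k in range(n):
        t0, t1 = 2 * math.pi * k / n, 2 * math.pi * (k + 1) / n
        tm = 0.5 * (t0 + t1)
        fx, fy = force(math.cos(tm), math.sin(tm))
        dx, dy = math.cos(t1) - math.cos(t0), math.sin(t1) - math.sin(t0)
        work += fx * dx + fy * dy
    return work


print(f"conservative: {work_around_unit_circle(conservative_force):+.6f}")
print(f"direct:       {work_around_unit_circle(direct_force):+.6f}  (2*pi*eps = {2 * math.pi * 0.1:.6f})")
```

The second value comes out as 2π·eps, so a particle circulating in such a field keeps gaining energy. This is one way to picture the energy drift of non-conservative MD.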

June 17, 2025 at 1:18 PM

🎉 DFT-accurate, with built-in uncertainty quantification, and providing chemical shielding anisotropy: ShiftML3.0 has it all! Building on a successful @nccr-marvel.bsky.social-funded collaboration with LRM🧲⚛️, it just landed on the arXiv arxiv.org/html/2506.13... and on PyPI pypi.org/project/shif...

June 13, 2025 at 7:32 AM

#metatensor day about to start! Join us on Zoom if you're not at #EPFL epfl.zoom.us/j/68368776745 @nccr-marvel.bsky.social

May 27, 2025 at 7:03 AM

If you do, the rewards can be very impressive: you can run solvated alanine dipeptide and observe superionic behavior in LiPS with a 16 fs time step, and watch the Al(110) surface pre-melt in strides of 64 fs. And all with the same universal model, no fine-tuning needed!