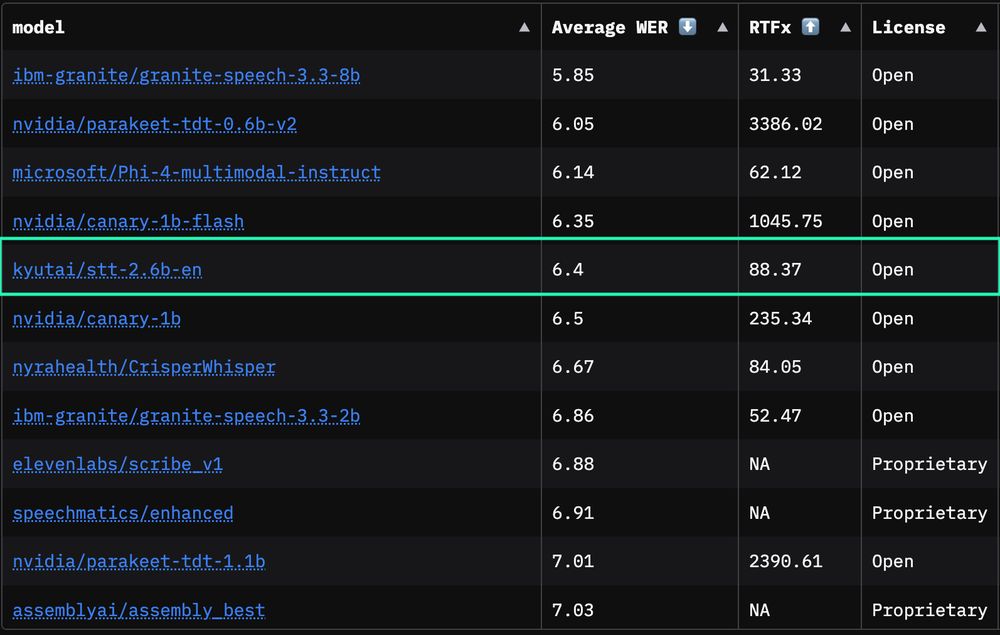

While all other models need the whole audio, ours delivers top-tier accuracy on streaming content.

Open, fast, and ready for production!

It sees, understands, and talks about images — naturally, and out loud.

This opens up new applications, from audio description for the visually impaired to visual access to information.

Hibiki produces spoken and text translations of the input speech in real-time, while preserving the speaker’s voice and optimally adapting its pace based on the semantic content of the source speech. 🧵

huggingface.co/kyutai/heliu...
