I really like the "function matching" angle we discovered (or rediscovered) in one of our papers that partially demystifies distillation for me: arxiv.org/abs/2106.05237

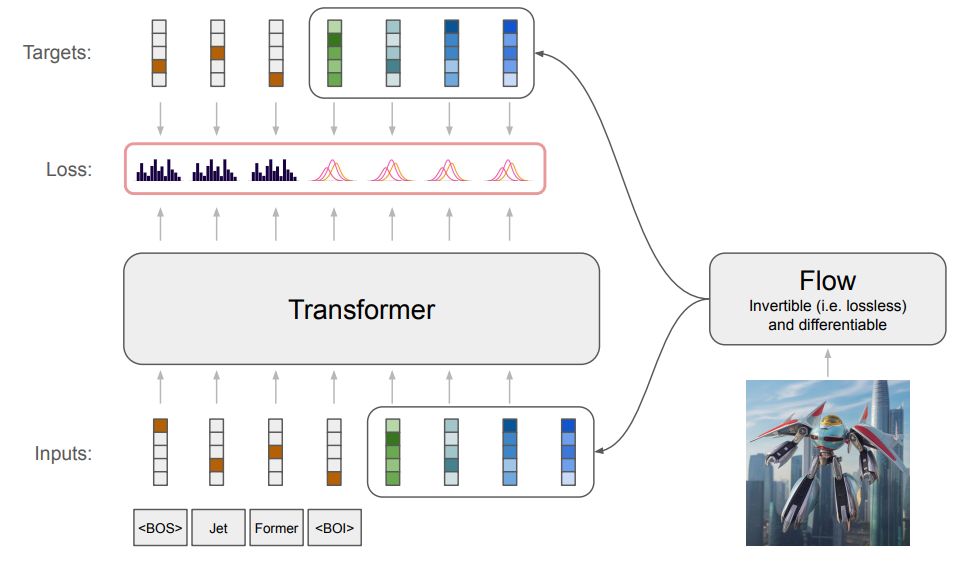
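To make the "function matching" view concrete, here is a minimal sketch, assuming the usual setup where teacher and student see the exact same augmented view and the student minimizes the KL divergence to the teacher's predicted distribution. The toy `teacher` and `student` functions, the `view` values, and the helper names are all hypothetical stand-ins, not code from the paper.

```python
import math

def softmax(logits, temperature=1.0):
    """Numerically stable softmax with an optional temperature."""
    scaled = [l / temperature for l in logits]
    m = max(scaled)
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def kl_divergence(p, q):
    """KL(p || q) for two discrete distributions with q > 0 everywhere."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0.0)

def function_matching_loss(teacher_fn, student_fn, view, temperature=1.0):
    """Distillation loss where both models receive the SAME input view."""
    p_teacher = softmax(teacher_fn(view), temperature)
    p_student = softmax(student_fn(view), temperature)
    return kl_divergence(p_teacher, p_student)

# Hypothetical toy models mapping a 2-d input to 3 class logits.
def teacher(x):
    return [2.0 * x[0], -1.0 * x[1], 0.5]

def student(x):
    return [1.5 * x[0], -0.5 * x[1], 0.0]

view = [0.3, -0.7]  # stands in for one augmented crop shared by both models
loss = function_matching_loss(teacher, student, view)
self_loss = function_matching_loss(teacher, teacher, view)
```

The loss is zero only when the student reproduces the teacher's output distribution on that view, which is the sense in which the student is matching a function rather than fitting labels.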

We pondered this over the summer and developed a new model: JetFormer 🌊🤖

arxiv.org/abs/2411.19722

A thread 👇

1/

My favorite mini-insight is how implicit half-precision matrix multiplications (with float32 accumulation) can 'eat' entropy and lead to an overly optimistic, flawed objective and evaluations.
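A toy illustration of one way this can happen (my reading, not the paper's analysis): rounding inputs to half precision collapses many distinct dequantized values onto a few representable numbers, so noise entropy that the objective accounts for is silently discarded. The sketch assumes IEEE float16 via the standard-library `struct` module; the pixel value and noise grid are hypothetical.

```python
import struct

def to_float16(x):
    """Round a Python float to the nearest IEEE half-precision value."""
    return struct.unpack("e", struct.pack("e", x))[0]

pixel = 200              # hypothetical 8-bit pixel intensity
num_noise_levels = 1000  # evenly spaced stand-ins for uniform dequantization noise

# Dequantized values fill the bin [pixel/256, (pixel+1)/256).
dequantized = [(pixel + i / num_noise_levels) / 256.0
               for i in range(num_noise_levels)]

# What a half-precision operand would actually see.
rounded = [to_float16(v) for v in dequantized]

distinct_full = len(set(dequantized))  # all noise levels stay distinguishable
distinct_half = len(set(rounded))      # only a handful survive the rounding
```

With float32 accumulation the sums themselves stay accurate, yet the operands have already lost the low-order bits, so the map is no longer one-to-one on the noisy inputs.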

Another contribution is a demonstration that transfer learning is effective in mitigating overfitting. The recipe is: pretrain on a large image database and then fine-tune to a small dataset, e.g., CIFAR-10.

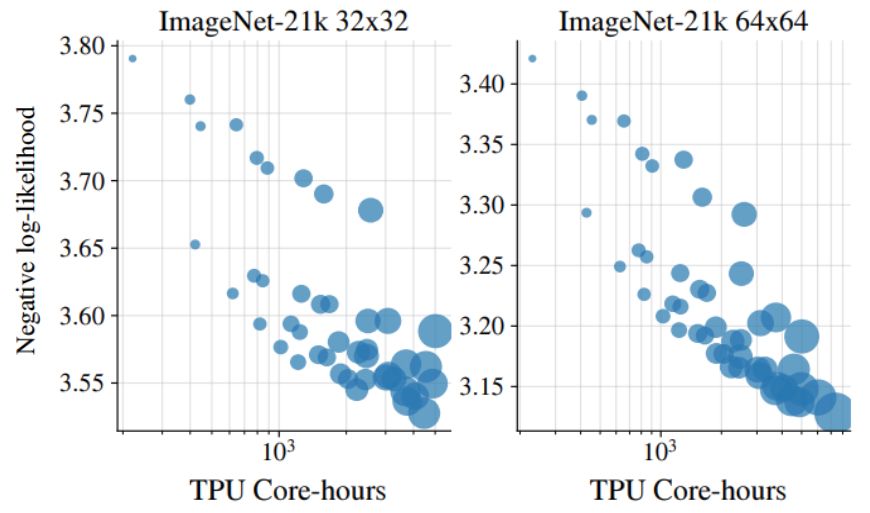

These are the results of varying the Jet model size when training on ImageNet-21k images:

❌ invertible dense layer

❌ ActNorm layer

❌ multiscale latents

❌ dequant. noise

and @asusanopinto.bsky.social.

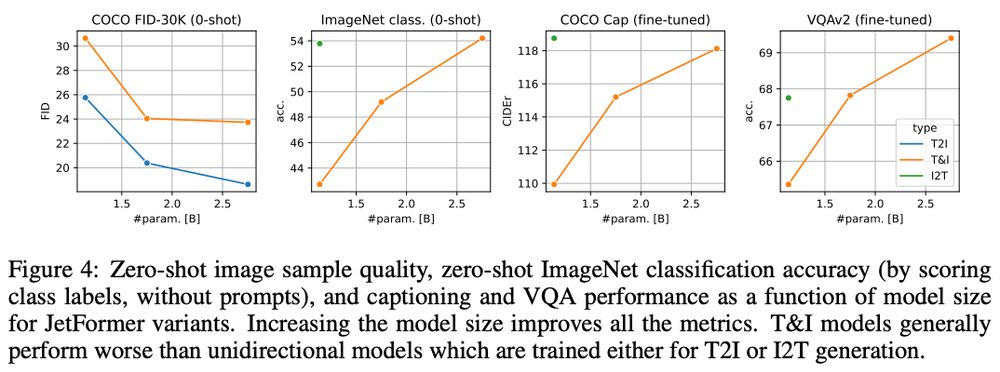

Very excited about this model due to its potential to unify multimodal learning with a simple and universal end-to-end approach.

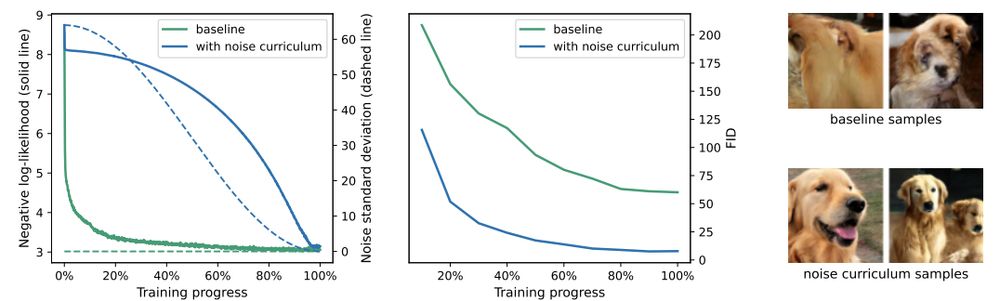

Even though it is inspired by diffusion, it is very different: it only affects training and does not require iterative denoising during inference.

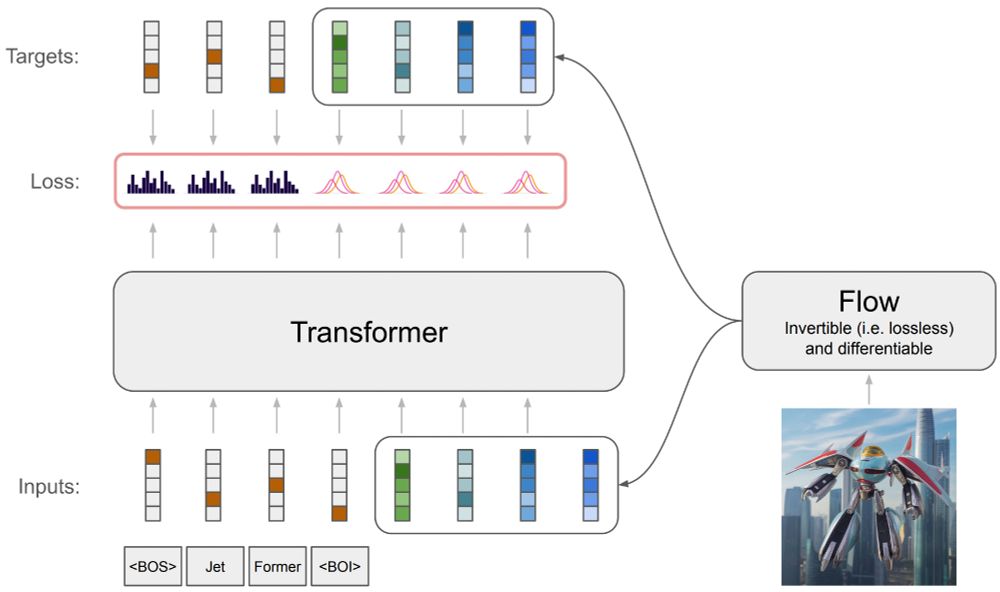

There is a small twist though. An image input is re-encoded with a normalizing flow model, which is trained jointly with the main transformer model.
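For intuition on why a flow can be trained jointly by likelihood, here is a minimal sketch of the change-of-variables rule on a 1-d affine flow. It assumes a standard normal density on the latent as a stand-in for the transformer's density, and `scale` and `shift` are hypothetical parameters, so this is an illustration of the principle and not the actual JetFormer architecture.

```python
import math

def standard_normal_logpdf(z):
    """Log-density of N(0, 1); stands in for the latent model's density."""
    return -0.5 * (z * z + math.log(2.0 * math.pi))

def flow_forward(x, scale, shift):
    """Invertible affine map x -> z, returning z and log|dz/dx|."""
    z = scale * x + shift
    log_det = math.log(abs(scale))
    return z, log_det

def log_likelihood(x, scale, shift):
    """Change of variables: log p(x) = log p(z) + log|det dz/dx|."""
    z, log_det = flow_forward(x, scale, shift)
    return standard_normal_logpdf(z) + log_det

ll = log_likelihood(0.8, scale=2.0, shift=-1.0)
```

Because the log-determinant term is part of the objective, gradients from the latent density reach the flow parameters too, which is what lets both parts be optimized end to end.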
