Interested in MARL, Social Reasoning, and Collective Decision Making in people, machines, and other organisms

kjha02.github.io

While initial program generation takes time, the inferred code can be executed rapidly, making it orders of magnitude more efficient than other LLM-based methods for long-horizon predictions.

ROTE still significantly outperformed all LLM-based and behavior cloning baselines for high-level action prediction in this domain!

Programs inferred from one environment transfer to new settings more effectively than all other baselines. ROTE's learned programs transfer without needing to re-incur the cost of text generation.

We collected human gameplay data and found ROTE not only outperformed all baselines but also achieved human-level performance when predicting the trajectories of real people!

We use LLMs to generate Python programs 💻 that model observed behavior, then use Bayesian inference to select the most likely ones. The result: a dynamic, composable, and analyzable predictive representation!
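A minimal sketch of the "Bayesian inference over candidate programs" idea, not the paper's implementation. Everything here is an assumption for illustration: the two hand-written candidate programs stand in for LLM-generated code, the 1-D observation format is hypothetical, and the noise model (the agent follows its program with probability `1 - eps`, otherwise picks another action uniformly) is just one simple likelihood choice.

```python
import math

ACTIONS = ["left", "right", "stay"]

# Hypothetical candidate programs; in practice these would be LLM-generated.
def always_right(obs):
    return "right"

def move_toward_goal(obs):
    if obs["agent"] < obs["goal"]:
        return "right"
    if obs["agent"] > obs["goal"]:
        return "left"
    return "stay"

def posterior(programs, trajectory, eps=0.1):
    """Posterior over programs given (obs, action) pairs, with a uniform prior.

    Assumed likelihood: the observed action matches the program's output
    with probability 1 - eps; otherwise it is uniform over other actions.
    """
    log_scores = []
    for prog in programs:
        log_p = math.log(1.0 / len(programs))  # uniform prior
        for obs, action in trajectory:
            if prog(obs) == action:
                p = 1.0 - eps
            else:
                p = eps / (len(ACTIONS) - 1)
            log_p += math.log(p)
        log_scores.append(log_p)
    # Normalize in log space for numerical stability.
    m = max(log_scores)
    weights = [math.exp(s - m) for s in log_scores]
    total = sum(weights)
    return [w / total for w in weights]

# Observed behavior: the agent walks right to the goal, then stops.
trajectory = [
    ({"agent": 0, "goal": 2}, "right"),
    ({"agent": 1, "goal": 2}, "right"),
    ({"agent": 2, "goal": 2}, "stay"),
]
programs = [always_right, move_toward_goal]
post = posterior(programs, trajectory)
best = programs[post.index(max(post))]
print(best.__name__, [round(p, 3) for p in post])
```

Once a program is selected, predicting the next action is just a function call (`best(obs)`), which is why execution is cheap compared with querying an LLM at every step.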

Our new paper shows that AI that models others’ minds as Python code 💻 can quickly and accurately predict human behavior!

shorturl.at/siUYI%F0%9F%...

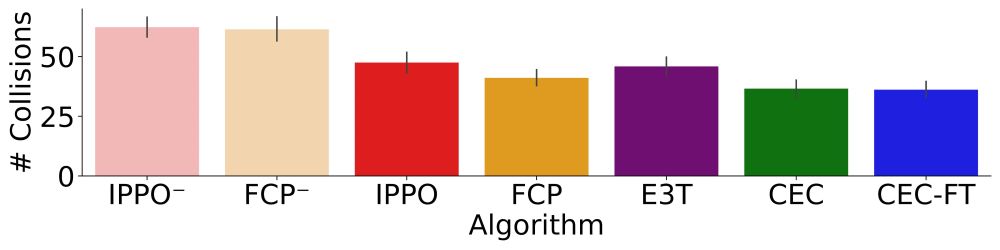

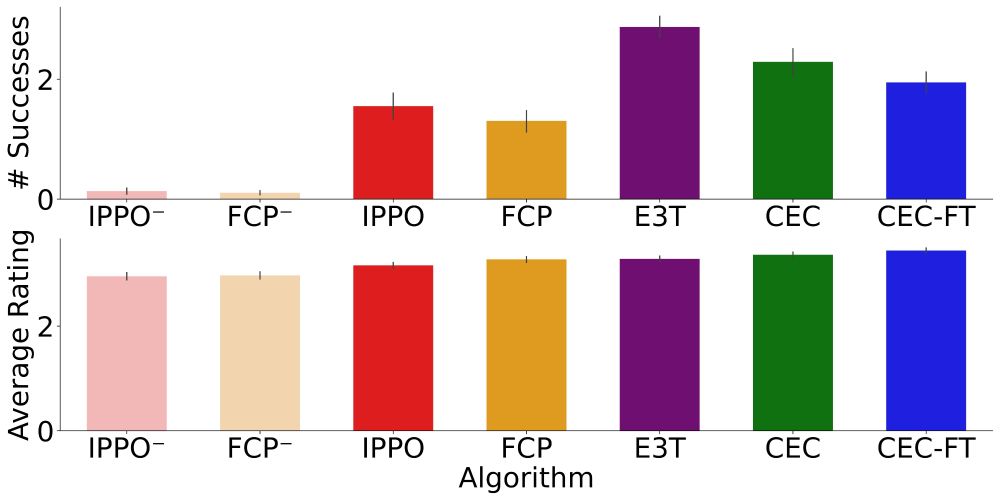

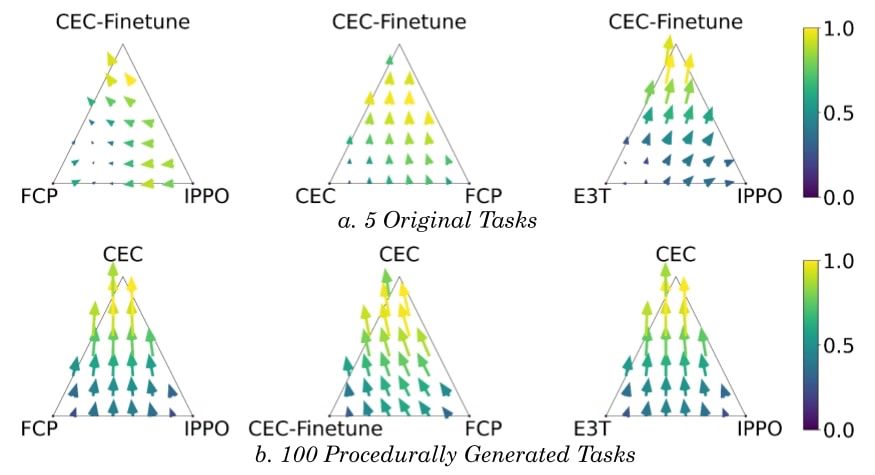

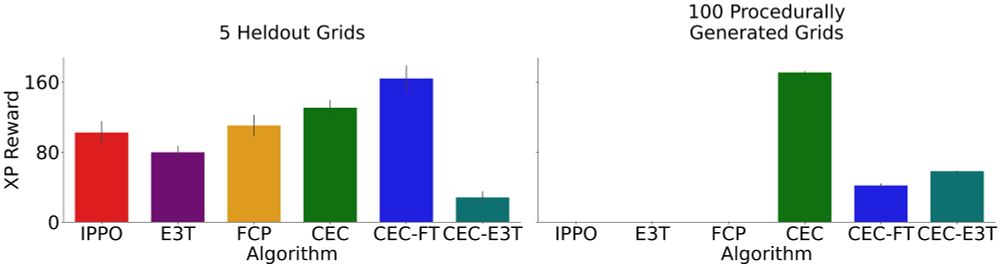

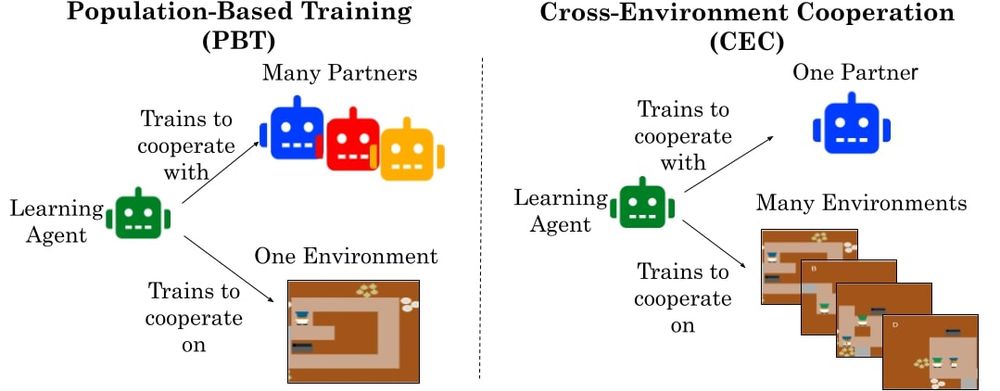

This increased adaptability reflects general norms for cooperation learned across many environments, not just memorized strategies.

When diverse agents must collaborate, the CEC-trained agents are selected for their adaptability and cooperative skills.

Even more impressive: CEC agents outperform methods that were specifically trained on the test environment but struggle to adapt to new partners!

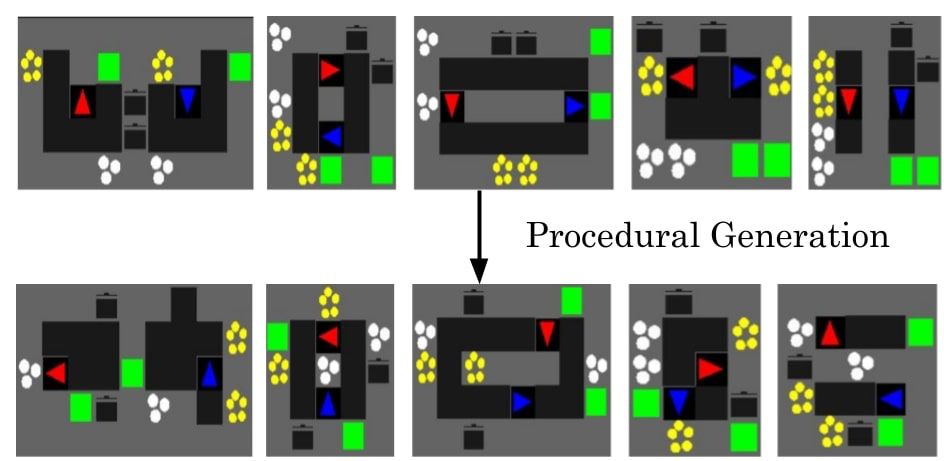

Unlike prior work studying only 5 layouts, we can now study cooperative skill transfer at unprecedented scale (1.16e17 possible environments)!

Code available at: shorturl.at/KxAjW

Agents trained in self-play across many environments learn cooperative norms that transfer to humans on novel tasks.

shorturl.at/fqsNN%F0%9F%...
