Katherine Lee

@katherinelee.bsky.social

Researcher at OpenAI and at the GenLaw Center.

I just want things to work (:

https://katelee168.github.io/

Reposted by Katherine Lee

Llama 3.1 70B contains copies of nearly the entirety of some books. Harry Potter is just one of them. I don’t know if this means it’s an infringing copy. But the first question to answer is if it’s a copy at all/in the first place. That’s what our new results suggest:

arxiv.org/abs/2505.12546

Extracting memorized pieces of (copyrighted) books from open-weight language models

Plaintiffs and defendants in copyright lawsuits over generative AI often make sweeping, opposing claims about the extent to which large language models (LLMs) have memorized plaintiffs' protected expr...

arxiv.org

May 21, 2025 at 11:20 AM

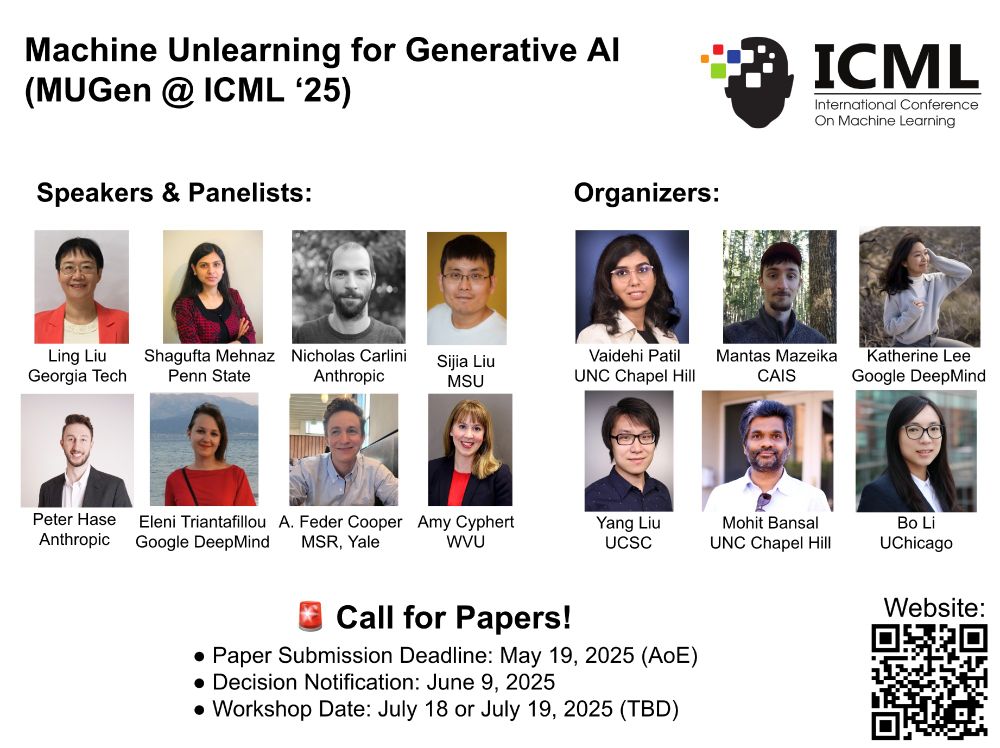

Come chat about unlearning with us!!

🚨Exciting @icmlconf.bsky.social workshop alert 🚨

We’re thrilled to announce the #ICML2025 Workshop on Machine Unlearning for Generative AI (MUGen)!

⚡Join us in Vancouver this July to dive into cutting-edge research on unlearning in generative AI with top speakers and panelists! ⚡

April 2, 2025 at 4:57 PM

Reposted by Katherine Lee

We’ve been receiving a bunch of questions about a CFP for GenLaw 2025.

We wanted to let you know that we chose not to submit a workshop proposal this year (we need a break!!). We’ll be at ICML though and look forward to catching up there!

You can watch our prior videos!

March 9, 2025 at 8:33 PM

Nicholas is leaving GDM at the end of this week, and we're feeling big sad about it: nicholas.carlini.com/writing/2025...

Career Update: Google DeepMind -> Anthropic

nicholas.carlini.com

March 5, 2025 at 9:56 PM

Reposted by Katherine Lee

📢 The First Workshop on Large Language Model Memorization (L2M2) will be co-located with @aclmeeting.bsky.social in Vienna 🎉

💡 L2M2 brings together researchers to explore memorization from multiple angles. Whether it's text-only LLMs or Vision-language models, we want to hear from you! 🌍

January 27, 2025 at 9:51 PM

Reposted by Katherine Lee

Registration for CSLaw 2025 is now open! Please share far and wide!

Early bird prices are available until February 24. The main conference will begin March 25!

Register here: web.cvent.com/event/dbf97d...

4th ACM Symposium on Computer Science & Law (CS&Law 2025).

The ACM Symposium on Computer ...

web.cvent.com

February 2, 2025 at 1:27 PM

Reposted by Katherine Lee

New paper on why machine "unlearning" is much harder than it seems is now up on arXiv: arxiv.org/abs/2412.06966 This was a huuuuuge cross-disciplinary effort led by @msftresearch.bsky.social FATE postdoc @grumpy-frog.bsky.social!!!

Machine Unlearning Doesn't Do What You Think: Lessons for Generative AI Policy, Research, and Practice

We articulate fundamental mismatches between technical methods for machine unlearning in Generative AI, and documented aspirations for broader impact that these methods could have for law and policy. ...

arxiv.org

December 14, 2024 at 12:55 AM

Reposted by Katherine Lee

My paper with @jtlg.bsky.social, Daniel Ho, A. Feder Cooper, and a host of computer science folks on the limits of AI "unlearning" of data and content is now posted on arXiv:

arxiv.org/abs/2412.06966

Machine Unlearning Doesn't Do What You Think: Lessons for Generative AI Policy, Research, and Practice

We articulate fundamental mismatches between technical methods for machine unlearning in Generative AI, and documented aspirations for broader impact that these methods could have for law and policy. ...

arxiv.org

December 11, 2024 at 7:46 PM
